The most consequential conversations happening in boardrooms and engineering pods today are no longer about which large language model to choose, but about the sophisticated architectural frameworks required to make them truly work for the enterprise. As these powerful models move from isolated experiments into the very fabric of corporate infrastructure, the focus has decisively shifted. Simply plugging in a new LLM is not a strategy; it is a liability waiting to happen. The real challenge lies in building a resilient system around the model.

Selecting the right architectural pattern has become the paramount concern for achieving the reliability, scalability, and governance necessary in production environments. Without a coherent blueprint, even the most advanced model can produce unreliable outputs, fail to scale under load, or violate compliance mandates. This discussion will explore the essential patterns that form the backbone of modern, production-grade LLM applications, from data grounding and precision prompting to fully automated, agent-driven workflows.

Navigating the Shift from Standalone Models to Integrated AI Systems

The evolution of large language models has been rapid and transformative, moving them from the realm of academic curiosities to indispensable components of enterprise systems. Initially treated as black-box generators, LLMs are now understood as powerful but incomplete engines that require extensive integration to function effectively. This transition marks a critical turning point where the surrounding architecture—the data pipelines, the control mechanisms, and the feedback loops—is recognized as being just as important as the model itself. The consensus among leading practitioners is that long-term value is not unlocked by the raw intelligence of a model, but by the robustness of the system in which it operates.

This shift necessitates a deeper understanding of architectural design patterns. For organizations aiming to deploy AI solutions that are not only powerful but also trustworthy and maintainable, choosing the right combination of patterns is a foundational strategic decision. It dictates how the system will handle factual inaccuracies, interact with other enterprise tools, adapt to user needs, and adhere to safety protocols. In this new landscape, the architectural blueprint determines whether an LLM application becomes a sustainable competitive advantage or a costly, unmanageable risk.

Deconstructing the Architectural Blueprint of Modern LLM Applications

The Foundational Layer: Grounding LLMs in Reality with RAG and Precision Prompting

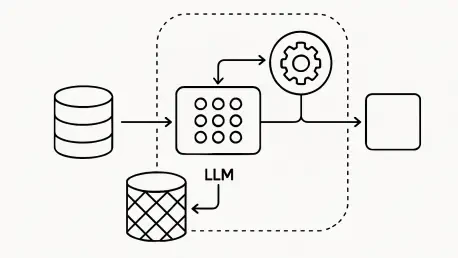

Retrieval-Augmented Generation (RAG) has become one of the most widely adopted patterns for reducing hallucinations and grounding LLM outputs in verifiable facts. By connecting a model to an external, curated knowledge base (internal company documents, a technical database, or real-time data feeds), RAG gives a probabilistic text generator access to facts it was never trained on. The system fetches relevant, up-to-date information before generating a response and places it in the prompt, which makes outputs more likely to be accurate and lets each claim be attributed to a source. The model can still misread or ignore a retrieved passage, so retrieval reduces hallucination rather than eliminating it.
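The retrieve-then-generate flow can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Passage` type, the three-entry knowledge base, and the word-overlap scoring, which stands in for the embedding search a production retriever would more likely use. The sketch stops at building the prompt and does not call any model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str  # hypothetical document identifier, used for attribution
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {word.strip(".,;:!?()").lower() for word in text.split()} - {""}


def retrieve(query: str, passages: list[Passage], k: int = 2) -> list[Passage]:
    """Rank passages by word overlap with the query and keep the top k.

    Word overlap is a stand-in for embedding similarity search.
    """
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(p.text)), p) for p in passages]
    # Drop passages with no overlap so unrelated text never reaches the prompt.
    relevant = [(score, p) for score, p in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in relevant[:k]]


def build_grounded_prompt(query: str, retrieved: list[Passage]) -> str:
    """Place retrieved passages ahead of the question, tagged by source."""
    context = "\n".join(f"[{p.source}] {p.text}" for p in retrieved)
    return (
        "Answer using only the context below and cite the source tags.\n"
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base for illustration only.
knowledge_base = [
    Passage("returns-policy", "Items can be returned within 30 days of delivery."),
    Passage("shipping-faq", "Standard shipping takes five business days."),
    Passage("warranty", "Hardware carries a two year limited warranty."),
]

question = "How many days do I have to return items?"
retrieved = retrieve(question, knowledge_base)
prompt = build_grounded_prompt(question, retrieved)
print(prompt)
```

Tagging each passage with its source is what makes attribution possible later: the model can be asked to cite the tags, and a reviewer can trace an answer back to the document it came from.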

This approach stands in contrast to relying solely on a model’s static, pre-trained knowledge. While fine-tuning can adapt a model to a specific domain’s vocabulary and style, many practitioners prefer RAG for supplying facts that change often, because updating a knowledge base is typically faster and cheaper than retraining a model. Complementing RAG are the techniques of prompt engineering. Zero-shot prompting states the task with no examples, few-shot prompting includes a handful of worked input-output pairs, and Chain-of-Thought prompting asks the model to reason through intermediate steps before answering. These techniques serve as the primary interface for instructing the model and provide the granular control needed to steer it toward a desired outcome, making the combination of retrieval and precise prompting the bedrock of reliable LLM systems.
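The difference between the three prompting styles is easiest to see side by side. The helper names and the sentiment task below are hypothetical, and the exact wording of each template is only one reasonable choice among many.

```python
def zero_shot(task: str, text: str) -> str:
    """State the task and the input, with no examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot(task: str, examples: list[tuple[str, str]], text: str) -> str:
    """Show worked input-output pairs before the real input."""
    shots = "\n\n".join(f"Input: {inp}\nOutput: {out}" for inp, out in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


def chain_of_thought(task: str, text: str) -> str:
    """Ask for intermediate reasoning before the final answer."""
    return (
        f"{task}\n\nInput: {text}\n"
        "Think through the problem step by step, then give the final "
        "answer on a line starting with 'Answer:'."
    )


# Hypothetical task and examples for illustration.
task = "Classify the sentiment of the input as positive or negative."
examples = [
    ("The delivery was late again.", "negative"),
    ("Setup took two minutes.", "positive"),
]
review = "The battery died after a week."

print(zero_shot(task, review))
print("---")
print(few_shot(task, examples, review))
print("---")
print(chain_of_thought(task, review))
```

Asking for the final answer on a marked line is a small design choice that makes the response easier to parse once the reasoning text is no longer needed.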

The Execution Layer: Empowering LLMs to Act and Orchestrate Complex Workflows

The conversation around LLMs has moved beyond simple text generation to dynamic action, a leap made possible by agent-based architectures. This pattern empowers an LLM to function as a central orchestrator, capable of interacting with external APIs, querying databases, and utilizing other software tools to execute tasks. Instead of just answering a question, an agent-based system can book a flight, analyze sales data, or manage a customer support ticket. This transition from passive information retrieval to active task completion represents a significant expansion of what AI can achieve within an organization.
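At the core of an agent-based system is a dispatch step that turns a model's proposed action into a real function call. The sketch below assumes the model emits its request as a JSON object with `tool` and `args` keys; that format, the `get_order_status` tool, and its in-memory order table are all hypothetical. No model is called here, so the raw strings stand in for model output.

```python
import json
from typing import Callable


def get_order_status(order_id: str) -> str:
    """Hypothetical tool; a real one would call an order-management API."""
    orders = {"A100": "shipped", "A101": "processing"}
    if order_id not in orders:
        raise KeyError(f"unknown order {order_id}")
    return orders[order_id]


# Registry of callable tools and the argument names each one requires.
TOOLS: dict[str, tuple[Callable[..., str], set[str]]] = {
    "get_order_status": (get_order_status, {"order_id"}),
}


def run_tool_call(raw_call: str) -> dict:
    """Validate and execute one model-proposed tool call.

    Returns {"ok": True, "result": ...} or {"ok": False, "error": ...}
    so the caller can feed failures back to the model or to a human.
    """
    try:
        call = json.loads(raw_call)
    except json.JSONDecodeError:
        return {"ok": False, "error": "malformed JSON"}
    if not isinstance(call, dict):
        return {"ok": False, "error": "call must be a JSON object"}

    name = call.get("tool")
    args = call.get("args", {})
    # Only tools in the registry may run; anything else is refused.
    if not isinstance(name, str) or name not in TOOLS:
        return {"ok": False, "error": f"tool not allowed: {name}"}

    func, expected = TOOLS[name]
    if not isinstance(args, dict) or set(args) != expected:
        return {"ok": False, "error": f"expected arguments {sorted(expected)}"}

    try:
        return {"ok": True, "result": func(**args)}
    except Exception as exc:  # a tool failure must not crash the agent loop
        return {"ok": False, "error": f"tool failed: {exc}"}


print(run_tool_call('{"tool": "get_order_status", "args": {"order_id": "A100"}}'))
print(run_tool_call('{"tool": "delete_all_orders", "args": {}}'))
```

Returning a structured error instead of raising keeps the loop alive: the failure can be passed back to the model as an observation or escalated to a person.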

However, granting LLMs this level of agency introduces new operational risks that must be carefully managed. The consensus among architects is that robust supervision, validation, and error-handling mechanisms are essential, especially in high-stakes applications. Workflow automation patterns extend this capability, allowing LLMs to serve as the orchestrating brain for complex, multi-step business processes. For instance, an LLM could manage an entire content creation pipeline, from drafting an article and sending it for human review to incorporating feedback and scheduling it for publication. Successfully implementing these patterns requires a thoughtful balance between empowerment and control.
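One way to balance empowerment and control in a workflow like the content pipeline is an explicit state machine in which certain transitions are reserved for people. The stages, event names, and actor labels below are assumptions chosen to mirror the draft, review, revise, and schedule steps described above; they are a sketch, not a prescribed design.

```python
from enum import Enum


class Stage(Enum):
    DRAFT = "draft"
    IN_REVIEW = "in_review"
    REVISION = "revision"
    SCHEDULED = "scheduled"


# Allowed (stage, event) pairs; any other combination is rejected.
TRANSITIONS = {
    (Stage.DRAFT, "submit"): Stage.IN_REVIEW,
    (Stage.IN_REVIEW, "approve"): Stage.SCHEDULED,
    (Stage.IN_REVIEW, "request_changes"): Stage.REVISION,
    (Stage.REVISION, "submit"): Stage.IN_REVIEW,
}

# Review decisions stay with a person; the LLM may only draft and resubmit.
HUMAN_ONLY_EVENTS = {"approve", "request_changes"}


def advance(stage: Stage, event: str, actor: str) -> Stage:
    """Return the next stage, enforcing both the graph and the actor rule."""
    if event in HUMAN_ONLY_EVENTS and actor != "human":
        raise PermissionError(f"{event} requires a human reviewer")
    try:
        return TRANSITIONS[(stage, event)]
    except KeyError:
        raise ValueError(f"cannot {event} from {stage.value}") from None


# Walk one article through the pipeline.
stage = Stage.DRAFT
stage = advance(stage, "submit", actor="llm")             # draft sent for review
stage = advance(stage, "request_changes", actor="human")  # reviewer asks for edits
stage = advance(stage, "submit", actor="llm")             # revised draft resubmitted
stage = advance(stage, "approve", actor="human")          # reviewer signs off
print(stage)
```

Because the rules live in a table rather than in the model's instructions, a misbehaving model cannot talk its way past the review gate.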

The Sophistication Layer: Tailoring Intelligence with Adaptive and Multi-Modal Designs

As organizations mature in their AI adoption, the focus often shifts from general-purpose models to deeply specialized applications that deliver a distinct competitive advantage. A key strategy for achieving this is personalization, where the system adapts its responses based on individual user history, stated preferences, or immediate context. This creates a more relevant and effective user experience, turning a generic tool into a tailored assistant. Many developers are also exploring hybrid models, which combine the strengths of LLMs with traditional machine learning algorithms for tasks like classification or structured data prediction.

Furthermore, the barrier of text-only interaction is being dismantled by multi-modal systems. By integrating capabilities to process and understand images, audio, and other data formats, these applications can create far richer and more context-aware interactions. A system might analyze a user-submitted photograph of a broken part and an accompanying voice note describing the issue to generate a precise diagnosis and repair instructions. These sophisticated designs challenge the one-size-fits-all assumption, proving that the most powerful applications often arise from creatively combining different AI technologies to solve specific business problems.

The Governance Layer: Building Trust with Continuous Improvement and Systemic Safeguards

For any LLM application to succeed in a production environment, it must be built on a foundation of trust and reliability. This is where governance patterns become non-negotiable. Comprehensive monitoring, robust user feedback loops, and explicit safety guardrails are widely regarded as essential components of any enterprise-grade AI system. Monitoring provides visibility into how the system is performing by logging queries, responses, and internal processes, which enables debugging and compliance audits. Feedback loops create a mechanism for continuous improvement: user ratings and corrections identify where the system fails, and that signal drives changes to prompts, retrieval sources, or the model itself over time.
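Monitoring and feedback can share a single record per interaction. The sketch below is an in-memory stand-in for what would normally be a durable, access-controlled log; the `InteractionLog` class, its field names, and the sample queries are hypothetical.

```python
import time
import uuid
from collections import Counter


class InteractionLog:
    """In-memory stand-in for a durable audit log."""

    def __init__(self) -> None:
        self.records: dict[str, dict] = {}

    def record(self, query: str, response: str, latency_ms: float) -> str:
        """Store one interaction and return its id for later feedback."""
        interaction_id = str(uuid.uuid4())
        self.records[interaction_id] = {
            "timestamp": time.time(),
            "query": query,
            "response": response,
            "latency_ms": latency_ms,
            "feedback": None,  # filled in if the user rates the answer
        }
        return interaction_id

    def add_feedback(self, interaction_id: str, helpful: bool) -> None:
        """Attach a thumbs-up or thumbs-down rating to a logged interaction."""
        self.records[interaction_id]["feedback"] = "up" if helpful else "down"

    def feedback_summary(self) -> Counter:
        """Count ratings across all rated interactions."""
        return Counter(r["feedback"] for r in self.records.values() if r["feedback"])

    def needs_review(self) -> list[dict]:
        """Return interactions rated unhelpful, as candidates for follow-up."""
        return [r for r in self.records.values() if r["feedback"] == "down"]


log = InteractionLog()
first = log.record("What is the returns window?", "30 days from delivery.", 412.0)
second = log.record("Where is my order?", "I could not find that order.", 388.5)
log.add_feedback(first, helpful=True)
log.add_feedback(second, helpful=False)

print(log.feedback_summary())
print([r["query"] for r in log.needs_review()])
```

Keeping the rating on the same record as the query and response means a poor rating can be traced directly to the exact exchange that caused it.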

In parallel, implementing systemic safeguards is crucial for managing risk. A key debate centers on reactive versus proactive measures. Reactive approaches, such as filtering inputs and outputs for inappropriate content, are a baseline requirement. However, a growing number of experts advocate for proactive strategies, such as pre-emptive policy enforcement that prevents the model from engaging with sensitive topics or performing unauthorized actions. Ultimately, the long-term value of an LLM application is not derived from its initial capabilities but from the operational architecture that ensures it remains reliable, compliant, and trustworthy throughout its lifecycle.
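The two kinds of safeguard sit at different points in the request path, as the following sketch suggests. It assumes an upstream step has already labeled the request with a topic and an optional action; the blocked-topic list, the action allow-list, and the email-masking rule are hypothetical policies chosen for illustration, and `model` is any callable that maps a prompt to text.

```python
import re
from typing import Callable, Optional

BLOCKED_TOPICS = {"medical diagnosis", "legal advice"}  # hypothetical policy
ALLOWED_ACTIONS = {"lookup_order", "create_ticket"}     # hypothetical allow-list
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def check_policy(topic: str, action: Optional[str]) -> Optional[str]:
    """Proactive check: return a refusal reason, or None if the request may proceed."""
    if topic in BLOCKED_TOPICS:
        return f"topic '{topic}' is out of scope"
    if action is not None and action not in ALLOWED_ACTIONS:
        return f"action '{action}' is not authorized"
    return None


def redact_output(text: str) -> str:
    """Reactive filter: mask email addresses in generated text."""
    return EMAIL_PATTERN.sub("[redacted email]", text)


def guarded_call(
    topic: str, action: Optional[str], prompt: str, model: Callable[[str], str]
) -> str:
    """Enforce policy before the model runs, then filter what it returns."""
    reason = check_policy(topic, action)
    if reason is not None:
        # The model is never invoked for a disallowed request.
        return f"Request declined: {reason}"
    return redact_output(model(prompt))


calls = []


def fake_model(prompt: str) -> str:
    """Stub standing in for a real LLM call; records each invocation."""
    calls.append(prompt)
    return "Contact jane@example.com for help."


print(guarded_call("billing", "lookup_order", "Who handles refunds?", fake_model))
print(guarded_call("legal advice", None, "Can I sue?", fake_model))
```

The proactive check costs nothing when it refuses, since no model call is made, while the reactive filter catches problems that only appear in generated text.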

Assembling Your Architecture: A Practical Guide to Implementation

The architectural patterns driving modern AI are best understood not as isolated choices but as interconnected building blocks. A truly resilient and effective system rarely relies on a single pattern. Instead, leading architects strategically layer these components to create a comprehensive solution where the whole is greater than the sum of its parts. This modular approach allows for flexibility and scalability, enabling teams to start with a foundational design and add more sophisticated capabilities over time.

Actionable best practices have emerged from successful deployments across various industries. A common and highly effective combination involves using RAG for factual grounding, an agent-based framework for tool use, and a continuous feedback loop for system improvement. For example, a customer service bot might use RAG to pull accurate policy information from a knowledge base, leverage an agent to access a customer’s order history via an API, and prompt the user for a thumbs-up or thumbs-down rating to refine future interactions.
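The customer service example can be sketched as three small pieces composed in one request handler. All names and data here are hypothetical: `POLICIES` stands in for a knowledge base, `ORDERS` for an order API, and `echo_model` for a real LLM call. The topic-keyword lookup is a deliberately crude placeholder for retrieval.

```python
from typing import Callable

POLICIES = {"returns": "Items can be returned within 30 days of delivery."}
ORDERS = {"A100": "shipped"}
feedback: list[tuple[str, bool]] = []


def retrieve_policy(question: str) -> list[str]:
    """Grounding: return policy text whose topic keyword appears in the question."""
    return [text for topic, text in POLICIES.items() if topic in question.lower()]


def fetch_order_status(order_id: str) -> str:
    """Tool use: look up an order; a real system would call an API."""
    return ORDERS.get(order_id, "not found")


def answer(question: str, order_id: str, model: Callable[[str], str]) -> str:
    """Combine retrieved policy and live order data into one grounded prompt."""
    policy = " ".join(retrieve_policy(question)) or "none found"
    status = fetch_order_status(order_id)
    prompt = (
        "Use only the facts below.\n"
        f"Policy: {policy}\n"
        f"Order {order_id} status: {status}\n"
        f"Customer question: {question}"
    )
    return model(prompt)


def rate(question: str, helpful: bool) -> None:
    """Feedback loop: store the rating for later analysis."""
    feedback.append((question, helpful))


def echo_model(prompt: str) -> str:
    """Stub standing in for an LLM; it simply repeats the facts it was given."""
    return "Based on: " + prompt


question = "What is your returns policy for order A100?"
reply = answer(question, "A100", echo_model)
rate(question, helpful=True)
print(reply)
```

Each piece can be replaced independently, for example swapping the keyword lookup for a vector search, without changing how the handler composes them.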

This integrated approach provides a strategic framework for building value-driven AI systems. The key is to start by identifying the core business problem and then selecting the patterns that directly address its requirements for accuracy, action, and safety. By thoughtfully selecting, layering, and iterating on these architectural patterns, engineering teams can construct systems that are not only powerful but also observable, maintainable, and aligned with long-term business objectives.

Final Verdict: Your Architecture, Not Your Model, Defines Success

The central conclusion to draw from enterprise AI deployments is clear: success hinges on the strategic selection of an architectural framework, not merely the acquisition of the most powerful model. The raw capabilities of an LLM, while impressive, are insufficient without the systemic support of well-designed patterns for grounding, execution, and governance. It is the architecture that ultimately determines an application’s reliability, scalability, and trustworthiness in real-world scenarios.

As the field continues to advance, the core principles of modular, observable, and safety-aware design remain the cornerstones of effective AI implementation. The organizations best positioned to succeed are those that recognize this early and invest in robust architectural foundations, an approach that reduces operational risk and supports sustainable, long-term value from their AI initiatives. This focus on the system, rather than just the model, is the defining characteristic of mature and impactful AI adoption.