Businesses face immense pressure to deliver seamless user experiences even when systems fail unexpectedly, and that pressure is greatest in high-stakes scenarios. Consider an e-commerce platform that processes thousands of orders during a peak sale, only to have a single payment service outage cascade into a complete system breakdown, frustrating customers and costing millions in lost revenue. Maintaining reliability in distributed systems has become a critical concern for engineering teams across industries. Microservices offer scalability and flexibility, but they also introduce complex interdependencies that can amplify the impact of localized issues. This guide aims to equip readers with the knowledge and tools to build fault-tolerant microservices using Kubernetes and gRPC, so that systems remain robust under stress. Following the steps provided yields a practical framework for resilience that mitigates the risk of cascading failures.

The importance of fault tolerance in microservices cannot be overstated, as modern applications often span multiple services communicating over unpredictable networks, making them vulnerable to disruptions. A single point of failure in such an environment can disrupt entire workflows, affecting end users and business outcomes. This guide addresses these risks by combining the orchestration power of Kubernetes, the efficiency of gRPC for service communication, and proven resilience patterns like circuit breakers. Readers will gain actionable insights into designing systems that not only withstand failures but also recover gracefully, preserving operational continuity. The focus is on practical implementation, ensuring that theoretical concepts translate into real-world solutions for distributed architectures.

This guide walks through each stage of creating a resilient microservices setup, from defining communication protocols to deploying scalable services. A hands-on example involving an Order Service and a Payment Service shows how failures propagate and how to contain them. The step-by-step instructions are written so that even those new to these technologies can follow along and apply the principles to their own projects. Ultimately, this guide serves as a blueprint for engineering teams aiming to build systems that stay stable and keep user trust when dependencies fail.

Unveiling Resilience in Microservices: Why Fault Tolerance Matters

Microservices have emerged as a dominant architectural style for building scalable and maintainable applications, allowing teams to deploy components independently and adopt diverse technology stacks tailored to specific needs. This modular approach enables rapid development cycles and facilitates scaling individual services based on demand, rather than overhauling an entire monolithic system. However, the distributed nature of microservices introduces significant operational challenges, particularly when it comes to ensuring reliability across interconnected components. Network latency, partial failures, and complete service outages pose constant threats to system stability, often leading to degraded user experiences if not addressed proactively.

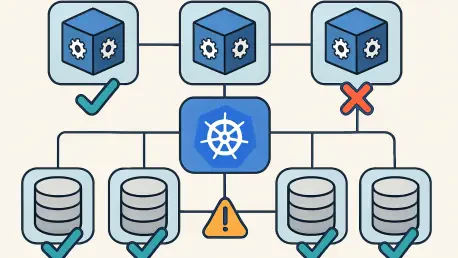

The risk of cascading failures in such environments looms large, where a single service disruption can ripple through dependent services, causing widespread downtime. This vulnerability stems from the intricate web of network calls that bind microservices together, making fault tolerance an essential consideration for any modern application. Without proper safeguards, a minor glitch in one area can escalate into a catastrophic outage, undermining customer trust and business objectives. Addressing this complexity requires a strategic blend of tools and patterns designed to isolate failures and maintain system integrity under duress.

Kubernetes, gRPC, and resilience mechanisms like the circuit breaker pattern form a formidable alliance in combating these challenges, offering a pathway to robust distributed systems. Kubernetes provides orchestration capabilities to manage service deployment and recovery, while gRPC ensures efficient, type-safe communication between services. Combined with circuit breakers, which prevent failure propagation by halting calls to struggling dependencies, these tools empower engineers to build architectures that endure real-world stresses. This guide offers practical takeaways on implementing these solutions, including a working Go-based example, to help readers craft systems capable of withstanding inevitable failures.

The Evolution of Distributed Systems: Understanding Failure Propagation

The transition from monolithic architectures to microservices over recent years marks a significant shift in software design, driven by the need for greater agility and scalability in application development. Monoliths, while simpler to manage in terms of failure scope, often became unwieldy as systems grew, hindering rapid updates and scalability. Microservices address these limitations by decomposing applications into smaller, autonomous units, each responsible for a specific domain. However, this decentralization introduces new risks, particularly due to the reliance on network communication, which can amplify the impact of failures across interconnected services.

A key concern in this paradigm is the cascading failure, where the downtime or poor performance of one service triggers a domino effect throughout the system. Consider an Order Service that depends on a Payment Service to process transactions: if the latter experiences delays or outages, the Order Service may hang or retry endlessly, consuming threads, connections, and memory until it fails itself. Such scenarios illustrate how network dependencies turn localized issues into system-wide disruptions, affecting end users and downstream services alike. This dynamic underscores the necessity of designing for resilience from the outset, rather than treating it as an afterthought.

To counter these risks, modern resilience patterns like circuit breakers have become indispensable, building on lessons learned from earlier distributed system challenges. These mechanisms monitor failure rates and temporarily block calls to unreliable services, preventing resource exhaustion in dependent components. Tools like Kubernetes and gRPC further enhance this approach by providing robust orchestration and efficient communication frameworks, aligning with current industry demands for reliable, scalable systems. Together, they offer a foundation for mitigating failure propagation, ensuring that distributed architectures remain stable even under adverse conditions.

Crafting a Fault-Tolerant Architecture: Step-by-Step Implementation

Creating a fault-tolerant microservices system requires a methodical approach, integrating Kubernetes for orchestration, gRPC for communication, and resilience patterns like circuit breakers for failure isolation. This section provides a detailed roadmap to construct such a system, focusing on practical steps that ensure reliability and scalability. From defining service contracts to deploying on a Kubernetes cluster, each phase is crafted to build a cohesive architecture capable of handling real-world challenges.

The process centers on a sample setup involving an Order Service and a Payment Service, with gRPC facilitating interactions and a circuit breaker safeguarding against dependency failures. By following these steps, readers can replicate the implementation in their own environments, gaining hands-on experience with fault-tolerant design. The guidance is structured to be clear and actionable, ensuring that even complex concepts are accessible to a broad audience of developers and architects.

Beyond the technical setup, emphasis is placed on testing and validation to confirm the system’s resilience under stress. Each step includes considerations for fine-tuning configurations and adapting to specific use cases, providing a comprehensive framework for building robust microservices. This approach not only addresses immediate failure risks but also lays the groundwork for long-term system stability in production environments.

Step 1: Defining gRPC Service Contracts for Seamless Communication

The foundation of efficient microservices communication lies in well-defined service contracts, and gRPC offers a powerful mechanism through Protocol Buffers to achieve this. Start by creating .proto files for each service, such as order.proto for the Order Service and payment.proto for the Payment Service, specifying the structure of requests and responses. For instance, order.proto might define a PlaceOrder method with fields like product ID and quantity, while payment.proto outlines a ProcessPayment method with amount and payment method details, ensuring clarity in data exchange.

These .proto files leverage gRPC’s strong typing and binary serialization, which minimize overhead and enhance performance compared to traditional text-based formats like JSON. Once defined, these contracts can be compiled into code for multiple languages, enabling seamless integration across diverse technology stacks. A snippet from order.proto might include a service definition with request and response messages, providing a concrete blueprint for how services interact over the network.

Key Consideration: Ensuring Type Safety in Contracts

Precision in defining data structures within .proto files is critical to prevent runtime errors that could disrupt service interactions. Explicit field types and stable field numbers keep client and server expectations aligned, reducing the likelihood of unexpected behavior. Note that proto3, the syntax most gRPC projects use, has no required fields: an unset scalar arrives as its zero value, so any field the business logic depends on should be validated by the receiving service. Regularly validating these contracts during development ensures consistency and reliability, forming a solid basis for fault-tolerant communication.
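Because zero values can slip through a contract unnoticed, a receiving service usually checks requests at its boundary. The following is a minimal sketch of that idea in plain Go. The structs are hand-written stand-ins for what would normally be generated from order.proto and payment.proto, and every field name, error value, and rule here is an assumption made for illustration.

```go
package main

import (
	"errors"
	"fmt"
)

// PlaceOrderRequest mirrors a hypothetical PlaceOrder request message.
// In a real project this type would be generated from order.proto.
type PlaceOrderRequest struct {
	ProductID string
	Quantity  int32
}

// ProcessPaymentRequest mirrors a hypothetical ProcessPayment request message.
type ProcessPaymentRequest struct {
	AmountCents   int64
	PaymentMethod string
}

// Illustrative validation errors (names are assumptions).
var (
	ErrMissingProduct = errors.New("product_id must not be empty")
	ErrBadQuantity    = errors.New("quantity must be positive")
	ErrBadAmount      = errors.New("amount must be positive")
	ErrMissingMethod  = errors.New("payment_method must not be empty")
)

// ValidateOrder rejects requests whose fields still hold zero values.
func ValidateOrder(r PlaceOrderRequest) error {
	if r.ProductID == "" {
		return ErrMissingProduct
	}
	if r.Quantity <= 0 {
		return ErrBadQuantity
	}
	return nil
}

// ValidatePayment applies the same boundary check to payment requests.
func ValidatePayment(r ProcessPaymentRequest) error {
	if r.AmountCents <= 0 {
		return ErrBadAmount
	}
	if r.PaymentMethod == "" {
		return ErrMissingMethod
	}
	return nil
}

func main() {
	fmt.Println(ValidateOrder(PlaceOrderRequest{ProductID: "sku-1", Quantity: 2}))
	fmt.Println(ValidateOrder(PlaceOrderRequest{Quantity: 2}))
}
```

Keeping amounts as integer cents is a design choice of this sketch, made to avoid floating-point rounding in money values.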

Step 2: Implementing the Circuit Breaker Pattern for Failure Isolation

To shield the system from cascading failures, wrap calls to the dependency in a circuit breaker. In a Go-based implementation, the gobreaker library is one option. Within the Order Service, configure the breaker to monitor calls to the Payment Service: set MaxRequests to 5 so that up to five trial requests pass in the half-open state, set Interval to 60 seconds so that the counts gathered in the closed state are cleared every minute, and supply a ReadyToTrip function that opens the breaker once at least 5 requests have been observed and 50% or more of them have failed. This setup ensures that persistent issues in the dependency are detected and isolated promptly.

The circuit breaker operates by transitioning between closed, open, and half-open states based on observed success and failure rates, effectively preventing resource exhaustion in the calling service. When the breaker opens, further calls to the problematic service are blocked, allowing the Order Service to fail fast and maintain responsiveness. This mechanism is crucial for preventing a struggling dependency from dragging down the entire system, preserving overall stability during outages.
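The state transitions described above can be sketched with the standard library alone. This is a simplified stand-in written for illustration, not the gobreaker implementation: the type, field, and error names are assumptions. It omits the periodic count reset (gobreaker's Interval) and does not cap concurrent half-open calls. It reuses the numbers from the text: a 50% failure ratio over at least 5 requests opens the breaker, and 5 consecutive half-open successes close it again.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// State is the breaker's current mode.
type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

// ErrOpen is returned when a call is rejected without being attempted.
var ErrOpen = errors.New("circuit breaker is open")

// ErrPaymentDown is a hypothetical dependency error used in the demo.
var ErrPaymentDown = errors.New("payment service unavailable")

// Breaker is a simplified, illustrative circuit breaker.
type Breaker struct {
	MinRequests int           // requests needed before the ratio is judged
	FailRatio   float64       // failure ratio that opens the breaker
	MaxRequests int           // consecutive half-open successes needed to close
	Timeout     time.Duration // how long to stay open before probing again

	mu        sync.Mutex
	state     State
	requests  int
	failures  int
	successes int
	openedAt  time.Time
}

// NewBreaker uses the thresholds from the text; the 60s timeout is an assumption.
func NewBreaker() *Breaker {
	return &Breaker{MinRequests: 5, FailRatio: 0.5, MaxRequests: 5, Timeout: 60 * time.Second}
}

// refresh moves Open to HalfOpen once the timeout has elapsed. Caller holds mu.
func (b *Breaker) refresh() {
	if b.state == Open && time.Since(b.openedAt) >= b.Timeout {
		b.state, b.successes = HalfOpen, 0
	}
}

// State reports the current state after applying any due transition.
func (b *Breaker) State() State {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.refresh()
	return b.state
}

// Execute runs call unless the breaker is open, then records the outcome.
func (b *Breaker) Execute(call func() error) error {
	b.mu.Lock()
	b.refresh()
	if b.state == Open {
		b.mu.Unlock()
		return ErrOpen // fail fast: the dependency is not contacted
	}
	b.mu.Unlock()

	err := call()

	b.mu.Lock()
	defer b.mu.Unlock()
	switch b.state {
	case Closed:
		b.requests++
		if err != nil {
			b.failures++
		}
		if b.requests >= b.MinRequests &&
			float64(b.failures)/float64(b.requests) >= b.FailRatio {
			b.trip()
		}
	case HalfOpen:
		if err != nil {
			b.trip() // any trial failure reopens the breaker
		} else if b.successes++; b.successes >= b.MaxRequests {
			b.state, b.requests, b.failures = Closed, 0, 0
		}
	}
	return err
}

// trip opens the breaker and resets the closed-state counts. Caller holds mu.
func (b *Breaker) trip() {
	b.state, b.openedAt = Open, time.Now()
	b.requests, b.failures = 0, 0
}

func main() {
	b := NewBreaker()
	for i := 0; i < 6; i++ {
		fmt.Println(b.Execute(func() error { return ErrPaymentDown }))
	}
}
```

In the demo loop, the first five calls reach the failing dependency; the sixth is rejected with ErrOpen because the fifth failure opened the breaker.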

Configuration Tip: Balancing Sensitivity and Stability

Fine-tuning circuit breaker settings is crucial to strike a balance between detecting genuine failures and avoiding premature tripping due to transient issues. Adjusting thresholds and intervals based on historical service behavior ensures that the breaker reacts appropriately without overreacting to temporary glitches. Testing these configurations under varied load conditions helps identify optimal values, ensuring the system remains both responsive and protected against sustained disruptions.

Step 3: Designing Service Flow with Fallback Mechanisms

Designing the Order Service flow involves orchestrating the sequence of operations from receiving client requests to interacting with dependencies like the Payment Service. Upon receiving an order, calculate the total price by multiplying quantity by unit price, then invoke the Payment Service within the circuit breaker’s Execute method to process the transaction. If the payment succeeds, confirm the order status; if it fails due to the breaker being open or other errors, resort to a fallback status such as “Payment Pending” to inform the client of the delay.

Fallback mechanisms play a crucial role in maintaining user experience during service disruptions, ensuring that the system does not simply return errors but provides meaningful responses. This approach allows the Order Service to remain operational, even when dependencies are unavailable, by deferring critical actions until recovery. The response to the client should reflect the current state, offering transparency about the order’s progress while avoiding complete transaction failures.
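The flow above can be sketched as a single function. This is an illustration under stated assumptions: the Executor interface is a simplified stand-in for a breaker (gobreaker's own Execute also returns a result value), PaymentFunc stands in for the gRPC ProcessPayment call, and all type names, status strings other than “Payment Pending”, and the integer-cents pricing are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
)

// Order holds the inputs needed to price an order (field names are assumptions).
type Order struct {
	ProductID      string
	Quantity       int64
	UnitPriceCents int64
}

// OrderResult is what the Order Service reports back to the client.
type OrderResult struct {
	TotalCents int64
	Status     string
}

const (
	StatusConfirmed      = "Confirmed"
	StatusPaymentPending = "Payment Pending"
)

// Executor is the single method this sketch needs from a circuit breaker.
type Executor interface {
	Execute(call func() error) error
}

// PaymentFunc stands in for the remote ProcessPayment call.
type PaymentFunc func(amountCents int64) error

// PlaceOrder prices the order, attempts payment through the breaker, and
// falls back to a pending status on any error, including an open breaker.
func PlaceOrder(cb Executor, pay PaymentFunc, o Order) OrderResult {
	total := o.Quantity * o.UnitPriceCents
	if err := cb.Execute(func() error { return pay(total) }); err != nil {
		return OrderResult{TotalCents: total, Status: StatusPaymentPending}
	}
	return OrderResult{TotalCents: total, Status: StatusConfirmed}
}

// passThrough behaves like a closed breaker: every call is forwarded.
type passThrough struct{}

func (passThrough) Execute(call func() error) error { return call() }

// ErrOpen is the rejection error used by alwaysOpen.
var ErrOpen = errors.New("circuit breaker is open")

// alwaysOpen behaves like an open breaker: calls are rejected unattempted.
type alwaysOpen struct{}

func (alwaysOpen) Execute(func() error) error { return ErrOpen }

func main() {
	o := Order{ProductID: "sku-1", Quantity: 3, UnitPriceCents: 1999}
	paid := func(int64) error { return nil }
	fmt.Printf("%+v\n", PlaceOrder(passThrough{}, paid, o))
	fmt.Printf("%+v\n", PlaceOrder(alwaysOpen{}, paid, o))
}
```

Treating every error as “Payment Pending” keeps the sketch short; a production service would likely distinguish retryable failures from permanent ones such as a declined card.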

Practical Insight: Crafting Effective Fallbacks

Developing effective fallback responses requires a clear understanding of user expectations and the business impact of partial failures. Craft these responses to provide interim solutions, such as queuing requests for later processing or offering alternative actions, so that work is deferred rather than lost. Testing fallback logic under simulated failure scenarios validates its effectiveness, confirming that users receive consistent feedback even when the system operates in a degraded state.

Step 4: Simulating Real-World Instability in Payment Service

To validate the fault tolerance of the system, introduce artificial instability in the Payment Service by adding a 200ms latency to each request and configuring a 25% random failure rate. This can be achieved in the service logic by inserting deliberate delays and using a random number generator to fail a subset of requests, mimicking real-world issues like network congestion or server overload. Such simulation ensures that the circuit breaker in the Order Service is tested under conditions that reflect production environments.
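A minimal sketch of this kind of fault injection follows. The FlakyPayment type and its fields are hypothetical; the random source and the sleep function are injectable so that the behaviour can be checked deterministically and without waiting for real delays.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
	"time"
)

// ErrInjected marks a failure produced on purpose by the simulation.
var ErrInjected = errors.New("injected payment failure")

// FlakyPayment wraps payment handling with artificial latency and failures.
type FlakyPayment struct {
	Latency     time.Duration       // delay added to every request
	FailureRate float64             // fraction of requests that fail, 0..1
	Rand        func() float64      // returns a value in [0, 1)
	Sleep       func(time.Duration) // replaceable so tests need not wait
}

// NewFlakyPayment uses the values from the text: 200ms latency, 25% failures.
func NewFlakyPayment() *FlakyPayment {
	return &FlakyPayment{
		Latency:     200 * time.Millisecond,
		FailureRate: 0.25,
		Rand:        rand.Float64,
		Sleep:       time.Sleep,
	}
}

// Process delays the request, then fails it with probability FailureRate.
func (p *FlakyPayment) Process(amountCents int64) error {
	p.Sleep(p.Latency)
	if p.Rand() < p.FailureRate {
		return ErrInjected
	}
	return nil
}

func main() {
	p := NewFlakyPayment()
	p.Sleep = func(time.Duration) {} // skip the real delay for this demo
	failures := 0
	for i := 0; i < 1000; i++ {
		if p.Process(100) != nil {
			failures++
		}
	}
	fmt.Printf("%d of 1000 calls failed\n", failures)
}
```

The printed count varies from run to run but should land near 250, since each call fails independently with probability 0.25.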

Observing the circuit breaker’s behavior during these simulated failures shows how effectively it isolates issues. When the failure rate crosses the configured threshold, the breaker should open, preventing further calls to the Payment Service and triggering fallback logic in the Order Service. Note that an average injected failure rate of 25% sits below a 50% threshold, so the breaker opens only when failures happen to cluster within a measurement window; raise the injected rate temporarily to observe a sustained open state. This controlled testing environment helps verify that protective mechanisms function as intended, safeguarding system stability against dependency failures.

Testing Note: Mimicking Production Failures

Realistic failure simulations are indispensable for assessing fault tolerance, as they replicate the unpredictable nature of production incidents. Vary the intensity and frequency of induced failures to cover a spectrum of scenarios, from intermittent delays to sustained outages. Documenting the system’s response during these tests informs future optimizations, ensuring that resilience strategies are robust enough for the conditions encountered in live deployments.

Step 5: Deploying Services on Kubernetes for Scalability

Deploying the Order and Payment Services on Kubernetes ensures scalability and operational resilience through declarative configurations in YAML manifests. Define a Deployment for each service, specifying replicas (e.g., 2 for the Payment Service), container images, and port mappings; a payment-deployment.yaml manifest, for example, would set a label selector and expose TCP port 50051. Additionally, configure Kubernetes Services to enable discovery and load balancing, ensuring seamless communication between microservices within the cluster.

Kubernetes enhances fault tolerance through built-in features like health checks (liveness and readiness probes), which restart unhealthy containers and keep traffic away from pods that are not ready, and rolling updates, which minimize downtime during deployments. These capabilities ensure that services remain available even when individual instances fail, maintaining system uptime. Properly annotated manifests also facilitate monitoring and logging, providing visibility into service health and performance metrics for ongoing management.

Deployment Best Practice: Leveraging Auto-Scaling

Harnessing Kubernetes’ auto-scaling capabilities further bolsters resilience by dynamically adjusting the number of pod replicas based on CPU or memory usage. Configure Horizontal Pod Autoscalers to scale services in response to demand, ensuring resource efficiency while preventing overload during traffic spikes. Regularly reviewing scaling policies and resource limits optimizes performance, aligning the system’s capacity with real-time operational needs for sustained reliability.
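To make the scaling behaviour concrete, the Kubernetes documentation describes the autoscaler’s core calculation as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below reproduces only that arithmetic plus clamping to the configured bounds; the function and parameter names are assumptions, and the real controller applies further rules (a tolerance band, stabilization windows, handling of pods that are not ready) that are omitted here.

```go
package main

import (
	"fmt"
	"math"
)

// DesiredReplicas applies the documented Horizontal Pod Autoscaler ratio
// formula and clamps the result to [minReplicas, maxReplicas].
// currentMetric and targetMetric must use the same unit, for example
// average CPU utilization in percent.
func DesiredReplicas(current int, currentMetric, targetMetric float64, minReplicas, maxReplicas int) int {
	ratio := currentMetric / targetMetric
	desired := int(math.Ceil(float64(current) * ratio))
	if desired < minReplicas {
		desired = minReplicas
	}
	if desired > maxReplicas {
		desired = maxReplicas
	}
	return desired
}

func main() {
	// Two Payment Service pods averaging 90% CPU against a 60% target.
	fmt.Println(DesiredReplicas(2, 90, 60, 2, 10))
}
```

With two replicas at 90% utilization and a 60% target, the ratio is 1.5, so the sketch asks for three replicas.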

Summarizing the Resilience Toolkit: Core Strategies at a Glance

The journey to fault-tolerant microservices hinges on a synergistic combination of tools and strategies, each addressing distinct aspects of distributed system challenges. gRPC contracts establish type-safe, low-latency communication, reducing serialization overhead and ensuring reliable data exchange between services. This foundation is critical for maintaining performance and consistency across network boundaries in complex architectures.

Circuit breakers serve as a vital defense mechanism, isolating failures by blocking calls to unreliable dependencies, thus preventing cascading issues that could destabilize the system. Kubernetes orchestration automates scaling, recovery, and deployment, providing self-healing capabilities that keep services operational under varying conditions. Additionally, failure simulation validates these resilience measures by mimicking real-world instability, confirming the system’s ability to withstand stress.

Together, these components form a robust toolkit for engineering teams, enabling the creation of architectures that prioritize stability and user experience. The interplay between efficient communication, failure isolation, and automated management ensures that microservices can handle disruptions gracefully. This summary encapsulates the core strategies, offering a clear reference for implementing fault tolerance in distributed environments.

Beyond the Basics: Adapting Fault Tolerance to Future Challenges

As distributed systems continue to evolve, the principles of fault tolerance discussed here remain adaptable to emerging industry trends and technologies. Service meshes, for instance, extend resilience by incorporating advanced retry policies, timeouts, and traffic management features, complementing the circuit breaker pattern. These tools provide fine-grained control over service interactions, addressing complexities in large-scale dependency graphs that are increasingly common in modern architectures.

Monitoring and observability solutions like Prometheus and Grafana further enhance fault tolerance by offering real-time insights into system performance and failure patterns. Integrating these platforms enables proactive identification of potential issues, allowing teams to refine resilience strategies based on actionable data. Such capabilities are essential for maintaining reliability as applications grow in scope and user demand, ensuring that systems remain responsive to dynamic workloads.

Looking ahead, challenges like managing intricate dependency networks and adopting new resilience patterns will shape the landscape of distributed systems. Staying abreast of advancements in container orchestration and communication protocols will be crucial for tackling these complexities. By building on the foundational approaches outlined, engineering teams can prepare for future scalability needs, ensuring that fault tolerance evolves in tandem with technological progress.

Forging Ahead: Building Resilient Systems with Confidence

The structured steps in this guide provide a clear path to mitigating the risk of cascading failures using Kubernetes, gRPC, and circuit breakers. Each phase, from defining service contracts to deploying on a scalable cluster, contributes to an architecture designed to withstand the simulated disruptions. The hands-on implementation shows how these tools work together to preserve system stability, offering valuable lessons in resilience design.

Moving forward, engineering teams can extend their systems with additional patterns such as rate limiting to manage traffic surges and distributed tracing to pinpoint bottlenecks across services. Integrating these mechanisms strengthens the ability to handle production uncertainties and helps shield user experiences from underlying issues. Experimenting with these strategies in varied scenarios further refines the approach to fault tolerance.

Ultimately, resilience must be embedded from the inception of a microservices project. By continuing to iterate on these foundations and adopting complementary tools like service meshes, teams position themselves to tackle unforeseen challenges with agility. A commitment to building architectures that hold up under adversity paves the way for sustained reliability and trust in distributed systems.