In the high-stakes world of corporate marketing, maintaining brand consistency is not just a preference; it is a fundamental requirement for building trust and recognition, yet the process of enforcing these standards has historically been a manual, error-prone, and resource-intensive ordeal. Marketing teams dedicate countless hours to meticulously reviewing PDFs, presentations, and other collateral, checking for minute details like logo padding, correct font usage, and precise color gradients against a comprehensive style guide. This bottleneck slows down campaign launches and introduces the risk of human error, potentially diluting brand identity. For developers, this presents a classic automation challenge, and with the recent advancements in multimodal large language models (LLMs) capable of processing both text and images, a powerful new solution has emerged. It is now possible to construct intelligent pipelines that can visually “see” a document, “read” a brand rulebook, and perform a comprehensive audit automatically, transforming a tedious task into a streamlined, efficient process. This article outlines a technical architecture for building such a brand compliance tool, exploring how the fusion of document parsing and advanced AI can revolutionize the design review workflow.

1. The Architectural Blueprint for Automation

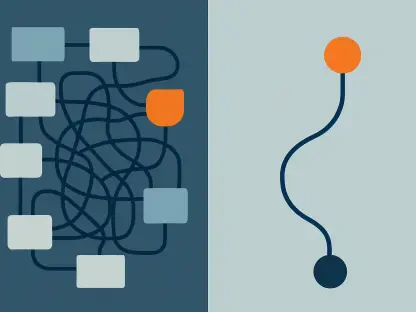

The primary obstacle in automating brand review is that a document is not merely a collection of text but a spatial arrangement of visual elements, including logos, images, and typography. A simple Optical Character Recognition (OCR) scan is insufficient because it fails to capture the visual context: where an element is located, how large it is, and how it relates to other elements on the page. To overcome this, a successful automation pattern relies on a two-stage pipeline: Detection → Verification. The first stage identifies and categorizes every relevant design element on the page; the second, run as a separate step, evaluates each element against the specific rules in the brand guidelines. Separating the two concerns makes each stage easier to test and tune independently, which helps both accuracy and scalability on complex documents. The resulting workflow mirrors the human review process but executes it with the speed and consistency of a machine.
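
The Detection → Verification split can be sketched as two functions joined by a plain data structure. This is a minimal illustration rather than a definitive design: the names `Element`, `Finding`, `audit`, `fake_detect`, and `fake_verify` are hypothetical, and the stub stages stand in for the LLM calls described in the following sections.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Element:
    """One design element found on a page (hypothetical structure)."""
    id: str
    category: str                             # e.g. "logo", "typography", "photography"
    bbox: Tuple[float, float, float, float]   # x0, y0, x1, y1


@dataclass
class Finding:
    """Result of checking one element against the brand rules."""
    element_id: str
    compliant: bool
    reason: str


def audit(page, detect: Callable, verify: Callable) -> List[Finding]:
    """Run Detection, then Verification, keeping the two stages independent."""
    elements = detect(page)                  # stage 1: what is on the page?
    return [verify(e) for e in elements]     # stage 2: does each item follow the rules?


# Stub stages standing in for the model calls, so the flow runs end to end.
def fake_detect(page) -> List[Element]:
    return [Element("e1", "logo", (40, 40, 200, 90))]


def fake_verify(element: Element) -> Finding:
    ok = element.category != "logo"  # pretend every logo fails, for illustration only
    return Finding(element.id, ok, "stub rule check")


findings = audit("page-1", fake_detect, fake_verify)
```

Because `audit` only depends on the two callables, either stage can be swapped (for example, a different model for detection) without touching the other.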

The foundation of this automated system is a technology stack chosen for rapid development and straightforward AI integration. For the frontend, a framework like Streamlit is well suited to quickly building internal tools and dashboards that let marketing teams upload documents and review results. The backend logic is best handled by Python, given its extensive ecosystem of libraries for document processing and machine learning. At the core of the system is a multimodal LLM, such as Gemini 1.5 Pro or GPT-4o, chosen for its large context window and its ability to process image inputs. These models drive both the detection and verification stages. Finally, reliability depends on strictly enforcing JSON as the data format for all LLM outputs. Defining a rigid schema, and rejecting any response that does not match it, keeps the model from returning inconsistent or “hallucinated” structures and makes the pipeline’s output predictable and easy to parse in subsequent steps.
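
One way to enforce the JSON contract is to validate every model response before it enters the rest of the pipeline. The sketch below uses only the standard library; the field names (`elements`, `category`, `bbox`) and the category list are assumptions chosen for illustration, not a schema taken from any particular model API.

```python
import json

ALLOWED_CATEGORIES = {"logo", "typography", "photography"}  # assumed buckets


def parse_elements(raw: str) -> list:
    """Parse model output and reject anything that does not match the expected shape."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(data, dict) or not isinstance(data.get("elements"), list):
        raise ValueError("expected an object with an 'elements' list")
    for item in data["elements"]:
        if not isinstance(item, dict):
            raise ValueError("each element must be an object")
        category = item.get("category")
        if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
            raise ValueError(f"unknown category: {category!r}")
        bbox = item.get("bbox")
        if not (isinstance(bbox, list) and len(bbox) == 4
                and all(isinstance(v, (int, float)) for v in bbox)):
            raise ValueError("bbox must be a list of four numbers")
    return data["elements"]


sample = '{"elements": [{"category": "logo", "bbox": [40, 40, 200, 90]}]}'
elements = parse_elements(sample)
```

A response that fails validation can be retried or surfaced to the user instead of silently corrupting later steps.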

2. Stage One: Identifying Design Elements

Before any compliance checks can occur, the system must first accurately locate and identify the design elements within a document. While traditional object detection models like YOLO are fast, they typically require extensive training on large, labeled datasets, which is impractical for bespoke elements like a specific company logo. A multimodal LLM with vision capabilities offers a more agile solution, enabling zero-shot or few-shot detection through sophisticated prompt engineering. This means the model can identify elements without prior training, simply by being given instructions and examples within the prompt itself. This stage begins with a critical pre-processing step. If the input is a PDF, it is not treated as a simple image. Instead, the page is rasterized into a high-resolution image for the LLM’s visual analysis, while simultaneously, vector metadata such as bounding boxes, text content, and color codes are extracted using libraries like PyMuPDF. This dual approach provides the LLM with a richer, more structured understanding of the document’s contents.
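
The pre-processing step might look like the sketch below, which assumes PyMuPDF is installed (imported as `fitz`). The function names `preprocess_page` and `int_to_hex`, the 200 dpi default, and the shape of the returned span dictionaries are illustrative choices, not something prescribed by the library.

```python
def int_to_hex(color: int) -> str:
    """Convert a packed sRGB integer (0xRRGGBB), as PyMuPDF reports span colors, to '#RRGGBB'."""
    return f"#{color & 0xFFFFFF:06X}"


def preprocess_page(pdf_path: str, page_number: int = 0, dpi: int = 200):
    """Return (png_bytes, spans): a raster for the model plus text metadata from the PDF."""
    import fitz  # PyMuPDF; imported here so int_to_hex works without it installed

    with fitz.open(pdf_path) as doc:
        page = doc[page_number]
        # Raster image of the page for the LLM's visual analysis.
        png_bytes = page.get_pixmap(dpi=dpi).tobytes("png")
        spans = []
        for block in page.get_text("dict")["blocks"]:
            if block["type"] != 0:   # 0 = text block, 1 = image block
                continue
            for line in block["lines"]:
                for span in line["spans"]:
                    spans.append({
                        "text": span["text"],
                        "font": span["font"],
                        "size": span["size"],
                        "color": int_to_hex(span["color"]),
                        "bbox": [round(v, 1) for v in span["bbox"]],
                    })
    return png_bytes, spans
```

Note that the extracted bounding boxes are in PDF points, while the raster is in pixels; at the assumed 200 dpi the scale factor between the two is 200 / 72.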

With the pre-processed data in hand, the system then constructs a detailed detection prompt. This involves feeding the LLM the rasterized page image along with a carefully designed prompt that instructs it to categorize all visual components into specific buckets, such as “logo,” “typography,” or “photography.” To ensure the output is structured and reliable, a strict JSON schema is provided within the prompt itself, defining the exact format the LLM must follow for its response. This prevents the model from deviating or providing unstructured text that would be difficult to parse. A key strategy for enhancing spatial accuracy is to pass the pre-calculated bounding boxes extracted during the pre-processing phase directly into the prompt’s context. By giving the LLM the precise coordinates of text and vector shapes, its ability to correctly locate and classify visual elements is significantly improved, forming a solid foundation for the subsequent verification stage.
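
A detection prompt along these lines could be assembled as plain text. The wording of the instructions, the schema fields, and the helper name `build_detection_prompt` are assumptions for illustration; the page image itself would be attached through whichever model SDK is in use, which is not shown here.

```python
import json

# Assumed response shape; adjust the categories to match the brand book.
DETECTION_SCHEMA = {
    "elements": [
        {
            "id": "string",
            "category": "logo | typography | photography",
            "bbox": ["x0", "y0", "x1", "y1"],
            "description": "string",
        }
    ]
}


def build_detection_prompt(spans: list) -> str:
    """Combine instructions, the output schema, and known text boxes into one prompt."""
    known_boxes = [{"text": s["text"], "bbox": s["bbox"]} for s in spans]
    return "\n".join([
        "You are auditing a single page of marketing collateral.",
        "Classify every visual element in the attached page image.",
        "Respond with JSON only, matching this schema exactly:",
        json.dumps(DETECTION_SCHEMA, indent=2),
        "Text boxes already extracted from the PDF (coordinates in points):",
        json.dumps(known_boxes),
        "Reuse these coordinates for text elements instead of estimating them.",
    ])


prompt = build_detection_prompt(
    [{"text": "Spring Sale", "bbox": [72.0, 90.5, 310.2, 130.0]}]
)
```

Passing the extracted boxes as text means the model only has to estimate coordinates for elements the PDF metadata does not already describe, such as raster logos or photographs.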

3. Stage Two: Verifying Compliance

Once the system has successfully identified and cataloged the design elements into a structured JSON list, the verification stage begins. In this phase, the user can select specific elements—such as a company logo or a headline—to be checked against the brand’s official guidelines. This is where the concept of retrieval-augmented generation (RAG) is applied to visual rules, transforming the LLM from a simple identifier into a sophisticated compliance auditor. It is not enough to ask the model a generic question like, “Is this logo compliant?” The system must provide the necessary context by retrieving the specific, relevant rules from the brand guideline document. For instance, if a logo is selected for verification, the system automatically pulls corresponding rules from the brand book, such as “The logo must have 20px of clear space padding on all sides” or “The logo must not be used on a red background.” These rules are then injected directly into the prompt sent to the LLM.
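
The retrieval step can be sketched with a simple category lookup. This is deliberately the simplest possible stand-in: a production system might use embedding search over the brand book instead. The `BRAND_RULES` store and both helper names are hypothetical; the two logo rules are the examples quoted above, and the typography rule is a placeholder.

```python
# Hypothetical rule store; in practice these would be extracted from the brand book.
BRAND_RULES = [
    {"applies_to": "logo",
     "text": "The logo must have 20px of clear space padding on all sides."},
    {"applies_to": "logo",
     "text": "The logo must not be used on a red background."},
    {"applies_to": "typography",
     "text": "Headlines must use the primary brand typeface."},  # placeholder rule
]


def retrieve_rules(category: str, rules=BRAND_RULES) -> list:
    """Return the text of every rule that applies to the given element category."""
    return [r["text"] for r in rules if r["applies_to"] == category]


def build_rule_context(element: dict) -> str:
    """Format the selected element and its applicable rules for injection into a prompt."""
    rules = retrieve_rules(element["category"])
    lines = [f"Element under review: {element['category']} at {element['bbox']}"]
    lines.append("Applicable brand rules:")
    lines += [f"{i}. {rule}" for i, rule in enumerate(rules, start=1)]
    return "\n".join(lines)


context = build_rule_context({"category": "logo", "bbox": [40, 40, 200, 90]})
```

Only the rules relevant to the selected element reach the model, which keeps the prompt short and the verdict focused.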

To handle the inherent subjectivity and ambiguity often found in design rules, the verification prompt must be engineered to encourage chain-of-thought reasoning from the LLM. Rather than asking for a simple “yes” or “no” verdict, the prompt instructs the model to first list its observations, explain its reasoning step-by-step, and only then deliver a final compliance judgment. For example, the model might output its observations: “The logo is placed on a background with HEX code #FF0000. It has 15px of padding on the top and bottom, and 25px on the left and right.” Following this, it would apply the rules: “The brand guidelines state the logo should not be used on red backgrounds and requires a minimum of 20px padding on all sides.” Finally, it would provide a clear verdict: “Compliance: False. Reason: The background color violates the color rule, and the vertical padding is insufficient.” This methodical approach not only improves the accuracy of the model’s assessment but also makes its decisions transparent and auditable for the end-user.
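
The observe, reason, then decide structure can be encoded in the prompt and checked when the response comes back. The sketch below is one possible shape, not a fixed format: the field names (`observations`, `reasoning`, `compliant`, `reason`) and the helper names are assumptions, and the sample response simply restates the logo example above.

```python
import json

VERIFY_INSTRUCTIONS = (
    "Work in three steps and answer with JSON only, using the keys\n"
    '"observations", "reasoning", "compliant", and "reason".\n'
    "1. observations: list what you measure or see for this element.\n"
    "2. reasoning: explain how each rule applies to those observations.\n"
    "3. compliant (true or false) and reason: the final verdict, decided last."
)

REQUIRED_FIELDS = {"observations", "reasoning", "compliant", "reason"}


def build_verification_prompt(element: dict, rules: list) -> str:
    """Assemble the step-by-step instructions, the element, and its rules."""
    rule_lines = "\n".join(f"- {r}" for r in rules)
    return (f"{VERIFY_INSTRUCTIONS}\n\nElement:\n{json.dumps(element)}"
            f"\n\nRules:\n{rule_lines}")


def parse_verdict(raw: str) -> dict:
    """Parse the model's verdict and insist on the full reasoning trail."""
    verdict = json.loads(raw)
    if not isinstance(verdict, dict):
        raise ValueError("verdict must be a JSON object")
    missing = REQUIRED_FIELDS - verdict.keys()
    if missing:
        raise ValueError(f"verdict is missing fields: {sorted(missing)}")
    if not isinstance(verdict["compliant"], bool):
        raise ValueError("'compliant' must be true or false")
    return verdict


sample_response = json.dumps({
    "observations": ["Background is #FF0000", "Padding: 15px top/bottom, 25px left/right"],
    "reasoning": ["Red backgrounds are not allowed", "15px is below the 20px minimum"],
    "compliant": False,
    "reason": "The background color violates the color rule, and the vertical padding is insufficient.",
})
verdict = parse_verdict(sample_response)
```

Rejecting verdicts that lack observations or reasoning keeps every decision auditable, since a bare true or false never reaches the user.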

4. Navigating Real-World Limitations

Developing a brand compliance tool reveals important limitations in current AI capabilities that must be addressed with pragmatic engineering solutions. One of the most significant challenges is the “color perception gap.” While multimodal LLMs excel at understanding text and spatial layouts, they often struggle with precise color analysis. In practice, a model might misidentify a specific shade of navy blue as black or fail to accurately interpret the angle and composition of a color gradient. Early testing shows that accuracy for color and gradient verification can be significantly lower (e.g., 53%) compared to typography or logo placement checks (90%+). The most effective solution is to not rely on the LLM’s “eyes” for color. Instead, use Python libraries like Pillow or OpenCV to programmatically sample pixels from the element’s bounding box, extract their exact HEX or RGB color codes, and pass this structured data to the LLM as text metadata, allowing it to reason based on precise data rather than visual interpretation.
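
Programmatic color sampling can be sketched with Pillow as follows. The helper names and the choice to report the three most frequent colors are assumptions for illustration; the bounding box is expected in pixel coordinates of the rasterized page.

```python
def rgb_to_hex(rgb) -> str:
    """Format an (r, g, b) tuple as an uppercase '#RRGGBB' string."""
    return "#{:02X}{:02X}{:02X}".format(*rgb)


def dominant_hex(color_counts, top_n: int = 3) -> list:
    """Rank (count, (r, g, b)) pairs by frequency and return the top colors as HEX."""
    ranked = sorted(color_counts, key=lambda pair: pair[0], reverse=True)
    return [rgb_to_hex(rgb) for _, rgb in ranked[:top_n]]


def sample_region(image_path: str, bbox, top_n: int = 3) -> list:
    """Crop the bounding box (left, upper, right, lower) and report its dominant colors."""
    from PIL import Image  # Pillow; imported lazily so the helpers above need no dependency

    with Image.open(image_path) as img:
        region = img.convert("RGB").crop(bbox)
        # getcolors returns (count, color) pairs; the limit is set to the pixel
        # count so it never returns None for having too many distinct colors.
        counts = region.getcolors(maxcolors=max(1, region.width * region.height))
    return dominant_hex(counts, top_n)


# Navy (#000080) and black are distinct here, even if they look alike to a model.
example = dominant_hex([(5, (0, 0, 128)), (20, (255, 255, 255)), (1, (0, 0, 0))], top_n=2)
```

The resulting HEX strings can be added to the element's metadata in the verification prompt, so the model compares exact values against the brand palette instead of judging the shade visually.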

Another critical factor influencing the system’s accuracy is the format of the input file, with a notable performance difference between PDF documents and raw image files like JPGs. Processing PDFs consistently yields higher accuracy (often above 92%) compared to images (around 88%). The reason lies in the underlying structure of the files. A PDF exported from a design tool typically carries rich structural metadata, including font names, vector paths, and embedded color profiles. This information gives the system a ground truth that can be extracted and fed to the LLM, greatly improving its inferential accuracy. In contrast, a flat image file like a JPG contains only pixel data, forcing the model to rely entirely on its own visual interpretation to identify fonts, shapes, and colors; a PDF that merely wraps a scanned image has the same limitation. The best practice, therefore, is to design the system to prefer PDF uploads. If a user uploads a JPG or PNG, the interface should display a warning that the results may be less accurate because of the inherent limitations of processing non-vector formats.
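
The upload check can be a small pure function that the interface calls before processing. The function name, the accepted extensions, and the warning text below are illustrative assumptions.

```python
from pathlib import Path
from typing import Optional

PREFERRED_FORMATS = {".pdf"}
RASTER_FORMATS = {".jpg", ".jpeg", ".png"}


def upload_warning(filename: str) -> Optional[str]:
    """Return a warning for raster uploads, None for PDFs, and reject anything else."""
    suffix = Path(filename).suffix.lower()
    if suffix in PREFERRED_FORMATS:
        return None
    if suffix in RASTER_FORMATS:
        return ("Image uploads carry no font or vector metadata, so results may be "
                "less accurate. Upload the source PDF if it is available.")
    raise ValueError(f"unsupported file type: {filename!r}")


message = upload_warning("flyer.jpg")
```

In a Streamlit frontend, a non-empty result could be passed to `st.warning` next to the upload widget.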

A New Paradigm for Content Governance

The development of an automated brand compliance tool demonstrates a significant evolution in the application of artificial intelligence, shifting its role from a generator of content to a governor of it. By combining the reasoning capabilities of multimodal large language models with the deterministic logic of code-based pre-processing and data extraction, developers have built tools that reduced manual review time by over 50%. The key to this success is not blind trust in the model’s output but careful guidance of its analytical process through structured prompts, strict JSON schemas, and programmatically verified data. This hybrid approach, which draws on the strengths of both AI and traditional software engineering, establishes an effective pattern for automating complex, context-dependent enterprise workflows that previously resisted automation.