Today we’re joined by Vijay Raina, a specialist in enterprise SaaS technology whose work sits at the intersection of software architecture and machine learning. He’s here to pull back the curtain on Question Assistant, a feature designed to improve the quality of user contributions on Stack Overflow. This isn’t just a story about a successful product launch; it’s a candid look at the research, the setbacks, and the surprising pivots required to ship a truly effective AI system.

This conversation explores the journey of building a hybrid AI, starting with an initial concept that didn’t pan out and leading to a more nuanced solution. We’ll discuss how the team blended classic machine learning techniques with the power of modern generative models to create actionable feedback for users. Vijay will also share insights from the A/B testing process, where an unexpected discovery completely reshaped the project’s definition of success, and detail the production architecture that brings this all together in real time.

Your initial approach using only an LLM for quality ratings failed, partly due to a low Krippendorff’s alpha score on your survey data. Could you share the story behind pivoting from that idea to the successful hybrid model combining logistic regression indicators with Gemini for synthesis?

Absolutely. We started with what seemed like a straightforward idea: have a powerful LLM rate question quality. We were optimistic, but the reality was messy. We defined three core categories (context, outcome, and formatting) and asked the model to score questions. The feedback it generated was generic, often repeating the same advice about library versions across all categories, and, frustratingly, it wouldn’t change even after a user revised their draft. To ground the model, we tried creating a “ground truth” dataset by surveying 152 of our experienced reviewers. The results were a real wake-up call: the Krippendorff’s alpha score was so low that it told us even our human experts don’t agree on a simple numerical quality score. That was the moment we realized a number like “3 out of 5” is fundamentally not actionable. We had to pivot from trying to judge quality to providing specific, helpful guidance, which led us down the path of combining the strengths of different models.
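For readers unfamiliar with the statistic, Krippendorff's alpha compares the disagreement observed among raters with the disagreement expected by chance: 1 means perfect agreement, 0 means agreement no better than chance. The sketch below is a minimal, standard-library implementation of the coincidence-matrix formulation. The function names and the sample ratings are invented for illustration, and the interview does not say which distance metric the team used; the interval (squared-difference) metric is an assumption here.

```python
from collections import Counter
from itertools import permutations


def nominal(a, b):
    """Nominal distance: any two different labels are equally far apart."""
    return 0.0 if a == b else 1.0


def interval(a, b):
    """Interval distance: squared difference between numeric scores."""
    return float((a - b) ** 2)


def krippendorff_alpha(units, delta=interval):
    """Return alpha for `units`, a list of per-question rating lists.

    Each inner list holds the scores different reviewers gave one question.
    Questions with fewer than two scores cannot be paired and are skipped.
    """
    pairable = [u for u in units if len(u) >= 2]
    coincidences = Counter()
    for ratings in pairable:
        weight = 1.0 / (len(ratings) - 1)
        # Every ordered pair of scores from two different reviewers.
        for a, b in permutations(ratings, 2):
            coincidences[(a, b)] += weight

    totals = Counter()
    for (a, _), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())
    if n <= 1:
        raise ValueError("need at least one question with two or more ratings")

    observed = sum(w * delta(a, b) for (a, b), w in coincidences.items()) / n
    expected = sum(
        totals[a] * totals[b] * delta(a, b) for a in totals for b in totals
    ) / (n * (n - 1))
    if expected == 0:
        raise ValueError("alpha is undefined when every rating is identical")
    return 1.0 - observed / expected


# Made-up 1-to-5 scores from three hypothetical reviewers on four questions.
ratings_per_question = [[1, 3, 5], [2, 4, 4], [5, 2, 3], [3, 3, 1]]
print(round(krippendorff_alpha(ratings_per_question), 3))
```

A value near zero or below, as scattered scores like these tend to produce, is the kind of result that signals the raters are not measuring the same thing.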

You built individual logistic regression models for four “feedback indicators” like ‘Missing MRE’. Can you walk me through the data and training process? Specifically, how did you use past reviewer comments and TF-IDF vectorization to create reliable binary classifiers for each indicator?

Once we abandoned the idea of a single quality score, we looked at what our reviewers were actually doing. We realized they were leaving the same types of comments over and over. By clustering thousands of these real-world reviewer comments from Staging Ground, we identified four common, actionable themes: missing problem definition, lack of attempt details, no error logs, and the classic missing minimal reproducible example, or MRE. This gave us our four “feedback indicators.” We then tapped into our historical data—all those past reviewer comments and question close reasons—which was a goldmine. We treated this as a massive labeled dataset. For each indicator, we vectorized the question text using TF-IDF, a classic but incredibly effective technique for turning words into numerical features. We then fed these features into simple logistic regression models, training each one to answer a single, binary question: “Does this specific question need this specific type of feedback?” The result was four highly reliable, specialized classifiers instead of one confused generalist model.
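To make the training step concrete, here is a minimal sketch of one indicator classifier using only the Python standard library: it builds TF-IDF vectors and fits a logistic regression with plain stochastic gradient descent. Everything specific in it is an assumption for illustration: the four toy questions, the labels, the whitespace tokenizer, and the hyperparameters. In practice a library such as scikit-learn (`TfidfVectorizer` plus `LogisticRegression`) would do this work, and the same recipe would be repeated once per indicator.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def fit_idf(docs):
    """Learn a smoothed inverse document frequency for every training token."""
    n = len(docs)
    doc_freq = Counter(tok for doc in docs for tok in set(tokenize(doc)))
    return {tok: math.log((1 + n) / (1 + df)) + 1.0 for tok, df in doc_freq.items()}


def tfidf(text, idf):
    """Turn text into a sparse, L2-normalized TF-IDF vector (token -> weight)."""
    counts = Counter(tok for tok in tokenize(text) if tok in idf)
    vec = {tok: count * idf[tok] for tok, count in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
    return {tok: v / norm for tok, v in vec.items()}


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def score(vec, weights, bias):
    return sigmoid(bias + sum(weights.get(tok, 0.0) * v for tok, v in vec.items()))


def train_logreg(vectors, labels, epochs=300, lr=0.5):
    """Fit a binary logistic regression with stochastic gradient descent."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for vec, label in zip(vectors, labels):
            error = score(vec, weights, bias) - label  # gradient of the log loss
            bias -= lr * error
            for tok, v in vec.items():
                weights[tok] = weights.get(tok, 0.0) - lr * error * v
    return weights, bias


# Invented examples: label 1 means a reviewer asked for error logs.
questions = [
    "my code does not work please help",
    "it crashes and i do not know why",
    "here is the traceback and the exact error message",
    "full error log attached below with stack trace",
]
needs_error_logs = [1, 1, 0, 0]

idf = fit_idf(questions)
weights, bias = train_logreg([tfidf(q, idf) for q in questions], needs_error_logs)


def predict_proba(text):
    return score(tfidf(text, idf), weights, bias)


print(predict_proba("please help my code does not work") > 0.5)
```

Each classifier answers only its own yes-or-no question, which is what lets the four models be trained, evaluated, and retrained independently.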

The first A/B test in Staging Ground didn’t improve your primary metrics but revealed a +12% increase in question “success rates.” Describe the moment your team realized this. How did this unexpected finding change your perspective on the project’s value and goals?

That was a pivotal moment for the whole team. We launched the first A/B test in Staging Ground with clear goals: increase the rate of questions approved for the main site and decrease the time they spent in review. After running the experiment, we looked at the results for those primary metrics and… nothing. They were flat. There was this initial feeling of disappointment, like maybe we’d missed the mark. But we didn’t stop there. We started digging deeper into the data, looking at what happened to the questions after they left Staging Ground. That’s when we saw it: a significant, positive lift in what we call “success rate”—questions that stay open and get a real answer or a positive score. It was a consistent +12% boost. Realizing this felt like discovering we had built a tool that didn’t just speed up a review process, but fundamentally helped users ask better questions that the community could actually solve. It completely reframed our definition of success and validated that we were on the right path, even if it wasn’t the one we originally set out on.
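As a rough illustration of how a lift like this can be checked, the sketch below computes a relative lift and a two-proportion z-test. The interview does not say whether the +12% was relative or absolute, nor which statistical test the team ran, so both choices are assumptions, and the counts are invented purely to make the arithmetic visible.

```python
import math


def relative_lift(control_rate, treatment_rate):
    """Change in the treatment rate expressed as a fraction of the control rate."""
    return (treatment_rate - control_rate) / control_rate


def two_proportion_z(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the normal
    return z, p_value


# Invented counts: 1,000 questions per arm, "success" = stays open and gets
# an answer or a positive score.
control_success, control_n = 400, 1000
treatment_success, treatment_n = 448, 1000

lift = relative_lift(control_success / control_n, treatment_success / treatment_n)
z, p_value = two_proportion_z(control_success, control_n, treatment_success, treatment_n)
print(f"relative lift: {lift:.1%}, z = {z:.2f}, p = {p_value:.3f}")
```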

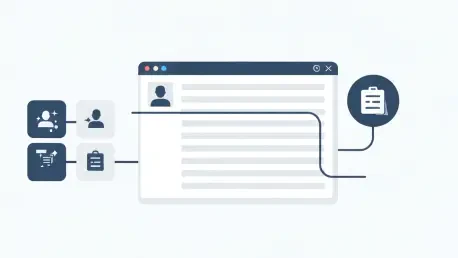

The article outlines a production flow from Databricks to Azure Kubernetes. Could you detail the real-time process that occurs when a user requests feedback? Please explain how a model prediction triggers the preloaded text and question to be sent to Gemini to generate the final response.

It’s a really smooth, two-step process that happens in seconds. First, our logistic regression models, which are trained in Databricks, are packaged up and deployed to a dedicated service running on Azure Kubernetes. When a user is drafting a question and clicks the button to get feedback, their question’s title and body are sent to this service. In real time, all four of our binary classifiers make a prediction. Now, let’s say the “Missing MRE” model fires, returning a positive prediction. This is the trigger. The system doesn’t just show a canned message. Instead, it takes a pre-written text prompt we’ve crafted specifically for the MRE issue and bundles it together with the user’s actual question text. This entire package is then sent to the Gemini API. Gemini’s job is to synthesize these two pieces of information (our generic feedback and the user’s specific context) to generate a final response that is both actionable and highly personalized to what they’ve written.
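The request flow described above can be sketched in a few lines. This is an illustrative outline, not the production code: the indicator keys, the guidance strings, the prompt layout, and the 0.5 threshold are all invented, and the call to the generative model is passed in as a plain function (`generate`) so that no particular client API is assumed.

```python
from typing import Callable, Dict

# Placeholder guidance, one entry per feedback indicator named in the interview.
# The real pre-written prompts are not public; these strings are invented.
PRELOADED_FEEDBACK: Dict[str, str] = {
    "missing_problem_definition": "Ask the author to state the exact problem.",
    "missing_attempt_details": "Ask the author what they have already tried.",
    "missing_error_logs": "Ask the author to include the full error output.",
    "missing_mre": "Ask the author for a minimal reproducible example.",
}


def build_prompt(indicator: str, title: str, body: str) -> str:
    """Bundle the preloaded guidance with the user's draft question."""
    return (
        f"Guidance: {PRELOADED_FEEDBACK[indicator]}\n"
        f"Question title: {title}\n"
        f"Question body: {body}\n"
        "Rewrite the guidance so it refers to this specific question."
    )


def question_feedback(
    title: str,
    body: str,
    classifiers: Dict[str, Callable[[str], float]],
    generate: Callable[[str], str],
    threshold: float = 0.5,
) -> Dict[str, str]:
    """Run every binary classifier; call the generator only for those that fire."""
    text = f"{title}\n{body}"
    feedback = {}
    for indicator, predict in classifiers.items():
        if predict(text) >= threshold:
            feedback[indicator] = generate(build_prompt(indicator, title, body))
    return feedback


# Stubs so the sketch runs offline: fixed scores and a fake generator.
stub_classifiers = {
    "missing_problem_definition": lambda text: 0.1,
    "missing_attempt_details": lambda text: 0.2,
    "missing_error_logs": lambda text: 0.3,
    "missing_mre": lambda text: 0.9,
}
sent_prompts = []


def stub_generate(prompt: str) -> str:
    sent_prompts.append(prompt)
    return "personalized feedback for: " + prompt.splitlines()[1]


result = question_feedback(
    "Why does my loop crash?", "It fails on the third item.",
    stub_classifiers, stub_generate,
)
print(result)
```

Keeping the generator behind a function argument also means the cheap classifiers act as a gate: the generative model is only called when at least one indicator fires.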

What is your forecast for the role of hybrid AI systems, which combine traditional ML with generative models, in shaping the future of online content quality and user-generated knowledge platforms?

I believe hybrid systems are not just a temporary solution; they represent the most pragmatic and powerful path forward for platforms built on user-generated knowledge. Relying solely on large generative models for quality control can be like using a sledgehammer for a delicate task: they are incredibly capable, but they can lack precision and consistency and can be difficult to steer reliably. On the other hand, traditional ML models, like our logistic regression classifiers, are fantastic at specific, well-defined tasks. They are reliable and fast, and we can train them to be experts at identifying very particular signals. The future, as I see it, is in using these two approaches symbiotically. We’ll increasingly use traditional ML to handle the initial, structured analysis: the “what” and “where” of a problem. This layer provides the guardrails and the specific triggers. Then, we’ll use generative AI for the final mile: the synthesis, the nuanced communication, the “how.” This fusion allows us to build systems that are not only scalable and efficient but also deeply contextual and genuinely helpful, ultimately making it easier for everyone to contribute valuable knowledge.