A simple Terraform pipeline failure typically costs about thirty minutes of engineering time. It is a persistent and costly interruption in software development that repeatedly delays project timelines and wears on engineering morale. This research summary outlines a proof-of-concept system that targets this inefficiency by automating the analysis and remediation of these common failures. The core objective is to eliminate the engineering time spent manually diagnosing and fixing repetitive errors, such as missing variables or syntax mistakes. The system automatically detects failures, generates code fixes, and opens pull requests for human review, which introduces a new paradigm for accelerating the development lifecycle. This approach turns a tedious manual process into a streamlined, automated workflow and lets developers reclaim time for more valuable work.

Automating Terraform Pipeline Remediation with an AI-Powered System

This investigation centers on a proof-of-concept system designed to automate the analysis and remediation of frequent Terraform pipeline failures. The central challenge addressed is the significant expenditure of engineering time on manually diagnosing and resolving repetitive errors, including common issues like missing variables or simple syntax mistakes. The system’s architecture is engineered to automatically detect these failures as they occur, analyze the root cause, and generate precise code fixes.

The ultimate goal is to present these generated solutions as pull requests that await human review, thereby dramatically speeding up the development lifecycle. This model doesn’t seek to remove developers from the process but rather to augment their capabilities. By handling the initial, time-consuming steps of troubleshooting, the system allows engineers to apply their expertise at the most critical stage: final validation and approval, ensuring both speed and safety are maintained.

The 30-Minute Problem: The High Cost of Repetitive Pipeline Failures

In typical development workflows, a single Terraform pipeline failure can halt progress for an average of thirty minutes. That time goes to a series of manual and often frustrating tasks. An engineer must first scan extensive logs to pinpoint the error, then work out its root cause, write a corrective code patch, and finally test the change to confirm that it resolves the issue without introducing new ones.

This recurring interruption represents a significant drain on engineering productivity. When multiplied across teams and projects, these small delays accumulate into a substantial loss of time that could otherwise be dedicated to feature development and innovation. Consequently, solving this problem is not merely an exercise in incremental efficiency; it frees up developers to focus on building value rather than performing repetitive troubleshooting, directly impacting a company’s ability to deliver products faster.

Research Methodology, Findings, and Implications

Methodology

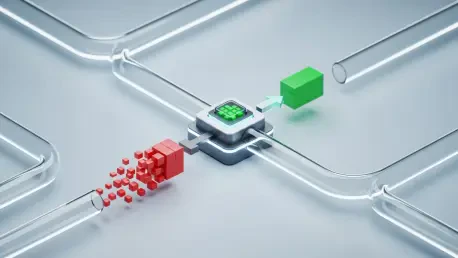

A hybrid system was developed, triggered by Azure DevOps webhooks, to address pipeline failures with a multi-layered approach. Its core logic prioritizes a fast and cost-effective pattern detection engine to identify and classify known, common failures instantly. This deterministic first pass handles the majority of predictable errors without needing more advanced analysis. For novel or unrecognized errors that fall outside these predefined patterns, the system escalates the issue to a GPT-5.2 model for deeper, more nuanced investigation.
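A minimal sketch of this tiered flow follows. It assumes Python, a small table of illustrative regexes modelled on Terraform's `Error:` diagnostics, and a stub named `escalate_to_llm` that stands in for the real GPT-5.2 call. `PATTERNS`, `Diagnosis`, and `diagnose` are hypothetical names, not the system's actual code. The key idea is the ordering: cheap regex matching runs first, and only logs that match no known pattern reach the model.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical pattern table: category -> (regex, confidence).
# The regexes are illustrative assumptions based on Terraform-style
# "Error: ..." lines, not the system's actual rule set.
PATTERNS = {
    "missing_variable": (re.compile(r"Error: No value for required variable"), 0.95),
    "invalid_region": (re.compile(r"Error: .*invalid.*region", re.IGNORECASE), 0.90),
    "syntax_error": (
        re.compile(r"Error: (Argument or block definition required"
                   r"|Invalid block definition|Unclosed configuration block)"),
        0.90,
    ),
}


@dataclass
class Diagnosis:
    category: str
    confidence: float
    source: str  # "pattern" for the deterministic pass, "llm" for escalation


def classify_with_patterns(log_text: str) -> Optional[Diagnosis]:
    """Deterministic first pass: return the first known pattern that matches."""
    for category, (regex, confidence) in PATTERNS.items():
        if regex.search(log_text):
            return Diagnosis(category, confidence, source="pattern")
    return None


def escalate_to_llm(log_text: str) -> Diagnosis:
    """Hypothetical stub for the model call; a real version would send the log to the model."""
    return Diagnosis("unknown", 0.0, source="llm")


def diagnose(log_text: str) -> Diagnosis:
    # Only failures that no pattern recognizes reach the more expensive model.
    return classify_with_patterns(log_text) or escalate_to_llm(log_text)
```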

This tiered decision logic is governed by confidence scores, which determine the appropriate action. Based on the assessed certainty of the diagnosis, the system decides whether to generate a complete code fix, offer actionable suggestions for manual implementation, or flag the issue as requiring direct human intervention. This strategic approach ensures that most failures are handled immediately and cheaply, while complex cases receive the benefit of intelligent analysis, optimizing for both speed and accuracy.
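The confidence-based routing can be sketched as a simple threshold function. `FIX_THRESHOLD` and `SUGGEST_THRESHOLD` are hypothetical values chosen for illustration only, because the summary does not state the real cut-offs. The sketch also assumes that scores fall in the range [0, 1].

```python
from enum import Enum


class Action(Enum):
    GENERATE_FIX = "generate_fix"  # open a pull request containing a complete code change
    SUGGEST = "suggest"            # post actionable suggestions for manual implementation
    ESCALATE = "escalate"          # flag the failure for direct human intervention


# Hypothetical thresholds; the real values are not given in this summary.
FIX_THRESHOLD = 0.85
SUGGEST_THRESHOLD = 0.50


def choose_action(confidence: float) -> Action:
    """Map a diagnosis confidence score in [0, 1] to a remediation action."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence must be in [0, 1], got {confidence}")
    if confidence >= FIX_THRESHOLD:
        return Action.GENERATE_FIX
    if confidence >= SUGGEST_THRESHOLD:
        return Action.SUGGEST
    return Action.ESCALATE
```

With this shape, tuning the system's caution comes down to moving two numbers. Raising `FIX_THRESHOLD` shifts borderline cases from automatic fixes to suggestions.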

Findings

The system reduced average remediation time by 93% across three distinct test scenarios, from thirty minutes of manual work to two minutes. It detected every failure triggered during testing (100% detection accuracy). In two of the three scenarios, a missing environment variable and an incorrect region specification, the system generated merge-ready code that, once approved, resolved the pipeline failure without any modification.
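The 93% figure follows directly from the two reported averages: (30 - 2) / 30 ≈ 0.933. The helper below is a trivial illustrative function, not part of the system. It makes the calculation explicit:

```python
def percent_reduction(before_minutes: float, after_minutes: float) -> float:
    """Relative reduction in remediation time, expressed as a percentage."""
    return (before_minutes - after_minutes) / before_minutes * 100


# Averages reported above: 30 minutes manual, 2 minutes with the system.
print(f"{percent_reduction(30, 2):.1f}%")  # prints "93.3%"
```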

In the third scenario, which involved a more complex syntax error, the system correctly determined that manual intervention was needed. Instead of generating a potentially breaking change, it provided actionable suggestions to guide the developer toward the correct solution. Notably, the pattern detection engine handled all three test cases without escalating to the more resource-intensive AI model. This shows that the deterministic layer can cover common, predictable errors on its own. It also means the GPT-5.2 escalation path was not exercised in these tests.

Implications

The primary implication of this research is that a significant portion of developer toil can be automated safely and effectively. By strategically keeping a human in the loop for final approval via pull requests, the system maintains critical guardrails for safety, compliance, and developer oversight while still achieving massive time savings. This workflow ensures that no automated code reaches production environments without explicit human validation.
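The pull-request step can be sketched as a call to the Azure DevOps Git REST API's "create pull request" endpoint (api-version 7.0). The sketch assumes that endpoint and personal-access-token authentication. The organization, project, repository, and branch names in the usage below are placeholders, and `build_pr_request` is a hypothetical helper. The function only builds the request, so it can be inspected before anything is sent.

```python
import base64
import json
import urllib.request


def build_pr_request(organization: str, project: str, repository_id: str,
                     source_branch: str, target_branch: str,
                     title: str, description: str, pat: str) -> urllib.request.Request:
    """Build (but do not send) an Azure DevOps 'create pull request' call."""
    url = (f"https://dev.azure.com/{organization}/{project}/_apis/git/"
           f"repositories/{repository_id}/pullrequests?api-version=7.0")
    body = {
        "sourceRefName": f"refs/heads/{source_branch}",  # branch holding the generated fix
        "targetRefName": f"refs/heads/{target_branch}",  # branch a human reviewer merges into
        "title": title,
        "description": description,
    }
    # A personal access token is sent via Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


req = build_pr_request("contoso", "infra", "terraform-repo",
                       "autofix/missing-variable", "main",
                       "Fix: add missing Terraform variable",
                       "Auto-generated fix; requires human review.", "example-pat")
# urllib.request.urlopen(req) would submit it; the bot never completes the PR itself.
```

The design choice that preserves the guardrail is that the automation stops at opening the pull request. Approval and merge remain with a reviewer, and an Azure DevOps branch policy requiring a minimum number of reviewers can enforce this on the target branch.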

This model—automating the tedious tasks of analysis and code generation while mandating human review—provides a practical and scalable blueprint for applying AI in DevOps. It enhances productivity without sacrificing control or introducing unacceptable levels of risk. This balance offers a powerful strategy for engineering teams looking to eliminate repetitive tasks and accelerate their development cycles in a responsible manner.

Reflection and Future Directions

Reflection

Several key lessons were learned during the system’s development. Initially, sending the wrong portion of the build logs to the AI resulted in poor diagnostic accuracy. Sending only the last 5,000 characters of the log, where errors typically appear, raised diagnostic accuracy from 60% to 95%. Prioritizing the deterministic pattern detection engine over an AI-for-everything approach also proved critical for performance and cost-efficiency, because most common errors did not require advanced analysis.
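The log-trimming step amounts to taking a tail slice of the log. The sketch below uses the 5,000-character window reported above. It also adds one assumption of its own that the summary does not mention: the cut is moved forward to the next line boundary so the model never receives a half-line fragment. `log_tail` is a hypothetical name.

```python
LOG_TAIL_CHARS = 5000  # window size reported in this summary


def log_tail(log_text: str, max_chars: int = LOG_TAIL_CHARS) -> str:
    """Return at most the last max_chars characters of a build log.

    Assumption (not from the source): if the cut lands mid-line, the partial
    first line is dropped so the excerpt starts at a line boundary.
    """
    if len(log_text) <= max_chars:
        return log_text
    tail = log_text[-max_chars:]
    newline = tail.find("\n")
    # Skip past the first newline when there is one; otherwise keep the raw slice.
    return tail[newline + 1:] if newline != -1 else tail
```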

The most important lesson, however, was the value of a strict safety rule: never auto-generate code to fix syntax errors, regardless of the confidence score. This single rule prevented any breaking changes during testing. It also showed that such fixes depend on contextual understanding that AI cannot yet reliably provide. The system is currently limited to Terraform and single-file changes and has been tested only in non-production environments.
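One way to express this rule is as an override applied after the confidence-based decision, so that no score can bypass it. The sketch assumes string action labels and a hypothetical `NEVER_AUTOFIX` set. It downgrades a proposed fix to suggestions, which matches how the syntax-error scenario was handled in testing.

```python
from typing import Literal

Action = Literal["generate_fix", "suggest", "escalate"]

# Hypothetical category names that must never receive auto-generated code.
NEVER_AUTOFIX = {"syntax_error"}


def apply_safety_rules(category: str, proposed: Action) -> Action:
    """Downgrade an automatic fix to suggestions for never-autofix categories.

    The confidence score is deliberately not an input: the rule holds regardless of it.
    """
    if category in NEVER_AUTOFIX and proposed == "generate_fix":
        return "suggest"
    return proposed
```

The confidence score is left out of the function signature on purpose. That way a later change to thresholds cannot accidentally re-enable automatic syntax fixes.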

Future Directions

Future work will concentrate on expanding the system’s capabilities to cover a broader spectrum of DevOps challenges. The immediate roadmap includes adding support for other critical technologies, such as Python, Docker, and Kubernetes, to address a wider range of pipeline failures beyond infrastructure-as-code. This expansion will allow the system to become a more versatile tool within a modern CI/CD pipeline.

Further plans involve exploring more advanced features, such as the ability to orchestrate multi-file code changes for more complex remediation scenarios. An automatic rollback mechanism is also under consideration, which would revert a fix if it is found to cause a new, subsequent failure. Additionally, future research will involve tracking pull request merge rates to dynamically adjust the system’s confidence thresholds, allowing it to learn and optimize its decision-making over time based on real-world feedback.
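This is still future work, but a simple bounded step rule illustrates how merge-rate feedback could drive the threshold. The target merge rate, step size, and bounds below are all hypothetical and are not values from this work. `adjust_threshold` is likewise a hypothetical name. If too few auto-generated pull requests are merged, the system demands more confidence before generating fixes. If more are merged than the target, it relaxes slightly.

```python
def adjust_threshold(threshold: float, merged: int, opened: int,
                     target_merge_rate: float = 0.8, step: float = 0.02,
                     lower: float = 0.5, upper: float = 0.99) -> float:
    """Nudge the auto-fix confidence threshold based on the observed PR merge rate.

    All parameters are illustrative assumptions; the result is clamped to [lower, upper].
    """
    if opened == 0:
        return threshold  # no feedback yet, so leave the threshold unchanged
    merge_rate = merged / opened
    if merge_rate < target_merge_rate:
        threshold += step  # reviewers reject fixes: require more confidence
    elif merge_rate > target_merge_rate:
        threshold -= step  # reviewers accept fixes: allow slightly more automation
    return min(upper, max(lower, threshold))
```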

A New Paradigm for DevOps: Human-in-the-Loop Automation

This project successfully demonstrated that a hybrid approach, which combines deterministic pattern matching with probabilistic AI, can drastically reduce the time spent fixing common pipeline failures. By automating the tedious work of log analysis and preliminary code writing, the system achieved a 93% reduction in resolution time while preserving essential human oversight for the final approval stage. This human-in-the-loop model strikes a critical balance between the velocity of automation and the safety of manual review.

The findings suggest that such a system offers a powerful and practical strategy for eliminating engineering toil and accelerating development cycles without compromising quality or control. It automates the repetitive, predictable tasks that consume valuable developer time, freeing engineers to focus on more complex, creative, and impactful work. This method represents a significant step toward more intelligent and efficient DevOps practices.