In high-stakes professional domains such as immigration law, regulatory compliance, and healthcare, unchecked AI-generated text is not a harmless bug but a costly liability. A single fabricated citation in a visa evaluation can derail an application, triggering months of appeals and real distress for the applicant, while a hallucinated clause in a corporate compliance report can lead to severe financial penalties and reputational damage. An invented reference in a clinical review document could directly jeopardize patient safety by supplying misleading information. The core issue is not that Large Language Models (LLMs) are fundamentally broken, but that in their standard form they are unaccountable. Retrieval-Augmented Generation (RAG) was introduced to ground responses in source data, yet conventional RAG systems remain brittle: retrieval can miss critical evidence, and few pipelines verify whether generated statements are actually supported by the retrieved text. The confidence scores these models produce are also often uncalibrated, which creates a false sense of security. For engineers building applications where trust is paramount, a simple “chatbot with context” is not sufficient. What is required is a methodology that verifies every claim, signals uncertainty when evidence is lacking, and builds expert human oversight into the workflow.

1. Establishing a Foundation of Trust Through Hybrid Architecture

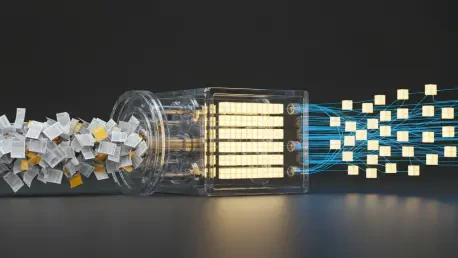

A new framework built with Django, FAISS, and open-source NLP components moves beyond conventional RAG with a pipeline that turns LLMs from creative storytellers into auditable, reliable assistants. The system is engineered around verifiability and integrates five components to keep outputs grounded in evidence: dual-track retrieval, JSON-enforced outputs that maintain structural integrity, automated claim verification that checks logical consistency between claims and evidence, confidence calibration that yields more accurate uncertainty estimates, and a dedicated interface for human oversight. This hybrid architecture is designed for environments where every detail matters. The goal is not simply to augment a language model with external documents but to build an end-to-end system in which each stage enforces accuracy and accountability. By constraining the generative process and validating its outputs against source material, the framework gives professionals who cannot afford the risks of unverified AI-generated content a transparent and dependable tool. The final output is therefore not an improvisation but a constrained, traceable, and justifiable synthesis of the retrieved information.

The initial stage of this verifiable pipeline, ingestion and chunking, addresses the complex reality of high-stakes document review, which often involves messy and heterogeneous corpora. These collections can include scanned PDFs with OCR errors, structured Word documents, dynamic HTML guidelines, and simple plain-text notes, each presenting unique challenges. Common pitfalls during this phase include the flattening or distortion of critical structural elements like headers, tables, and references, which can strip away vital context. Furthermore, Unicode quirks such as smart quotes or zero-width spaces can corrupt text embeddings, leading to poor retrieval performance downstream. A critical consideration, especially in domains like healthcare and immigration, is the stringent requirement to redact Personally Identifiable Information (PII) to maintain compliance with privacy regulations. The framework’s ingestion pipeline is meticulously designed to mitigate these issues by first converting all documents into clean UTF-8 text. It then preserves structural elements by, for example, converting tables into a JSON format rather than flattening them into unreadable text. Finally, the content is split into 400–800-token windows with approximately 15% overlap to maintain contextual continuity, ensuring that downstream retrieval processes are working with safe, structured, and clean data from the outset.
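The normalization and windowing steps can be sketched in a few lines of Python. This is a minimal illustration, not the framework's code: the function names `clean_text` and `chunk_tokens` are hypothetical, whitespace-split words stand in for the embedding model's tokenizer, and the 600-token default is simply the midpoint of the 400–800 range.

```python
import re
import unicodedata

# Smart quotes are mapped to ASCII equivalents; zero-width characters are removed outright.
SMART_QUOTES = str.maketrans({"\u2018": "'", "\u2019": "'", "\u201c": '"', "\u201d": '"'})
ZERO_WIDTH = re.compile("[\u200b\u200c\u200d\u2060\ufeff]")


def clean_text(raw: str) -> str:
    """Normalize extracted text before embedding (hypothetical helper)."""
    text = unicodedata.normalize("NFKC", raw)  # folds compatibility forms, e.g. NBSP -> space
    text = ZERO_WIDTH.sub("", text)
    text = text.translate(SMART_QUOTES)
    text = re.sub(r"[ \t]+", " ", text)  # collapse runs of spaces/tabs, keep newlines
    return text.strip()


def chunk_tokens(tokens: list[str], window: int = 600, overlap: float = 0.15) -> list[list[str]]:
    """Split tokens into fixed-size windows; consecutive windows share ~overlap * window tokens."""
    if window <= 0 or not 0.0 <= overlap < 1.0:
        raise ValueError("window must be positive and overlap must be in [0, 1)")
    step = max(1, round(window * (1 - overlap)))
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):  # this window already reaches the end
            break
    return chunks
```

For a 1,000-token document, a 600-token window with 15% overlap gives a step of 510 tokens, so the two resulting chunks share 90 tokens at their boundary.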

2. Optimizing Information Retrieval and Generation

Recognizing that no single retrieval method is universally effective, the framework implements a hybrid retrieval engine that combines the strengths of different techniques. Sparse retrieval methods like BM25 excel at keyword precision, making them highly effective for queries where specific terms are crucial. In contrast, dense embeddings are more robust to paraphrasing and semantic variation, allowing them to understand the underlying meaning and context of a query even when exact keywords are not present. The system merges these two approaches using a hybrid scoring function, where a tunable parameter, alpha, balances the influence of BM25’s keyword-based score and the dense vector similarity score. This ensures a more comprehensive and relevant set of initial search results. To further enhance the quality of the retrieved information, the documents are re-ranked using Maximal Marginal Relevance (MMR). This secondary step is designed to improve the diversity of the results and reduce redundancy, preventing the context provided to the LLM from being filled with multiple documents that all state the same information in slightly different ways. The underlying infrastructure for this engine utilizes Elasticsearch for fast and scalable BM25 retrieval and FAISS for efficient dense vector search with E5-base embeddings.
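The hybrid score and the MMR re-ranking step can be sketched as follows. This is an assumption-laden illustration rather than the framework's implementation: it min-max normalizes each track before mixing (BM25 scores are unbounded while cosine similarities are not), writes the blend as `alpha * bm25 + (1 - alpha) * dense`, and uses a hypothetical `lam` parameter for the usual MMR relevance/diversity trade-off. In a deployment the BM25 scores would come from Elasticsearch and the vectors from FAISS; here they are plain dictionaries.

```python
from math import sqrt


def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale scores to [0, 1] so BM25 and cosine scores are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 1.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


def hybrid_scores(bm25: dict[str, float], dense: dict[str, float], alpha: float = 0.5) -> dict[str, float]:
    """alpha * normalized BM25 + (1 - alpha) * normalized dense; a doc missing from a track scores 0 there."""
    b, d = min_max(bm25), min_max(dense)
    return {i: alpha * b.get(i, 0.0) + (1 - alpha) * d.get(i, 0.0) for i in set(b) | set(d)}


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu, nv = sqrt(sum(a * a for a in u)), sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def mmr(relevance: dict[str, float], vectors: dict[str, list[float]], k: int, lam: float = 0.7) -> list[str]:
    """Greedy Maximal Marginal Relevance: relevance minus similarity to already-selected chunks."""
    selected: list[str] = []
    candidates = set(relevance)
    while candidates and len(selected) < k:
        def score(i: str) -> float:
            redundancy = max((cosine(vectors[i], vectors[j]) for j in selected), default=0.0)
            return lam * relevance[i] - (1 - lam) * redundancy
        best = max(sorted(candidates), key=score)  # sorted() makes tie-breaking deterministic
        selected.append(best)
        candidates.remove(best)
    return selected
```

With a low `lam`, a near-duplicate of the top chunk is passed over in favor of a less similar one; raising `lam` toward 1 recovers an almost pure relevance ranking.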

Once relevant context has been retrieved, the generation phase must be auditable rather than improvisational. The framework enforces this by constraining the LLM’s output to a predefined JSON schema with required fields for claims, citations, potential risks, and confidence scores. This structured format makes the output predictable and machine-readable, which enables automated downstream processing and verification. A key enforcement rule requires the model to attach an explicit citation ID, such as [C1], to every generated claim, linking it to a specific piece of retrieved evidence and giving each statement a traceable lineage. When the retrieved documents do not contain the information needed to answer a query, the model is instructed to emit a [MISSING] marker. This signals the gap explicitly instead of tempting the model to hallucinate an answer, a critical feature for maintaining trust and transparency. Together, these constraints shift the LLM’s role from freeform text generator to structured information extractor whose every output can be traced and verified.
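A hand-rolled validator shows how the schema and citation rules might be enforced before any output reaches a reviewer. The field layout (a `claims` list whose items carry `text`, `citations`, and `confidence`, plus a top-level `risks` list) is an assumed schema for illustration, and `validate_output` is a hypothetical name; a production system might use a full JSON Schema validator instead.

```python
import json

REQUIRED_FIELDS = ("claims", "risks")  # assumed top-level schema
MISSING_MARKER = "[MISSING]"


def validate_output(raw: str, retrieved_ids: set[str]) -> list[str]:
    """Return a list of problems with an LLM response; an empty list means it passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]

    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in data]
    for n, claim in enumerate(data.get("claims", [])):
        if not isinstance(claim, dict):
            problems.append(f"claim {n}: must be an object")
            continue
        conf = claim.get("confidence")
        if not isinstance(conf, (int, float)) or not 0.0 <= conf <= 1.0:
            problems.append(f"claim {n}: confidence must be a number in [0, 1]")
        if MISSING_MARKER in claim.get("text", ""):
            continue  # an explicit information gap needs no citation
        cites = claim.get("citations", [])
        if not cites:
            problems.append(f"claim {n}: no citation")
        # Every citation ID (e.g. "C1") must point at a chunk that was actually retrieved.
        problems.extend(f"claim {n}: unknown citation {c}" for c in cites if c not in retrieved_ids)
    return problems
```

A response that cites a chunk ID the retriever never returned, or omits the `risks` field, is rejected with a readable reason rather than passed downstream.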

3. Verifying Claims and Calibrating Confidence

The presence of a citation alone does not guarantee that a claim is accurate, because the LLM may still misread or contradict the evidence it cites. For instance, a claim might state, “The candidate has indefinite leave to remain,” while the cited evidence reads, “The candidate holds a temporary Tier-2 visa.” Both statements reference the same document, yet the claim directly contradicts the evidence. To catch this failure mode, the framework adds an automated entailment check using a RoBERTa-MNLI model. This natural language inference (NLI) model classifies the relationship between a premise and a hypothesis as entailment, contradiction, or neutral. For each generated claim, the system treats the cited evidence as the premise and the claim as the hypothesis and computes an entailment score. Claims whose entailment score falls below a predefined threshold, such as 0.5, are automatically flagged for mandatory human review. This verification layer catches subtle but significant errors that could otherwise go unnoticed, adding a check on logical consistency to the entire pipeline.
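The flagging rule reduces to a softmax over the NLI model's three logits followed by a threshold test. The sketch below assumes the label order of the public `roberta-large-mnli` checkpoint (contradiction, neutral, entailment), which should be confirmed against the model's `id2label` config. The commented lines show one way to obtain logits with Hugging Face Transformers, but the runnable part takes logits as input so it has no model dependency; `Verdict` and `verify_claim` are hypothetical names.

```python
import math
from dataclasses import dataclass

# Assumed label order of the public roberta-large-mnli checkpoint:
# 0 = contradiction, 1 = neutral, 2 = entailment (check model.config.id2label).
ENTAILMENT_INDEX = 2

# Obtaining logits with Hugging Face Transformers (needs transformers + torch; not run here):
#   from transformers import AutoTokenizer, AutoModelForSequenceClassification
#   tok = AutoTokenizer.from_pretrained("roberta-large-mnli")
#   model = AutoModelForSequenceClassification.from_pretrained("roberta-large-mnli")
#   enc = tok(evidence, claim, return_tensors="pt")  # premise = evidence, hypothesis = claim
#   logits = model(**enc).logits[0].tolist()


@dataclass
class Verdict:
    claim: str
    entailment: float
    needs_review: bool


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def verify_claim(claim: str, logits: list[float], threshold: float = 0.5) -> Verdict:
    """Flag the claim for mandatory human review if P(entailment) < threshold."""
    p = softmax(logits)[ENTAILMENT_INDEX]
    return Verdict(claim=claim, entailment=p, needs_review=p < threshold)
```

Keeping the threshold as a parameter lets a team tighten it for the most sensitive case types without touching the model.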

A well-known issue with modern neural networks, including large language models, is that their raw probability outputs, typically produced by a softmax function, are often overconfident. A model might assign 99% probability to a claim that is only marginally supported by the evidence, which makes its confidence scores unreliable for decision-making. To counteract this, the framework applies a post-processing step known as confidence calibration. With temperature scaling, a single temperature parameter, T, is learned on a held-out validation set by minimizing the negative log-likelihood; at inference time the logits are divided by T before the softmax is applied. A value of T greater than 1 softens the distribution and corrects overconfidence without changing which class is predicted. In practice this calibration proved effective: in one case the Expected Calibration Error (ECE) dropped from 0.079 to 0.042, a reduction of nearly 47%. ECE is the bin-weighted average gap between stated confidence and observed accuracy, so a value of 0.042 means that, on average, the calibrated system’s reported confidence differs by only about four percentage points from how often its claims are actually correct. A reported 80% confidence is therefore a far more trustworthy signal for human reviewers.
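Temperature scaling and ECE are both short computations. This sketch fits T by grid search over the validation negative log-likelihood, a simple stand-in for the gradient-based optimizer usually used, and computes ECE with ten equal-width confidence bins. The function names and the grid range (0, 10] are assumptions.

```python
import math


def log_softmax(logits: list[float], T: float = 1.0) -> list[float]:
    """Log-probabilities after dividing logits by T (stable: never takes log(0))."""
    scaled = [x / T for x in logits]
    m = max(scaled)
    lse = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - lse for x in scaled]


def softmax(logits: list[float], T: float = 1.0) -> list[float]:
    return [math.exp(v) for v in log_softmax(logits, T)]


def nll(rows: list[list[float]], labels: list[int], T: float) -> float:
    """Mean negative log-likelihood of the true labels at temperature T."""
    return -sum(log_softmax(r, T)[y] for r, y in zip(rows, labels)) / len(labels)


def fit_temperature(rows: list[list[float]], labels: list[int]) -> float:
    """Choose T on a validation set by grid search over (0, 10]."""
    grid = [0.05 * k for k in range(1, 201)]
    return min(grid, key=lambda T: nll(rows, labels, T))


def ece(confidences: list[float], correct: list[bool], n_bins: int = 10) -> float:
    """Expected Calibration Error: bin-size-weighted mean |accuracy - confidence|."""
    n = len(confidences)
    total = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        idx = [i for i, c in enumerate(confidences) if lo < c <= hi or (b == 0 and c == 0.0)]
        if idx:
            acc = sum(correct[i] for i in idx) / len(idx)
            conf = sum(confidences[i] for i in idx) / len(idx)
            total += len(idx) / n * abs(acc - conf)
    return total
```

On a toy validation set where the model predicts class 0 with about 99% confidence but is right only 70% of the time, the fitted T is close to 5.9 and ECE falls from about 0.29 to nearly zero.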

4. Measuring Real-World Impact and Human Oversight

While automation is essential for reducing toil and increasing efficiency, human judgment remains the final arbiter of legitimacy in high-stakes environments. The framework is built around this principle, with a human oversight interface that supports close collaboration between the AI and human experts. A dashboard built with Django and htmx presents each generated claim alongside its supporting evidence, allowing experts to assess validity quickly and to accept, reject, or edit the generated text directly in the interface. To ensure consistency and resolve disputes, the system uses a dual-review model in which contentious cases are escalated to arbitration when the initial reviewers disagree. Every action taken by a human expert, whether an acceptance, a rejection, or an edit, is recorded in immutable audit logs. Each entry is timestamped and sealed with a SHA-256 digest, producing a permanent, tamper-evident record of the decision-making process. This audit trail provides the accountability and transparency that regulated fields require. The reported high inter-rater reliability between reviewers further suggests that the interface supports consistent expert judgments.
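A tamper-evident log entry can be sketched with the standard library alone. Two details are assumptions rather than documented behavior of the framework: the digest is an HMAC-SHA256 keyed with a server-side secret (a bare SHA-256 hash could simply be recomputed by anyone who edits the log), and each entry includes the previous entry's digest so that altering or removing an earlier record breaks the chain. Storage, for example in a Django model, is omitted, and all names are hypothetical.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

ACTIONS = {"accept", "reject", "edit"}
GENESIS = "0" * 64  # placeholder "previous digest" for the first entry


def _digest(body: dict, key: bytes) -> str:
    payload = json.dumps(body, sort_keys=True).encode("utf-8")  # canonical serialization
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def append_entry(log: list[dict], reviewer: str, claim_id: str, action: str, key: bytes) -> dict:
    """Append a timestamped, HMAC-SHA256-sealed entry chained to the previous one."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    body = {
        "reviewer": reviewer,
        "claim_id": claim_id,
        "action": action,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev": log[-1]["digest"] if log else GENESIS,
    }
    entry = {**body, "digest": _digest(body, key)}
    log.append(entry)
    return entry


def verify_log(log: list[dict], key: bytes) -> bool:
    """Recompute every digest and chain link; an edited, reordered, or removed entry fails."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "digest"}
        if body.get("prev") != prev or not hmac.compare_digest(_digest(body, key), entry["digest"]):
            return False
        prev = entry["digest"]
    return True
```

In practice the key would live in a secrets manager, separate from the database that stores the log.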

The tangible impact of this hybrid stack shows up as improvements across several key metrics relative to a baseline RAG system. The system achieved a 23% increase in groundedness, meaning a higher proportion of claims were directly supported by evidence. Hallucinated or fabricated claims fell by 41%, substantially reducing the risk of misinformation. Confidence calibration cut the Expected Calibration Error by 47%, making the model’s reported confidence scores far more reliable. These technical gains translated into higher trust from human users, reflected in a 17% increase in the expert acceptance rate of AI-generated claims. The framework also delivered a 43% reduction in average case review time, from 5.1 hours to 2.9 hours. Scaled across a high-volume operation, such as an agency processing 10,000 visa cases per year, saving 2.2 hours per case recovers about 22,000 expert hours annually, roughly the capacity of ten to eleven full-time employees at about 2,000 working hours each. Investing in verifiability and trust therefore not only reduces risk but also yields substantial operational efficiencies.
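The review-time figures are easy to check. A small helper reproduces the 43% reduction and the nearly 47% ECE drop, and shows that 2.2 hours saved on each of 10,000 cases comes to about 22,000 hours. The 2,000 working hours per full-time employee is an assumed figure, and the function names are illustrative.

```python
def pct_reduction(before: float, after: float) -> float:
    """Relative reduction, in percent."""
    return 100 * (before - after) / before


def review_savings(hours_before: float, hours_after: float, cases_per_year: int,
                   hours_per_fte: float = 2000.0) -> dict[str, float]:
    """Annual hours saved and their full-time-employee equivalent (hours_per_fte is assumed)."""
    hours_saved = (hours_before - hours_after) * cases_per_year
    return {
        "time_reduction_pct": pct_reduction(hours_before, hours_after),
        "hours_saved": hours_saved,
        "fte_equivalent": hours_saved / hours_per_fte,
    }
```

Calling `review_savings(5.1, 2.9, 10_000)` gives a reduction of about 43.1%, roughly 22,000 hours saved, and about 11 full-time equivalents; `pct_reduction(0.079, 0.042)` gives about 46.8%.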

5. Deployment Architecture and Future Development

The framework is built on a modern, scalable technology stack designed for robust enterprise deployment. The core application logic is handled by Django 4.2 and Django REST Framework, providing a solid foundation for building APIs and web interfaces. Asynchronous tasks, such as document ingestion and model inference, are managed by a Celery queue backed by Redis, ensuring that the system remains responsive even under heavy load. The hybrid search functionality is powered by a combination of Elasticsearch for BM25 retrieval and FAISS for high-speed vector similarity search. For the generative component, the system is flexible and can be configured to use either a powerful proprietary model like GPT-4 or a high-performance open-source alternative such as Mixtral-8x7B. The crucial claim verification step is handled by a fine-tuned RoBERTa-MNLI model. The entire architecture is designed to be containerized with Docker and orchestrated with Kubernetes, allowing it to scale horizontally to handle millions of document chunks while managing resources efficiently. Each component is chosen to contribute to a reliable, scalable, and maintainable system capable of meeting the demands of high-stakes, real-world applications.

Even a well-designed system will encounter failure modes, which should be treated as valuable design signals rather than mere bugs. For example, the retrieval system may struggle with niche policy language or specialized jargon, leading to information gaps. The mitigation for this is to move beyond generic datasets and curate a specialized corpus that accurately reflects the domain’s terminology. Another potential issue is the failure of the natural language inference model on very long claims that exceed its token limit; the solution here is to implement a pre-processing step that chunks longer claims into smaller, more manageable segments before verification. Finally, any AI system is susceptible to inheriting biases present in its training corpus, which can lead to biased outputs. This risk is managed through a combination of continuous human arbitration to catch and correct biased generations and regular refresh cycles for the underlying data to ensure it remains current and representative. Looking ahead, future work on the framework includes expanding its capabilities with multilingual retrieval using LASER 3 embeddings to support over 50 languages, developing a template synthesis feature to automatically generate follow-up questions for [MISSING] information slots, and creating a federated deployment model that allows on-premise embeddings with shared gradient updates for enhanced privacy and collaboration.
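The claim-splitting mitigation might look like the sketch below, which packs sentences into segments under a word budget and hard-wraps any single over-long sentence. The 128-word budget is an arbitrary assumption meant to leave room for the evidence passage within RoBERTa's 512-token input, and scoring a claim by its weakest segment (so every part must be entailed) is one reasonable aggregation rule, not necessarily the framework's. Both function names are hypothetical.

```python
import re


def split_claim(claim: str, max_words: int = 128) -> list[str]:
    """Pack sentences into segments of at most max_words words; hard-wrap over-long sentences."""
    sentences = [s for s in re.split(r"(?<=[.;:])\s+", claim.strip()) if s]
    segments: list[str] = []
    current: list[str] = []
    for sentence in sentences:
        words = sentence.split()
        while len(words) > max_words:  # a single sentence longer than the budget
            if current:
                segments.append(" ".join(current))
                current = []
            segments.append(" ".join(words[:max_words]))
            words = words[max_words:]
        if len(current) + len(words) > max_words:
            segments.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        segments.append(" ".join(current))
    return segments


def claim_entailment(segment_scores: list[float]) -> float:
    """A claim counts as supported only if its weakest segment is entailed."""
    return min(segment_scores, default=0.0)
```

Each segment would be scored against the cited evidence separately, and the minimum compared with the same review threshold used for short claims.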

6. A New Standard for Credible Collaboration

Developers operating in regulated, high-trust environments need to move beyond the limitations of raw LLMs. A hybrid architecture that combines dual-track retrieval, strict schema enforcement, entailment verification, confidence calibration, and continuous human oversight produces systems that are demonstrably more efficient, reliable, and auditable. The blueprint is not confined to visa assessments; its principles carry over to other critical domains, including healthcare audits, regulatory compliance checks, and the validation of scientific literature. The core takeaway is that grounding should not be an afterthought but a central architectural principle in the design of AI systems. By engineering pipelines with verifiability at their core, it is possible to turn inherently probabilistic and sometimes unreliable language models into credible, trustworthy collaborators for professionals in the most demanding fields. The result is a system designed not for novelty or entertainment, but for trust.