I’m thrilled to sit down with Vijay Raina, a renowned expert in enterprise SaaS technology and software architecture. With his deep knowledge of modern data systems and thought leadership in design, Vijay is the perfect person to guide us through the intricacies of data quality management, particularly in the context of cutting-edge tools like Databricks’ Delta Live Tables. Today, we’ll explore how Delta Expectations are revolutionizing data reliability, the architectural shifts they bring to data engineering, and practical strategies for implementing robust data quality practices in high-throughput environments.

How do data quality failures sneak into systems, and why do they pose such a significant challenge for organizations?

Data quality failures often creep in silently through small errors like malformed timestamps or illogical values, such as negative revenue figures. These issues might seem minor at first, but they compound over time. Before you know it, a quarterly report shows impossible numbers, or an ML model starts producing garbage output. The challenge for organizations is twofold: first, these errors aren’t immediately obvious, and second, they can propagate downstream, affecting everything from analytics to decision-making. The hidden cost is also huge—beyond the financial hit, which studies estimate at millions annually, there’s the engineering time lost to firefighting these issues instead of building new capabilities.

What makes write-time validation a game-changer compared to traditional data validation approaches?

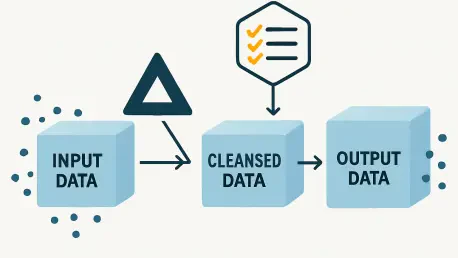

Traditional validation happens after data is written to storage. You extract, transform, load, and then check for issues using tools like Great Expectations. By then, bad data has already landed in your system, potentially polluting storage and being consumed by downstream processes. Write-time validation, as enabled by Delta Expectations in Delta Live Tables, flips this on its head. It embeds validation into the write transaction itself, so issues are caught before data is persisted. This proactive approach prevents invalid data from ever hitting your lakehouse, reducing storage pollution and stopping downstream propagation. It’s a shift from reacting to problems to enforcing data quality as a contract upfront.
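To make the contrast concrete, here is a minimal plain-Python sketch of the two flows. It is not Delta Live Tables code: the "table" is an ordinary list, and `write_then_check`, `check_then_write`, and `InvalidBatch` are hypothetical names invented for illustration.

```python
class InvalidBatch(Exception):
    """Raised when a batch fails validation before the commit (hypothetical)."""


def write_then_check(table, batch, is_valid):
    """Traditional flow: persist first, then report bad rows already stored."""
    table.extend(batch)
    return [row for row in table if not is_valid(row)]


def check_then_write(table, batch, is_valid):
    """Write-time flow: validate the batch, commit only if every row passes."""
    bad = [row for row in batch if not is_valid(row)]
    if bad:
        raise InvalidBatch(f"{len(bad)} invalid row(s); nothing committed")
    table.extend(batch)


def is_valid(row):
    # Example rule: revenue must not be negative.
    return row["revenue"] >= 0


batch = [{"revenue": 120}, {"revenue": -5}]

# Post-write validation: the bad row is found, but it is already in storage.
post_write_table = []
found_after = write_then_check(post_write_table, batch, is_valid)

# Write-time validation: the batch is rejected and the table stays untouched.
write_time_table = []
try:
    check_then_write(write_time_table, batch, is_valid)
    rejected = False
except InvalidBatch:
    rejected = True
```

In the first flow the negative revenue row sits in the table until someone cleans it up; in the second, the table never sees it.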

Can you walk us through how Delta Expectations operate within a data pipeline?

Delta Expectations are a feature of the Delta Live Tables framework and leverage Spark’s lazy evaluation along with Delta Lake’s atomicity guarantees. When you define an expectation, say, ensuring a price column is greater than zero, it is compiled into a predicate in Spark’s logical plan. During execution, Spark evaluates this predicate for each record as part of the transformation process. What happens to failed records depends on the mode you’ve set: FAIL mode (`expect_or_fail`) aborts the entire write, DROP mode (`expect_or_drop`) filters them out, and WARN mode (plain `expect`) logs them as violations while still writing them. The key is that this validation happens on data in motion, within DataFrames, before anything is committed to a Delta table. In FAIL and DROP modes, that ensures only valid data gets persisted.
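The three modes can be modeled in a few lines of plain Python. This is only a sketch of the semantics described above, not the Delta Live Tables API: in a real pipeline the equivalent behavior comes from the `@dlt.expect`, `@dlt.expect_or_drop`, and `@dlt.expect_or_fail` decorators, whereas `apply_expectation`, `Result`, and `ExpectationFailed` below are hypothetical names.

```python
from dataclasses import dataclass


class ExpectationFailed(Exception):
    """Raised in 'fail' mode when any record violates the expectation."""


@dataclass
class Result:
    kept: list        # records that would proceed to the write
    violations: int   # number of records that failed the predicate


def apply_expectation(records, name, predicate, mode="warn"):
    """Evaluate one expectation over a batch before anything is written."""
    if mode not in {"warn", "drop", "fail"}:
        raise ValueError(f"unknown mode: {mode}")
    kept, violations = [], 0
    for rec in records:
        if predicate(rec):
            kept.append(rec)
            continue
        violations += 1
        if mode == "fail":
            # Abort the whole batch: nothing is handed on for writing.
            raise ExpectationFailed(f"{name} violated by {rec!r}")
        if mode == "warn":
            # Count the violation but let the row through.
            kept.append(rec)
        # mode == "drop": the row is simply not kept.
    return Result(kept, violations)


def positive_price(rec):
    return rec["price"] > 0


batch = [{"price": 10.0}, {"price": -1.0}, {"price": 3.5}]

warned = apply_expectation(batch, "positive_price", positive_price, "warn")
dropped = apply_expectation(batch, "positive_price", positive_price, "drop")

try:
    apply_expectation(batch, "positive_price", positive_price, "fail")
    aborted = False
except ExpectationFailed:
    aborted = True
```

Here `warned.kept` still holds all three rows with one violation counted, `dropped.kept` holds only the two valid rows, and the `fail` call aborts the batch.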

How does the Bronze-Silver-Gold medallion architecture complement Delta Expectations in managing data quality?

The Bronze-Silver-Gold architecture is a layered approach to data processing that aligns beautifully with Delta Expectations. In the Bronze layer, you ingest raw data with minimal transformation, preserving source fidelity for audits or replays. Here, you might apply basic expectations to log issues without dropping data. As you move to the Silver layer, expectations become stricter, ensuring cleaned and validated data for downstream consumers. Invalid records can be quarantined into separate tables for analysis. Finally, the Gold layer contains fully curated, business-ready data. Delta Expectations at each stage enforce quality rules, while the architecture provides a structured way to handle bad data without losing it, enabling debugging and continuous improvement.
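A rough sketch of that layering, again in plain Python rather than Delta Live Tables: the rule names, the `_failed_rules` column, and the `to_bronze`, `to_silver`, and `to_gold` helpers are all assumptions made for illustration.

```python
from typing import Callable, Dict, List

Rule = Callable[[dict], bool]

# Hypothetical quality rules for an orders feed.
RULES: Dict[str, Rule] = {
    "valid_order_id": lambda r: r.get("order_id") is not None,
    "positive_amount": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] > 0,
}


def failed_rules(record: dict, rules: Dict[str, Rule]) -> List[str]:
    """Return the names of every rule the record violates."""
    return [name for name, rule in rules.items() if not rule(record)]


def to_bronze(raw):
    """Bronze: keep every record as-is, only tally violations per rule."""
    counts = {name: 0 for name in RULES}
    for rec in raw:
        for name in failed_rules(rec, RULES):
            counts[name] += 1
    return list(raw), counts


def to_silver(bronze):
    """Silver: pass clean rows on, route the rest to quarantine with reasons."""
    silver, quarantine = [], []
    for rec in bronze:
        reasons = failed_rules(rec, RULES)
        if reasons:
            quarantine.append({**rec, "_failed_rules": reasons})
        else:
            silver.append(rec)
    return silver, quarantine


def to_gold(silver):
    """Gold: a business-ready aggregate built only from validated rows."""
    return {"orders": len(silver), "total_amount": sum(r["amount"] for r in silver)}


raw = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": None, "amount": 5.0},
    {"order_id": 3, "amount": -4.0},
]

bronze, bronze_violations = to_bronze(raw)
silver, quarantine = to_silver(bronze)
gold = to_gold(silver)
```

Nothing is lost along the way: Bronze keeps all three records, and the two rejected ones land in `quarantine` with the names of the rules they failed, which is what makes later debugging possible.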

What are some key considerations when using Delta Expectations in a streaming data environment?

Streaming data introduces unique challenges, like late-arriving data and the need for watermarks. With Delta Expectations in a streaming context, the validation happens after Spark Structured Streaming applies the watermark, which lets stateful operations such as windowed aggregations and deduplication drop events older than a specified threshold. Only the remaining timely records are then evaluated against your expectations. This layered filtering ensures that only valid and relevant data gets written to your Delta tables. However, you need to carefully coordinate watermarks with expectation logic to avoid unintended data loss, and you should monitor latency to ensure validation doesn’t bottleneck your streaming pipeline.
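The two-stage filtering can be illustrated with a deliberately simplified model. It is plain Python, not Structured Streaming: the watermark is computed over a single in-memory batch, whereas the real engine tracks it across micro-batches and ties it to stateful operators. The helper names and the sensor fields are hypothetical.

```python
from datetime import datetime, timedelta


def apply_watermark(events, max_delay):
    """Keep events no older than (latest event time in the batch - max_delay)."""
    if not events:
        return []
    watermark = max(e["event_time"] for e in events) - max_delay
    return [e for e in events if e["event_time"] >= watermark]


def apply_drop_expectation(events, predicate):
    """Drop-style expectation: only records passing the predicate survive."""
    return [e for e in events if predicate(e)]


def plausible_temperature(event):
    # Nothing can be colder than absolute zero.
    return event["temp_c"] > -273.15


now = datetime(2024, 1, 1, 12, 0, 0)
events = [
    {"id": "a", "event_time": now, "temp_c": 21.5},                           # timely, valid
    {"id": "b", "event_time": now - timedelta(minutes=5), "temp_c": -500.0},  # timely, invalid
    {"id": "c", "event_time": now - timedelta(hours=2), "temp_c": 19.0},      # late, valid
]

# Stage 1: the watermark removes late events.
timely = apply_watermark(events, timedelta(minutes=10))
# Stage 2: the expectation removes invalid events among the timely ones.
written = apply_drop_expectation(timely, plausible_temperature)
```

Record `c` is perfectly valid but never reaches the expectation because the watermark removed it first. That is the kind of unintended loss to watch for when choosing the threshold.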

How can Delta Expectations impact the performance of a data pipeline, and what strategies can mitigate any overhead?

Delta Expectations do add some overhead since each expectation introduces a predicate evaluation into the Spark execution plan. The impact depends on factors like the complexity of the predicate—simple comparisons are cheap, while regex or user-defined functions can be costly—and the volume of data being processed. To mitigate this, you can order DROP expectations early in the pipeline to filter out invalid records before further processing. Combining related validations into a single predicate also helps. Additionally, avoid heavy user-defined functions and opt for vectorized SQL expressions when possible. Testing with your specific workload and SLAs is crucial to strike the right balance between quality enforcement and performance.
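The ordering advice can be demonstrated without Spark by counting how often a costly check runs. This is an illustrative plain-Python sketch; the checks, the email pattern, and the `validate` helper are assumptions, and real savings depend on your workload.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
calls = {"regex": 0}  # how many times the costly check was evaluated


def cheap_check(row):
    """Simple comparison: inexpensive to evaluate."""
    return row["price"] > 0


def costly_check(row):
    """Regex match: stands in for an expensive predicate or UDF."""
    calls["regex"] += 1
    return EMAIL_RE.match(row["email"]) is not None


def validate(rows, checks):
    """Apply drop-style checks in order; each stage only sees survivors."""
    for check in checks:
        rows = [r for r in rows if check(r)]
    return rows


rows = [
    {"price": 9.99, "email": "a@example.com"},
    {"price": -1.0, "email": "b@example.com"},
    {"price": 0.0, "email": "not-an-email"},
    {"price": 4.50, "email": "bad@"},
]

calls["regex"] = 0
result_cheap_first = validate(rows, [cheap_check, costly_check])
calls_cheap_first = calls["regex"]

calls["regex"] = 0
result_costly_first = validate(rows, [costly_check, cheap_check])
calls_costly_first = calls["regex"]
```

Both orderings keep the same single row, but running the cheap filter first means the regex is evaluated on two rows instead of four.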

What’s your forecast for the future of data quality tools like Delta Expectations in modern data architectures?

I see Delta Expectations evolving into a cornerstone of automated data contracts within modern architectures. We’re likely to see advancements like auto-generated expectations based on historical data profiles, which would reduce manual configuration. Contract versioning tied to pipeline and schema releases will become standard, ensuring consistency as systems evolve. I also anticipate organization-wide contract publishing to support data mesh paradigms, where domains can define and share quality standards. Ultimately, integrating these tools with schema registries for full lifecycle coverage will make data reliability a seamless, built-in guarantee rather than an afterthought.