The most sophisticated artificial intelligence model, trained on petabytes of historical data and validated with near-perfect accuracy, often begins to fail the moment it meets the unpredictable reality of a live user. This quiet degradation of performance is a widespread challenge in the mobile application landscape, turning promising AI features into sources of user frustration. The core issue is not a flaw in the initial model but a fundamental disconnect between its static knowledge and the dynamic world it inhabits. To overcome this, development must evolve beyond building isolated models and toward engineering resilient, adaptive systems that learn continuously from every user interaction.

Why Do Good AI Models Go Bad After Launch?

Post-launch performance decay affects many mobile AI implementations. A recommendation engine that once delighted users with relevant suggestions may start promoting irrelevant content, or a personalization feature that streamlined workflows can become an obstacle. This happens because a deployed model is a snapshot in time, trained on a historical dataset that represents a past version of reality. Once launched, it meets a constantly changing environment to which it cannot adapt on its own.

This silent misalignment is known as model drift. It occurs as the statistical properties of live data diverge from those of the data the model was trained on. User behaviors shift, new product features are introduced, and external trends alter engagement patterns. A static model, blind to these changes, inevitably grows stale. Its predictions become less accurate, its utility diminishes, and the user experience suffers as the gap widens between what the model learned and present reality.
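One common way to quantify this divergence for a single numeric feature is the Population Stability Index (PSI), which compares how a training-time sample and a live sample fall into the same buckets. The sketch below is illustrative only: the function name, the bucket count, and the sample data are assumptions, and the alert threshold is something each team would need to choose for itself.

```python
import math
from collections import Counter


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Compare a training-time sample with a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def bucket_shares(values):
        # Clamp out-of-range live values into the first or last bucket.
        counts = Counter(
            min(max(int((v - lo) / width), 0), bins - 1) for v in values
        )
        # eps keeps the logarithm defined when a bucket is empty.
        return [max(counts[b] / len(values), eps) for b in range(bins)]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical samples: the live data has shifted into the upper half of the range.
training_sample = [i / 100 for i in range(100)]
live_sample = [0.5 + i / 200 for i in range(100)]
stable_psi = population_stability_index(training_sample, training_sample)
drifted_psi = population_stability_index(training_sample, live_sample)
```

Identical distributions yield a PSI of zero, and the value grows as the live distribution moves away from the training distribution, which makes it a convenient signal to track per feature over time.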

Therefore, the strategic focus must pivot from the unattainable goal of building a single “perfect” model to the more practical objective of creating a resilient, learning system. The challenge is no longer just about machine learning; it is about systems engineering. Success depends on designing an architecture that anticipates change and is equipped with the mechanisms to detect drift, gather new ground truth data, and automatically retrain and redeploy updated models to stay in sync with its users.

The Vicious Cycle: From Fire-and-Forget to a Continuous Feedback Loop

The traditional AI development pipeline is often a one-way street, a “fire-and-forget” process that inherently leads to model drift. In this approach, engineers collect a large historical dataset, use it to train and validate a model offline, and then embed that static artifact into the application package. Once deployed, the model operates in isolation, with no formal mechanism to receive feedback on its real-world performance or to learn from the outcomes of its predictions.

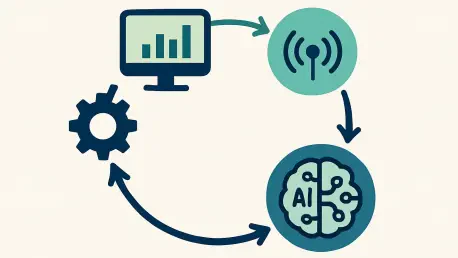

The necessary paradigm shift involves transforming this linear process into a continuous, closed-loop system. This is achieved by embracing a telemetry-driven architecture that turns the one-way street into a cyclical feedback loop. In this new approach, the system is designed not only to serve predictions but also to meticulously capture the user’s reaction to them. The live production environment becomes the primary source of new training data, enabling the system to keep learning and improving for as long as it runs.

At the heart of this transformation is the elevation of telemetry to a first-class citizen in the development lifecycle. It is no longer a secondary concern for general analytics but the primary driver of model intelligence. This means systematically logging every model prediction alongside its context and, just as importantly, logging the corresponding user-validated outcomes. Actions such as clicks, dismissals, purchases, or shares become the ground truth signals that fuel the entire learning cycle, providing the data needed to measure, diagnose, and improve the AI’s performance over time.

The Anatomy of a Telemetry-Driven AI System: A Six-Layer Architecture

This intelligent system is built upon a cohesive, six-layer architecture that manages the flow of information from user interaction back to model deployment. The cycle originates at the UX and Interaction Layer within the mobile application, where user intent is captured through taps, scrolls, and other engagements with AI-driven content. Immediately adjacent is the Telemetry Layer, a specialized in-app SDK that does more than just log events. It enforces a strict data schema, intelligently batches events to preserve device performance, and applies privacy filters at the source to protect user information.
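The three responsibilities of the Telemetry Layer (schema enforcement, batching, and privacy filtering at the source) can be sketched in a few lines. This is a minimal illustration written in Python for brevity; a production SDK would live in the app's own language, and the field names, the denylist, and the `send` callback are all assumptions rather than part of any real library.

```python
import json
from typing import Callable

# Assumed schema: fields every event must carry before it is accepted.
REQUIRED_FIELDS = {"eventType", "requestId", "modelVersion", "timestamp"}
# Assumed privacy denylist: fields stripped on the device before upload.
BLOCKED_FIELDS = {"email", "rawText", "deviceName"}


class TelemetryBatcher:
    """Validates, filters, and batches events before handing them to a transport."""

    def __init__(self, send: Callable[[str], None], max_batch: int = 20):
        self._send = send          # hypothetical transport, e.g. an HTTPS uploader
        self._max_batch = max_batch
        self._buffer = []

    def log(self, event: dict) -> bool:
        # Schema enforcement: reject events that lack required fields.
        if REQUIRED_FIELDS - event.keys():
            return False
        # Privacy filter: drop blocked fields at the source.
        clean = {k: v for k, v in event.items() if k not in BLOCKED_FIELDS}
        self._buffer.append(clean)
        # Batching: send only once enough events have accumulated.
        if len(self._buffer) >= self._max_batch:
            self.flush()
        return True

    def flush(self) -> None:
        if not self._buffer:
            return
        payload = json.dumps(self._buffer)
        self._buffer = []
        self._send(payload)
```

A real client would also flush on a timer and when the app moves to the background, and would persist unsent batches across restarts; those details are omitted here.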

Once captured, this data embarks on its journey through the Transport and Ingestion Layer, a secure bridge moving event batches from the client to the backend. Here, high-throughput streaming systems like Kafka or Google Pub/Sub ingest the data in real time, making it available for immediate processing while also archiving it in a data lake for historical analysis. This feeds into the Feature and Label Pipelines, the true heart of the learning loop. In this layer, backend jobs process the raw event streams, joining prediction events with their corresponding outcome events to construct accurate labels and derive new features that describe user behavior and context.

With newly labeled data available, the process moves to the Training, Evaluation, and Monitoring Layer. This is the domain of machine learning practitioners, who use automated systems to train new model versions and continuously monitor for performance regressions, data drift, or statistical bias. Finally, the Serving and Model Delivery Layer closes the loop. Newly trained and validated models are delivered back to the application, either for on-device inference or through cloud APIs. This delivery is managed dynamically with remote configuration and feature flags, enabling controlled rollouts and A/B testing to ensure that each update genuinely improves the user experience.
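A controlled rollout usually needs a stable way to decide which users receive the candidate model. One widely used technique is to hash a user key together with an experiment identifier and compare the resulting bucket to the rollout percentage. The sketch below assumes hypothetical function names and a 10,000-bucket resolution; a real app would typically read `rollout_fraction` from its remote configuration service.

```python
import hashlib

BUCKETS = 10_000  # assumed resolution: 0.01% steps


def rollout_bucket(user_key: str, experiment_id: str) -> int:
    """Map a user deterministically to a bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(f"{experiment_id}:{user_key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def choose_model(user_key, experiment_id, candidate, stable, rollout_fraction):
    """Return the candidate model version for roughly rollout_fraction of users."""
    threshold = rollout_fraction * BUCKETS
    return candidate if rollout_bucket(user_key, experiment_id) < threshold else stable
```

Because the bucket depends only on the user key and the experiment, a user keeps the same assignment across sessions, and raising the fraction only adds users to the candidate group without reshuffling existing ones.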

A Practical Walkthrough: Powering a Personalized Content Feed

To see this architecture in practice, consider its application to a personalized “For You” content feed. When a user navigates to this screen, the application generates and logs a PredictionEvent. This event contains a rich payload of information, including the list of recommended content IDs that were shown, the version of the model that generated the recommendations, and a unique requestId to tie the prediction to subsequent user actions.

As the user interacts with the feed, the system captures their feedback by logging corresponding OutcomeEvents. If the user taps on a recommended article, an outcome event is logged with the action clicked. If they scroll past other items without engaging, those are implicitly labeled as ignored. A user might also explicitly dismiss a piece of content, triggering a dismissed event. Each of these outcome events includes the same requestId that was generated with the initial prediction, creating an unbreakable link between the model’s output and the user’s reaction.
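The two event types described above could be modeled roughly as follows. The attribute names are Python-style renderings of the article's `requestId` and model version fields, and everything else (the timestamp field, the UUID-based identifier, the validation of allowed actions) is an assumption made for illustration.

```python
import time
import uuid
from dataclasses import dataclass, field

ALLOWED_ACTIONS = {"clicked", "ignored", "dismissed"}


@dataclass(frozen=True)
class PredictionEvent:
    model_version: str                      # which model produced the list
    content_ids: tuple                      # recommended items, in display order
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: float = field(default_factory=time.time)


@dataclass(frozen=True)
class OutcomeEvent:
    request_id: str                         # copied from the PredictionEvent
    content_id: str
    action: str
    timestamp: float = field(default_factory=time.time)

    def __post_init__(self):
        # Reject actions outside the agreed vocabulary.
        if self.action not in ALLOWED_ACTIONS:
            raise ValueError(f"unknown action: {self.action}")


# Usage: the outcome reuses the request_id generated with the prediction.
shown = PredictionEvent(model_version="feed-v3", content_ids=("a1", "a2", "a3"))
tapped = OutcomeEvent(request_id=shown.request_id, content_id="a2", action="clicked")
```

Generating the identifier once, at prediction time, and copying it into every outcome is what makes the later join possible without guessing from timestamps.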

This linkage is what powers the entire learning cycle. On the backend, an automated job regularly processes these events, joining the PredictionEvents with their associated OutcomeEvents using the shared requestId. This process creates a clean, structured training dataset. From this joined data, engineers can calculate offline metrics such as click-through rate and prepare labeled examples (clicked, ignored, or dismissed) to feed into the next training cycle, so that the next version of the model is better attuned to user preferences.
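The join itself can be sketched with plain dictionaries. The example below assumes events arrive as dicts with `requestId`, `contentIds`, `contentId`, and `action` keys, treats any shown item without an explicit outcome as ignored, and lets a click outrank a dismissal when both exist for the same item; each of these choices is an illustrative assumption, and a production job would also wait for an attribution window before labeling.

```python
PRIORITY = {"ignored": 0, "dismissed": 1, "clicked": 2}  # assumed precedence


def build_training_rows(predictions, outcomes):
    """Join prediction and outcome events on requestId, one row per shown item."""
    observed = {}
    for o in outcomes:
        key = (o["requestId"], o["contentId"])
        current = observed.get(key, "ignored")
        if PRIORITY[o["action"]] > PRIORITY[current]:
            observed[key] = o["action"]

    rows = []
    for p in predictions:
        for content_id in p["contentIds"]:
            rows.append({
                "requestId": p["requestId"],
                "modelVersion": p["modelVersion"],
                "contentId": content_id,
                # Items with no recorded outcome are implicitly ignored.
                "label": observed.get((p["requestId"], content_id), "ignored"),
            })
    return rows


def click_through_rate(rows):
    """Share of shown items that were clicked."""
    return sum(r["label"] == "clicked" for r in rows) / len(rows) if rows else 0.0


predictions = [{"requestId": "r1", "modelVersion": "feed-v3", "contentIds": ["a1", "a2", "a3"]}]
outcomes = [
    {"requestId": "r1", "contentId": "a2", "action": "clicked"},
    {"requestId": "r1", "contentId": "a3", "action": "dismissed"},
]
rows = build_training_rows(predictions, outcomes)
```

Here `rows` holds one labeled example per recommended item, ready to be written to the training store or aggregated into offline metrics.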

Essential Principles for a Robust and Trustworthy System

The value of this telemetry extends far beyond model training; it is also fundamental to operational observability. An AI feature should be treated as a complex distributed system that requires rigorous, real-time monitoring. This monitoring falls into three crucial categories: UX metrics, such as session length and conversion rates, which measure the feature’s impact on user behavior; model metrics, like score distributions and performance across different user segments, which assess the model’s health; and system metrics, including latency and error rates, which track the operational stability of the infrastructure.

To make this monitoring effective, every prediction must be tagged with rich metadata. Including a modelVersion and experimentId with every logged event is not optional but essential for rapid diagnosis and debugging. When a performance metric suddenly drops, this metadata allows teams to immediately pinpoint whether the issue is isolated to a new model version, a specific A/B test group, or users on a particular device type or network condition. This level of granularity transforms debugging from a search for a needle in a haystack into a precise, data-driven investigation.
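To show why this metadata matters, the sketch below slices a click-through metric by `modelVersion` and `experimentId`. It assumes labeled rows shaped like dicts with those keys plus a `label` field; the function name and the sample rows are hypothetical.

```python
from collections import defaultdict


def ctr_by_segment(rows, keys=("modelVersion", "experimentId")):
    """Click-through rate for each combination of the given metadata keys."""
    shown = defaultdict(int)
    clicked = defaultdict(int)
    for row in rows:
        # Rows missing a key are grouped under "unknown" instead of being dropped.
        segment = tuple(row.get(k, "unknown") for k in keys)
        shown[segment] += 1
        clicked[segment] += row["label"] == "clicked"
    return {segment: clicked[segment] / shown[segment] for segment in shown}


# Hypothetical rows: the newer model version clicks less often in this sample.
sample_rows = [
    {"modelVersion": "feed-v3", "experimentId": "exp-7", "label": "clicked"},
    {"modelVersion": "feed-v3", "experimentId": "exp-7", "label": "ignored"},
    {"modelVersion": "feed-v4", "experimentId": "exp-7", "label": "ignored"},
    {"modelVersion": "feed-v4", "experimentId": "exp-7", "label": "ignored"},
]
by_segment = ctr_by_segment(sample_rows)
```

The same grouping works for device type or network condition by passing different `keys`, provided those fields were logged with the event in the first place.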

Finally, a modern AI system must be built with trust at its core by integrating privacy and compliance by design. This requires moving from a reactive stance on data protection to a proactive one where safeguards are engineered into the architecture from the beginning. Actionable guardrails are critical for building a compliant and trustworthy system that legal and security teams can confidently endorse.

These guardrails include several non-negotiable practices. User identifiers must be pseudonymized, often using stable hashes, to protect personally identifiable information. Strict rules must be established to prevent the logging of sensitive raw content, such as user-generated text. All data collection must strictly adhere to the user’s consent status, and clear, enforceable data retention policies should be implemented to automatically purge data after a defined period. By embedding these principles into the system’s foundation, organizations can build powerful AI that respects user privacy.
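Three of these guardrails lend themselves to a short sketch: pseudonymizing identifiers with a keyed hash, gating collection on consent, and checking events against a retention window. The consent key name, the 90-day default, and the function names are assumptions for illustration; the actual values are policy decisions for legal and security teams.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Stable keyed hash: the same user always maps to the same token."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def may_collect(consent: dict) -> bool:
    """Collect only on an explicit opt-in; a missing flag means no."""
    return consent.get("analytics") is True  # "analytics" is an assumed key name


def is_expired(event_time: datetime, now: datetime, retention_days: int = 90) -> bool:
    """True when an event is older than the retention window and should be purged."""
    return now - event_time > timedelta(days=retention_days)


# Usage with placeholder values; a real key would come from a secrets manager.
token = pseudonymize("user-12345", secret_key=b"example-key-not-for-production")
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
old_event = datetime(2024, 1, 1, tzinfo=timezone.utc)
```

Using a keyed hash instead of a bare hash means that someone who knows a user ID but not the key cannot recompute the token, while the mapping stays stable enough to join events from the same user.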

The architecture outlined in this analysis provides a comprehensive blueprint for this new generation of mobile AI. It represents a move away from static, brittle models and toward dynamic, resilient systems that thrive on feedback. The principles discussed offer a pathway to features that not only launch successfully but also grow more intelligent and useful over time. This telemetry-driven approach establishes a sustainable and scalable framework in which AI systems adapt in tandem with the users they are designed to serve.