The seemingly straightforward decision to migrate a mission-critical system to the cloud can quietly introduce catastrophic risks that are only discovered after millions of dollars in transactions have been processed incorrectly. A “lift and shift” is often presented as a simple change of scenery for an application, moving it from on-premises servers to a cloud provider. However, this perspective dangerously underestimates the subtle environmental differences that can alter calculations, corrupt data, and disrupt services. The fundamental challenge is not just moving the application but guaranteeing that its behavior remains identical across thousands of complex business scenarios, a task for which traditional testing methods are ill-equipped.

How a Simple Lift and Shift Can Jeopardize Millions in Transactions

The stakes are highest when dealing with large-scale administrative systems, such as those processing tax returns or unemployment insurance claims. In these environments, even a one-cent rounding error, when multiplied across millions of transactions, can lead to significant financial discrepancies and legal liabilities. The hidden fallacy of a simple migration is the assumption that identical code will produce identical results in a different environment. Differences in operating system patches, library versions, or even CPU architecture can introduce subtle changes in application behavior that are nearly impossible to predict.
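To make the rounding risk concrete, here is a minimal sketch of how one cent of drift could accumulate. It assumes, purely for illustration, that the legacy system rounds half up while the migrated environment rounds half to even. The amount and the transaction count are hypothetical, and in practice only amounts that land exactly on a tie would be affected.

```python
from decimal import Decimal, ROUND_HALF_UP, ROUND_HALF_EVEN

CENT = Decimal("0.01")

def round_cents(amount: Decimal, mode: str) -> Decimal:
    """Round a monetary amount to whole cents using the given rounding mode."""
    return amount.quantize(CENT, rounding=mode)

# Hypothetical amount that sits exactly on a rounding tie.
amount = Decimal("10.125")

# Assumption: the two environments apply different rounding modes.
legacy = round_cents(amount, ROUND_HALF_UP)      # 10.13
migrated = round_cents(amount, ROUND_HALF_EVEN)  # 10.12

per_transaction_drift = legacy - migrated        # 0.01

# Worst case: every one of a million transactions hits the same tie.
transactions = 1_000_000
total_drift = per_transaction_drift * transactions

print(f"legacy={legacy} migrated={migrated} total drift={total_drift}")
```

Both results are defensible on their own, which is why a discrepancy of this kind tends to surface only when the two systems' outputs are compared side by side.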

This reality introduces the core challenge of any high-stakes migration: achieving functional parity. The objective is to prove, with verifiable certainty, that the new cloud-based system produces the exact same outputs as the legacy on-premises system given the same inputs. This guarantee must extend across every function, from simple data lookups to complex, multi-step batch processes that have been accumulating business logic for decades. The sheer scale and complexity make comprehensive manual validation an impractical and unreliable approach.

The Paradox of Legacy Systems: Too Big to Test, Too Critical to Fail

Legacy administrative systems are defined by their immense scale and intricacy. They often contain decades of accumulated business rules, reflecting years of legislative changes and evolving organizational policies. These systems handle massive daily transaction volumes and are entangled in a web of data dependencies that are frequently poorly documented. The logic governing a single transaction may traverse hundreds of lines of code and interact with dozens of database tables, creating a combinatorial explosion of potential execution paths that must be validated.

Traditional testing methodologies buckle under this weight. The manual creation of test cases to cover every possible permutation of business logic and data input is an impossible task, limited by time, resources, and human imagination. Furthermore, unit tests, while valuable for verifying isolated code components, are often blind to infrastructure-related regressions. A function might pass all its unit tests but fail in the new environment due to a latent dependency on a specific network configuration or storage performance characteristic that has changed during the migration.

The Solution: A Traffic Replay Architecture

A more effective approach is to treat the system as a “black box,” validating its behavior rather than its internal code. The central concept is a traffic replay architecture, which tests the system’s end-to-end transformation of inputs into outputs. Instead of relying on synthetic test cases, this method uses the system’s own production workload as a real-world test suite, so that validation covers the scenarios that actually occur in production.

This “Current-vs-New” paradigm operates by capturing a live production workload and replaying it against the migrated cloud environment. The results are then compared with the original production outcomes to detect any discrepancies. This approach was recently applied to the migration of a massive employment insurance system, a project involving 400 distinct functions, over 1,000 database tables, and a daily processing volume of 900,000 transactions. By leveraging real data, the testing process automatically covered obscure edge cases that no manual quality assurance process could ever anticipate.

Deconstructing the Pattern: How It Works in Practice

The implementation of this architecture follows a three-step process. The first step is the capture and sanitization of production artifacts. This involves collecting three essential components for each transaction: the raw input data (such as a batch file or request payload), the state of the database before the process runs, and the resulting output data after the process completes. A critical element of this stage is a robust PII masking pipeline, which anonymizes all personally identifiable information to ensure that sensitive data never leaves the production environment, maintaining compliance with privacy regulations.
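The masking step might look something like the sketch below. It assumes keyed hashing (HMAC-SHA256) as the masking technique, so that the same original value always maps to the same token and joins across tables still line up. The article does not say which technique was actually used, and the field names in `PII_FIELDS` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical set of columns treated as personally identifiable.
PII_FIELDS = {"ssn", "full_name", "email"}

def mask_value(value: str, key: bytes) -> str:
    """Replace a value with a deterministic, keyed pseudonym."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "masked_" + digest[:16]

def mask_record(record: dict, key: bytes) -> dict:
    """Return a copy of the record with PII fields masked and all others untouched."""
    masked = {}
    for name, value in record.items():
        if name in PII_FIELDS and value is not None:
            masked[name] = mask_value(str(value), key)
        else:
            masked[name] = value
    return masked

# The key would stay inside the production environment (assumption).
secret_key = b"example-key-kept-in-production"
captured = {"ssn": "123-45-6789", "full_name": "Jane Doe", "claim_amount": "412.50"}
sanitized = mask_record(captured, secret_key)
print(sanitized)
```

One caveat with this design: if the application validates the format of a masked field (for example, the length of an identifier), a format-preserving scheme would be needed instead, otherwise the replay itself could behave differently from production.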

Next, a replay engine standardizes the execution of these captured workloads. A key innovation in this pattern is treating legacy batch processes as if they were modern HTTP request-response interactions. By creating a universal testing harness that can “replay” the sanitized inputs against the new cloud environment, teams can use a single, consistent tool to test all 400 functions. This eliminates the need for creating and maintaining hundreds of bespoke testing scripts, dramatically simplifying the testing infrastructure.
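The idea of modelling every function, batch or online, as a request-response pair can be sketched as follows. All names here (`CapturedCase`, `replay`, `fake_cloud_target`) are hypothetical, and the target is a stand-in callable. A real harness would also restore the captured database state before each run, which this sketch omits.

```python
import time
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class CapturedCase:
    """One sanitized production transaction, modelled as a request/response pair."""
    function_name: str
    input_payload: bytes      # batch file contents or request body
    expected_output: bytes    # output recorded from the legacy system

@dataclass(frozen=True)
class ReplayResult:
    case: CapturedCase
    actual_output: bytes
    elapsed_seconds: float

    @property
    def matches(self) -> bool:
        return self.actual_output == self.case.expected_output

# Any function in the migrated system is reached through the same call shape.
Target = Callable[[str, bytes], bytes]

def replay(case: CapturedCase, target: Target) -> ReplayResult:
    """Send the captured input to the target and record output and timing."""
    start = time.perf_counter()
    actual = target(case.function_name, case.input_payload)
    elapsed = time.perf_counter() - start
    return ReplayResult(case, actual, elapsed)

def fake_cloud_target(function_name: str, payload: bytes) -> bytes:
    # Stand-in for the migrated environment; a real target might submit a
    # batch job or issue an HTTP request and return the produced output.
    return payload.upper()

case = CapturedCase("calc_benefit", b"claim-001", b"CLAIM-001")
result = replay(case, fake_cloud_target)
print(result.matches)
```

Because every function is invoked through the same `Target` signature, supporting another function means adding captured cases rather than writing another script.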

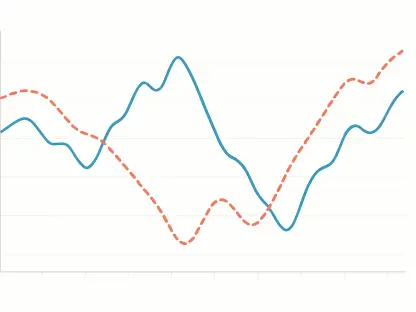

The final step is automated validation using a sophisticated “diff” engine. This engine moves beyond checking for simple success or failure codes and performs a deep inspection of the resulting data. The validation rests on three pillars of comparison: binary file diffs to ensure output files are identical, bit-for-bit; database row diffs to verify that all data modifications are exactly the same; and performance diffs to flag any transactions that take significantly longer to execute in the cloud environment, which could indicate underlying infrastructure issues.
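The three comparisons can be sketched as small, independent checks. The function names, the keyed-row representation, and the 1.5x slowdown tolerance are assumptions made for illustration, not details taken from the project described here.

```python
import hashlib

def files_identical(expected: bytes, actual: bytes) -> bool:
    """Binary diff: compare digests, useful when only a hash of the baseline is stored."""
    return hashlib.sha256(expected).digest() == hashlib.sha256(actual).digest()

def diff_rows(expected: dict, actual: dict) -> dict:
    """Row diff: both arguments map a primary key to that row's column values."""
    shared = expected.keys() & actual.keys()
    return {
        "missing": sorted(expected.keys() - actual.keys()),
        "unexpected": sorted(actual.keys() - expected.keys()),
        "changed": sorted(key for key in shared if expected[key] != actual[key]),
    }

def is_slower(baseline_seconds: float, actual_seconds: float, tolerance: float = 1.5) -> bool:
    """Performance diff: flag runs slower than the baseline by more than the tolerance factor."""
    return actual_seconds > baseline_seconds * tolerance

# Hypothetical rows from the legacy run and from the cloud run.
legacy_rows = {1: {"payment_amount": "100.00"}, 2: {"payment_amount": "55.10"}}
cloud_rows = {1: {"payment_amount": "100.01"}, 3: {"payment_amount": "20.00"}}

row_report = diff_rows(legacy_rows, cloud_rows)
print(files_identical(b"report-v1", b"report-v1"), row_report, is_slower(2.0, 3.5))
```

Keeping the checks separate lets a single replay fail on performance alone while its data still matches, which is the case that points at infrastructure rather than logic.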

Overcoming the Final Hurdle: Taming Non-Deterministic Data

A significant challenge in automated comparison is managing non-deterministic data, which can create validation “noise” by flagging differences that are not actual bugs. Common sources of this noise include timestamps, which will naturally differ between two separate runs, and auto-incrementing sequence IDs or session keys, which are expected to change. If not handled properly, these benign discrepancies can overwhelm the validation process with false positives, rendering it useless.

The solution is a schema-aware diff engine. This tool can be configured with specific rules to intelligently ignore expected variations in certain fields while maintaining strict validation on business-critical ones. For instance, the engine can be instructed to overlook changes in an updated_at column but immediately flag any difference, no matter how small, in a payment_amount or tax_rate field. This intelligence allows the system to distinguish between harmless environmental artifacts and genuine, high-impact regressions.
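A minimal sketch of such a rule-driven comparison is shown below. The column names `updated_at`, `payment_amount`, and `tax_rate` come from the example above. The table name `payments`, the `session_key` column, and the shape of the rule configuration are assumptions.

```python
# Hypothetical rule set: per table, the columns whose values are expected to vary.
IGNORE_RULES = {
    "payments": {"updated_at", "session_key"},
}

_MISSING = object()  # marks a column absent from one side of the comparison

def diff_row(table: str, expected: dict, actual: dict, rules: dict = IGNORE_RULES) -> dict:
    """Return {column: (expected, actual)} for every non-ignored column that differs."""
    ignored = rules.get(table, set())
    columns = (expected.keys() | actual.keys()) - ignored
    differences = {}
    for column in sorted(columns):
        before = expected.get(column, _MISSING)
        after = actual.get(column, _MISSING)
        if before != after:
            differences[column] = (before, after)
    return differences

legacy_row = {
    "payment_amount": "250.00",
    "tax_rate": "0.0750",
    "updated_at": "2024-01-01T00:00:00",
}
cloud_row = {
    "payment_amount": "250.01",
    "tax_rate": "0.0750",
    "updated_at": "2024-03-05T09:30:00",
}

report = diff_row("payments", legacy_row, cloud_row)
print(report)
```

Tables without an entry in the rule set are compared strictly on every column, so a new table is never silently exempted from validation.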

The Results: From Months of Manual Effort to Weeks of Automated Validation

The implementation of this standardized testing pattern delivered substantial gains in both velocity and coverage. The engineering team validated all 400 functions and more than 1,000 tables in just two weeks, a task previously estimated to require several months of intensive manual effort. More importantly, because it used actual production data, the testing automatically covered complex and obscure edge cases that no quality assurance engineer could realistically have predicted or reproduced by hand.

This automated approach also provided a level of pre-launch confidence that was previously unattainable. The performance diffing capability identified several infrastructure bottlenecks, such as suboptimal database indexing and network latency issues, allowing them to be resolved before go-live rather than after they had affected users. Validation shifted from approximation and educated guessing to evidence-based verification, providing concrete proof that, for the replayed production workload, the migrated system produced the same outputs as its predecessor. The project could therefore proceed to go-live backed by empirical data rather than hope.