In large-scale data processing, the hardest obstacle often appears not during model training but in the seemingly simple task of writing the final output to storage. A pipeline that turns raw data into usable features can stall at this last step, where I/O bottlenecks derail production runs and turn a routine operation into a performance engineering problem. The issue is particularly acute for sparse data structures: they are memory-efficient for computation, but serializing and writing them can push even robust distributed systems to their limits.

The Hidden Bottleneck When Saving Data Is Harder Than Training

Within many machine learning workflows, a critical yet frequently underestimated challenge lies in the input/output (I/O) operations that bookend the core logic. While significant engineering effort is dedicated to optimizing model performance and training speed, the process of saving the resulting data can become a primary bottleneck. This is especially true for outputs like the high-dimensional feature sets generated by a CountVectorizer, which often take the form of multi-gigabyte sparse DataFrames. These structures are designed for computational efficiency but can trigger catastrophic failures when written to disk using standard methods.

The problem escalates when legacy systems impose strict format requirements, such as mandating a raw CSV file. This constraint forces the materialization of a memory-efficient sparse object into a dense, text-based format, a process that is notoriously resource-intensive. The resulting I/O demand can easily overwhelm system memory and storage bandwidth, causing prolonged execution times or, more commonly, out-of-memory (OOM) errors that terminate the process entirely. Consequently, what appears to be a simple “save” command evolves into a complex performance engineering task.
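To make the cost of densification concrete, the following back-of-the-envelope sketch compares the in-memory size of a dense matrix with an approximate sparse footprint. The shape, the number of non-zero cells per row, and the byte sizes (8-byte values, 4-byte indices) are illustrative assumptions, not figures from the pipeline described here.

```python
def dense_bytes(n_rows: int, n_cols: int, itemsize: int = 8) -> int:
    """Bytes needed to hold every cell of an n_rows x n_cols matrix."""
    return n_rows * n_cols * itemsize


def sparse_bytes(n_rows: int, nnz_per_row: int,
                 itemsize: int = 8, index_itemsize: int = 4) -> int:
    """Rough sparse footprint: one value plus one index per non-zero cell.

    This ignores per-column bookkeeping, so it is a lower-bound estimate.
    """
    return n_rows * nnz_per_row * (itemsize + index_itemsize)


# Hypothetical feature matrix: 1M documents, 50k vocabulary terms,
# and about 50 distinct terms per document.
rows, cols, nnz_per_row = 1_000_000, 50_000, 50

dense = dense_bytes(rows, cols)
sparse = sparse_bytes(rows, nnz_per_row)

print(f"dense : {dense / 1e9:.1f} GB")
print(f"sparse: {sparse / 1e9:.1f} GB")
print(f"ratio : {dense / sparse:.0f}x")
```

Under these assumptions the dense intermediate is several hundred times larger than the sparse object, which is why a write path that densifies everything at once tends to exhaust memory long before any bytes reach storage.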

The High-Stakes Challenge of Juggling Speed, Scale, and Order

The real-world complexity of this I/O problem is defined by a set of non-negotiable requirements that elevate the stakes far beyond a simple file write. In production environments, writing data to a destination like Amazon S3 is governed by strict service-level agreements (SLAs) that demand both speed and reliability. Leveraging parallel processing is not merely an optimization but a necessity to ensure that data pipelines complete within their allotted timeframes, preventing cascading delays in downstream processes.

Furthermore, the integrity of the data output often depends on preserving the exact sequence of records. Maintaining a deterministic row order is crucial for reproducibility and for ensuring that data can be correctly joined with other datasets or appended to existing tables without introducing inconsistencies. This mandate for order means that any parallelization strategy must be carefully orchestrated to avoid shuffling rows, adding a significant layer of complexity to the solution design. These combined constraints—speed, scale, and strict ordering—create a formidable technical challenge that standard tools are ill-equipped to handle.
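One simple way to keep row order recoverable when output is split across several files is to encode the chunk position in a zero-padded file name, so that a plain lexicographic listing matches the original row order. The sketch below illustrates the idea; the `part-` prefix, the minimum padding width, and the helper name `part_name` are hypothetical choices, not details taken from the original pipeline.

```python
def part_name(index: int, total: int, prefix: str = "part") -> str:
    """Return a file name whose lexicographic order matches chunk order."""
    # Pad to at least 5 digits, or more if the chunk count requires it.
    width = max(5, len(str(total - 1)))
    return f"{prefix}-{index:0{width}d}.csv"


total_chunks = 12
names = [part_name(i, total_chunks) for i in range(total_chunks)]

# Unpadded names sort incorrectly: "part-10.csv" comes before "part-2.csv".
unpadded = [f"part-{i}.csv" for i in range(total_chunks)]

print(names[:3])
print(sorted(names) == names)        # padded names keep their order
print(sorted(unpadded) == unpadded)  # unpadded names do not
```

With names like these, a downstream consumer can list the output prefix, sort the keys as strings, and read the files back in the original sequence regardless of which write finished first.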

A Tour of Common Solutions and Why They Fail at Scale

Initial attempts to solve this problem naturally gravitate toward conventional, high-level frameworks. A direct .to_csv() call on a massive sparse DataFrame is the most straightforward approach, but it almost inevitably leads to an OOM error as the system attempts to materialize the entire dense representation in memory. This method, while simple, fails to scale beyond moderately sized datasets, quickly becoming a dead end in enterprise-level applications.

Turning to distributed computing frameworks like Dask or Spark seems like the logical next step. However, this path has pitfalls of its own. High-level abstractions in these ecosystems often lack native support for the pandas sparse dtype, forcing an implicit and memory-explosive conversion to a dense format behind the scenes. This conversion negates the primary benefit of using a sparse structure in the first place. The serialization overhead required to move these newly densified objects between workers further undermines performance, often resulting in effectively sequential writes despite the distributed architecture.

An Engineer’s Breakthrough with RDD-Level Orchestration

The turning point in solving this persistent issue came from a fundamental shift in perspective. Instead of forcing distributed frameworks to natively understand and process the sparse DataFrame, a more effective strategy involves using the framework purely as an orchestrator for the write operations. This approach recognizes the limitations of high-level abstractions and leverages the low-level power of the distributed engine to manage workload distribution without becoming entangled in data-type incompatibilities.

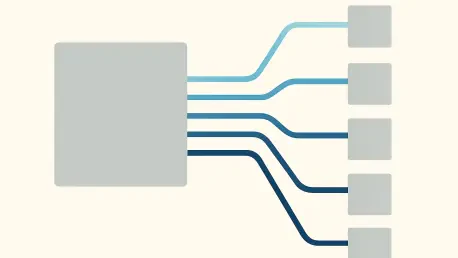

The core insight is to keep the large sparse DataFrame intact within the memory of a single, sufficiently provisioned worker node. Rather than attempting to partition and distribute the data itself—a process that triggers the problematic serialization and densification—the system distributes the instructions to write specific portions of the data. By treating the DataFrame as a static asset and parallelizing the I/O tasks that read from it, the memory explosion is completely avoided. This strategy transforms the problem from one of data manipulation to one of task parallelization, allowing Spark’s Resilient Distributed Dataset (RDD) API to shine.

The Blueprint for Success Through Chunked Parallel Writes

The implementation of this strategy follows a clear, step-by-step blueprint. First, the massive sparse DataFrame is logically partitioned into smaller, fixed-length row chunks. The size of each chunk is a configurable parameter, selected to ensure that processing a single unit remains well within the memory capacity of an individual executor. This chunking process creates manageable and independent units of work that are perfectly suited for parallel execution.
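The logical partitioning step can be sketched as a small helper that produces half-open row ranges. The function name `chunk_ranges` and the example sizes are hypothetical; in practice the chunk size would be tuned to the memory available to each executor.

```python
from typing import List, Tuple


def chunk_ranges(n_rows: int, chunk_size: int) -> List[Tuple[int, int]]:
    """Split [0, n_rows) into consecutive half-open (start, stop) ranges."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [
        (start, min(start + chunk_size, n_rows))
        for start in range(0, n_rows, chunk_size)
    ]


# Example: 10 rows in chunks of 4; the last chunk is shorter.
print(chunk_ranges(10, 4))
```

Because each range is just a pair of integers, the description of the work stays tiny no matter how large the underlying DataFrame is, and the ranges cover every row exactly once in order.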

Next, each chunk is treated as a self-contained write operation, destined for its own separate CSV file in a designated output directory on S3. This isolation guarantees a predictable memory footprint for each task and prevents interference between concurrent writes. Finally, Spark’s SparkContext.parallelize() method (typically invoked as sc.parallelize()) is used to turn the list of write tasks into an RDD, distributing these independent operations across the cluster’s executors. Because each executor handles the I/O for only a small, pre-defined chunk, densification is confined to one chunk at a time, and the writes run in parallel without the risk of memory exhaustion or serialization bottlenecks.
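The write step might look roughly like the sketch below. To keep it runnable without a cluster, a thread pool stands in for Spark's executors and a temporary local directory stands in for the S3 prefix; both are substitutions, not the setup described in this article. The tiny DataFrame, the chunk size, the file naming, and the choice to write the header only in the first file are likewise illustrative assumptions.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor

import pandas as pd

# Hypothetical small stand-in for the large sparse feature DataFrame.
features = pd.DataFrame(
    {"f0": [0, 1, 0, 0, 2, 0, 0], "f1": [0, 0, 0, 3, 0, 0, 4]}
).astype(pd.SparseDtype("int64", 0))

out_dir = tempfile.mkdtemp()  # stand-in for an S3 output prefix
chunk_size = 3                # rows per output file (assumed value)

# Each task is (chunk index, start row, stop row): instructions, not data.
tasks = [
    (i, start, min(start + chunk_size, len(features)))
    for i, start in enumerate(range(0, len(features), chunk_size))
]


def write_chunk(task):
    """Densify one row slice and write it to its own CSV file."""
    index, start, stop = task
    # Only this slice is converted to dense, so memory use is bounded
    # by the chunk size rather than by the full dataset.
    dense = features.iloc[start:stop].sparse.to_dense()
    path = os.path.join(out_dir, f"part-{index:05d}.csv")
    dense.to_csv(path, index=False, header=(index == 0))
    return path


# Thread pool used here in place of Spark executors.
with ThreadPoolExecutor(max_workers=4) as pool:
    paths = list(pool.map(write_chunk, tasks))

# With PySpark, the equivalent dispatch would be along the lines of:
#   sc.parallelize(tasks, len(tasks)).map(write_chunk).collect()
# How each executor reaches the DataFrame is left open in this sketch.
print(paths)
```

Reading the part files back in sorted name order and concatenating them reproduces the original rows in sequence, which is the property the ordering requirement depends on.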

Quantified Gains and Broader Implications

The performance improvement from adopting this chunked, parallel write architecture was transformative. Jobs that previously ran for four to six hours before ultimately crashing completed in a matter of minutes. The runtime for writing a 40 GB sparse feature set dropped dramatically, and the system scaled linearly, handling workloads of up to 80 GB while preserving row order exactly. This approach effectively decoupled the total dataset size from the memory requirements of a single worker, making the solution robust and scalable.

This experience underscored a valuable lesson for data engineers working with distributed systems like AWS Glue or EMR. While high-level abstractions such as DynamicFrames or Spark SQL offer convenience, the most performant and reliable solutions sometimes require dropping down to a lower level of control. For complex I/O challenges involving specialized data structures like sparse DataFrames, leveraging the RDD API to orchestrate standard Python logic proved superior. By chunking the data and using Spark to distribute the writing workload rather than the data itself, engineers could overcome the inherent limitations of higher-level tools and build truly efficient data pipelines.