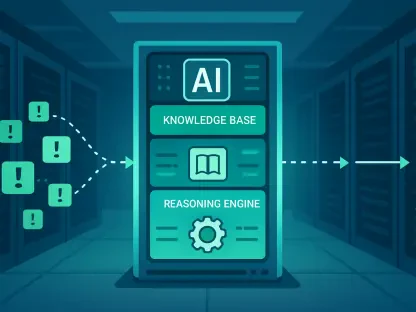

The sudden failure of a critical data pipeline often triggers a high-pressure, chaotic response that resembles a cybersecurity incident: stale dashboards and delayed data can have immediate and significant business consequences. For the on-call engineer, the real cost is not retrying the job. It is gathering context across disparate tools (CloudWatch logs, support tickets, chat channels, and the Airflow UI) while working out the safest path to recovery.

Many generative AI pilots in data operations have remained passive observers. They can explain a problem, but they cannot reliably pull operational data, correlate failures across multiple runs, or propose a secure, auditable action. The Model Context Protocol (MCP) helps close this gap by standardizing how an AI agent discovers and calls external tools, so that every capability the agent has is exposed through a defined, reviewable interface. By reframing DataOps as a structured incident response workflow, teams can turn manual runbook steps into a set of bounded, agent-callable tools, enabling faster, safer, and more controlled recovery with human approval at its core.

1. Identification and Assessment for Rapid Response

The first phase of any incident response is detection, where the goal is to turn ambiguous system signals into a clearly defined, actionable incident: recognize that a failure has occurred and identify the specific pipeline run that needs investigation. At first, detection can be simple, using a failed Directed Acyclic Graph (DAG) run as the trigger. An MCP server can expose tools such as list_recent_failures and list_failed_runs, which let an agent query for failures within a fixed lookback window, for instance the last 60 minutes. This programmatic approach removes much of the manual log watching and dashboard checking, so the response starts immediately and stays focused. As the system matures, these simple triggers can be extended with alerts based on data freshness or Service Level Agreement (SLA) violations, making the framework more proactive by surfacing problems before they escalate into critical failures.
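The detection step can be sketched as a plain Python function. The tool name list_recent_failures and the 60-minute window come from the text; the DagRun record, its fields, and the in-memory data are hypothetical stand-ins for what a real server would read from Airflow. Registering the function as an MCP tool (for instance with the MCP Python SDK's FastMCP `@mcp.tool()` decorator) is left out so the sketch runs with the standard library alone.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class DagRun:
    """Simplified DAG run record (hypothetical shape, not an Airflow class)."""
    dag_id: str
    run_id: str
    state: str                      # e.g. "success", "failed", "running"
    end_date: Optional[datetime]


def list_recent_failures(runs: Iterable[DagRun],
                         now: Optional[datetime] = None,
                         window_minutes: int = 60) -> List[DagRun]:
    """Return runs that failed within the lookback window, newest first."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(minutes=window_minutes)
    failed = [
        r for r in runs
        if r.state == "failed" and r.end_date is not None and r.end_date >= cutoff
    ]
    return sorted(failed, key=lambda r: r.end_date, reverse=True)


# Usage with in-memory data; a real server would read runs from Airflow.
now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
runs = [
    DagRun("orders_daily", "r1", "failed", now - timedelta(minutes=10)),
    DagRun("orders_daily", "r0", "failed", now - timedelta(hours=3)),
    DagRun("clicks_hourly", "r7", "success", now - timedelta(minutes=5)),
]
print([r.run_id for r in list_recent_failures(runs, now=now)])  # ['r1']
```

Keeping the window as a parameter lets the same tool serve both a tight on-call query and a wider lookback later, without giving the agent any broader query capability.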

Once an incident has been identified, the next step is triage: rapidly assessing the severity and scope of the failure to guide the response. The goal is to answer two questions, “What failed?” and “How urgent is it?”, within seconds rather than minutes. Atomic tools make this possible: get_run_summary returns key run metadata such as task states and start and end times, and get_failed_tasks pinpoints the tasks that did not complete successfully. A failure_frequency tool can then examine the lookback history to distinguish a transient, one-off glitch from a persistent, recurring problem. The output of triage should be a concise, decision-friendly summary rather than a verbose log dump. It should highlight the first failing task, the number of retry attempts, and any business context tags such as tier=1 or business_critical, so engineers can prioritize their effort and keep stakeholders informed without wading through extraneous detail.
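A minimal sketch of the triage tools and the summary they feed, assuming a simplified task-instance record and a lookback history of (dag_id, state) pairs. The tool names get_failed_tasks and failure_frequency come from the text; triage_summary, the "recurring" threshold of 3, and the priority labels are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class TaskInstance:
    """Simplified task-instance record (hypothetical shape)."""
    task_id: str
    state: str         # e.g. "success", "failed", "upstream_failed"
    try_number: int    # attempts made so far
    order: int         # position in execution order


def get_failed_tasks(tasks: Iterable[TaskInstance]) -> List[TaskInstance]:
    """Tasks that failed themselves; upstream_failed tasks are symptoms, not causes."""
    return sorted((t for t in tasks if t.state == "failed"), key=lambda t: t.order)


def failure_frequency(history: Iterable[Tuple[str, str]], dag_id: str) -> int:
    """Count failed runs of dag_id in a lookback history of (dag_id, state) pairs."""
    return sum(1 for d, state in history if d == dag_id and state == "failed")


def triage_summary(dag_id: str, run_id: str, tasks: List[TaskInstance],
                   history: List[Tuple[str, str]], tags: Set[str]) -> Dict[str, object]:
    failed = get_failed_tasks(tasks)
    first = failed[0] if failed else None
    recent = failure_frequency(history, dag_id)
    return {
        "dag_id": dag_id,
        "run_id": run_id,
        "first_failed_task": first.task_id if first else None,
        "attempts": first.try_number if first else 0,
        "failed_task_count": len(failed),
        # Threshold of 3 failures in the lookback is an arbitrary example.
        "pattern": "recurring" if recent >= 3 else "likely transient",
        "priority": "high" if tags & {"tier=1", "business_critical"} else "normal",
    }


tasks = [
    TaskInstance("extract", "success", 1, 0),
    TaskInstance("transform", "failed", 3, 1),
    TaskInstance("load", "upstream_failed", 0, 2),
]
history = [("orders_daily", "failed")] * 3 + [("orders_daily", "success")]
print(triage_summary("orders_daily", "r1", tasks, history, {"tier=1"}))
```

The summary is a small dictionary rather than log text, which is what makes it cheap for the agent to reason over and safe to paste into a stakeholder update.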

2. Investigation and Resolution with Governed Actions

The diagnostic phase is dedicated to finding the root cause of the failure, a task that traditionally means combing manually through long and often cryptic log files. The challenge is to isolate the real error message from the surrounding noise quickly and precisely. Tools such as task_log and extract_errors help here. A well-designed task_log tool does not return the entire log file; it fetches only the most recent N lines, which reduces both data transfer and the cognitive load on the engineer. This retrieval must also be governed by strict guardrails: redacting sensitive values such as API keys and passwords, capping the total output size to limit how much log content can leave the system, and preferably returning only the relevant exception block or error signature rather than raw data payloads. The result is a diagnostic process that is both fast and secure, surfacing what is needed to understand the problem without exposing the system to unnecessary risk.
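The guardrails described above (tail only, redact, cap, prefer the exception block) can be sketched as follows. The redaction patterns, the 2,000-character cap, and the traceback heuristic are assumptions for illustration; a production redactor would need patterns matched to the secrets actually present in your logs.

```python
import re
from collections import deque
from typing import Iterable

# Hypothetical redaction rules: (pattern, replacement). Extend for your own secret formats.
REDACTIONS = [
    (re.compile(r"(?i)\b(password|passwd|api[_-]?key|secret|token)(\s*[=:]\s*)\S+"),
     r"\1\2[REDACTED]"),
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED]"),  # AWS access key ID format
]
MAX_CHARS = 2000  # assumed output cap


def redact(line: str) -> str:
    for pattern, replacement in REDACTIONS:
        line = pattern.sub(replacement, line)
    return line


def task_log(lines: Iterable[str], n: int = 200) -> str:
    """Return the last n log lines, redacted and capped at MAX_CHARS (keeping the end)."""
    tail = deque(lines, maxlen=n)
    text = "\n".join(redact(line) for line in tail)
    return text[-MAX_CHARS:]


def extract_errors(text: str) -> str:
    """Return everything from the last Python traceback onward, else the ERROR lines."""
    lines = text.splitlines()
    starts = [i for i, line in enumerate(lines)
              if "Traceback (most recent call last):" in line]
    if starts:
        return "\n".join(lines[starts[-1]:])
    return "\n".join(line for line in lines if "ERROR" in line)


raw = [
    "INFO - Starting task transform",
    "INFO - Connecting with password=hunter2",
    "ERROR - Task failed with exception",
    "Traceback (most recent call last):",
    '  File "etl.py", line 42, in run',
    "KeyError: 'customer_id'",
]
log = task_log(raw, n=5)
print(extract_errors(log))
```

Redaction runs before the cap so that truncation can never leave a partially visible secret, and the cap keeps the end of the log because that is where the failure usually appears.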

After diagnosis, the focus shifts to remediation, where the findings are translated into the smallest, safest recovery action possible. In this framework, remediation starts with a single, tightly controlled action: triggering a DAG rerun. Amazon Managed Workflows for Apache Airflow (MWAA) supports this programmatically through its CLI token workflow, in which the CreateCliToken API issues a short-lived token for running Airflow CLI commands against the environment's web server. The MCP toolset for this stage includes propose_action, which formulates a recovery plan from the error signature, and request_approval, which gates execution. The trigger_dag tool runs only after explicit human approval, making that approval the critical safety check. The action is further protected by non-negotiable guardrails: a DAG allowlist ensures that only specific, pre-approved pipelines can be triggered; configuration parameters are validated against a schema to reject arbitrary inputs; environment-specific rules enforce stricter controls in production; and an audit log records who requested the action, who approved it, and the final outcome, ensuring full accountability.

3. Confirmation and Continuous Improvement

After a remedial action has run, it is essential to verify that the system has actually returned to a healthy state rather than assuming the fix worked. This verification step is a feedback loop that confirms recovery and keeps the system out of a cycle of failed retries. Verification tools include get_latest_run, which confirms that a new run has started, and get_task_states, which checks whether critical tasks in the new run have reached a success state. A higher-level tool, check_recovery_rules, can encapsulate business logic to give a definitive recovery verdict. Simple but effective rules include confirming that a new successful run exists, that previously failed tasks now succeed, and that no new failures appear within a specified window after the intervention. If these checks fail, the agent should not attempt random, unguided retries; it should escalate the incident to a human operator for further analysis, so that automated actions cannot make the situation worse.
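The three recovery rules can be combined into a single verdict function, sketched here under stated assumptions: RunSnapshot is a hypothetical, simplified run record, the 30-minute watch window is an arbitrary default, and "escalate" is meant to hand the incident to a human rather than trigger another retry.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RunSnapshot:
    """Simplified view of a DAG run (hypothetical shape)."""
    run_id: str
    state: str                  # "success", "failed", "running", ...
    start_date: datetime
    task_states: Dict[str, str]  # task_id -> state


def check_recovery_rules(runs: List[RunSnapshot], intervention_at: datetime,
                         previously_failed: List[str], now: datetime,
                         watch_minutes: int = 30) -> Tuple[str, str]:
    """Return (verdict, reason); verdict is "recovered", "pending", or "escalate".

    "escalate" means hand the incident to a human; the agent does not retry.
    """
    deadline = intervention_at + timedelta(minutes=watch_minutes)
    new_runs = [r for r in runs if intervention_at <= r.start_date <= deadline]
    if any(r.state == "failed" for r in new_runs):
        return "escalate", "a run failed after the intervention"
    if not new_runs:
        if now > deadline:
            return "escalate", "no new run started within the watch window"
        return "pending", "waiting for a new run"
    latest = max(new_runs, key=lambda r: r.start_date)
    if latest.state != "success":
        if now > deadline:
            return "escalate", "new run did not succeed within the watch window"
        return "pending", f"new run is {latest.state}"
    unrecovered = [t for t in previously_failed if latest.task_states.get(t) != "success"]
    if unrecovered:
        return "escalate", f"tasks still not successful: {unrecovered}"
    return "recovered", "new run succeeded, including previously failed tasks"


t0 = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
runs = [
    RunSnapshot("r1", "failed", t0 - timedelta(minutes=40), {"transform": "failed"}),
    RunSnapshot("r2", "success", t0 + timedelta(minutes=1),
                {"transform": "success", "load": "success"}),
]
print(check_recovery_rules(runs, t0, ["transform"], now=t0 + timedelta(minutes=20)))
# ('recovered', 'new run succeeded, including previously failed tasks')
```

Returning a reason alongside the verdict gives the human who receives an escalation a starting point instead of a bare "failed".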

The final stage of the incident response lifecycle is the postmortem, which turns the lessons of a single event into long-term preventive measures. The goal is to capture the incident’s story, from detection to resolution, without pasting raw, unredacted logs into a document. A build_timeline tool can construct the sequence of events programmatically, while summarize_root_cause can use the error signature and contextual data to produce a concise explanation of the failure. Most importantly, a suggest_prevention_actions tool can propose concrete improvements based on the incident’s characteristics, for example adding a pre-check task that validates upstream data availability and permissions, introducing schema contracts to enforce data quality, refining alert routing based on DAG tier, or formalizing a safe rerun playbook with mandatory approvals. A key guardrail for this stage is that all postmortem artifacts are generated from redacted summaries, so sensitive information never ends up in long-term documentation.
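A minimal sketch of two postmortem tools, assuming events arrive as (timestamp, actor, description) tuples. The tool names build_timeline and suggest_prevention_actions come from the text; the redaction pattern and the signature-to-suggestion rules are hypothetical and would need to reflect your own failure history. summarize_root_cause is omitted because it depends on how the model is prompted.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

# Hypothetical redaction pattern, in the same style as the diagnostic step.
SECRET = re.compile(r"(?i)\b(password|passwd|api[_-]?key|secret|token)(\s*[=:]\s*)\S+")

# Hypothetical mapping from error-signature patterns to prevention suggestions.
PREVENTION_RULES = [
    (re.compile(r"AccessDenied|Forbidden|permission", re.I),
     "Add a pre-check task that verifies permissions on upstream sources."),
    (re.compile(r"NoSuchKey|FileNotFound|not found", re.I),
     "Add a pre-check task that confirms upstream data is available."),
    (re.compile(r"KeyError|schema|column", re.I),
     "Introduce a schema contract for the upstream dataset."),
]

Event = Tuple[datetime, str, str]  # (timestamp, actor, description)


def redact(text: str) -> str:
    return SECRET.sub(r"\1\2[REDACTED]", text)


def build_timeline(events: List[Event]) -> List[str]:
    """Order events chronologically and redact each description."""
    return [f"{ts.isoformat()} [{actor}] {redact(desc)}"
            for ts, actor, desc in sorted(events, key=lambda e: e[0])]


def suggest_prevention_actions(error_signature: str, dag_tier: int) -> List[str]:
    suggestions = [text for pattern, text in PREVENTION_RULES
                   if pattern.search(error_signature)]
    if dag_tier == 1:
        suggestions.append("Route alerts for this tier-1 DAG to the primary on-call rotation.")
    suggestions.append("Add this failure to the safe rerun playbook, including its approval step.")
    return suggestions


t0 = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
events = [
    (t0 + timedelta(minutes=7), "bob", "Approved rerun act-1"),
    (t0, "agent", "Detected failed run r1 of orders_daily"),
    (t0 + timedelta(minutes=3), "agent", "Diagnosed KeyError; connection used token=abc123"),
]
for line in build_timeline(events):
    print(line)
print(suggest_prevention_actions("KeyError: 'customer_id'", dag_tier=1))
```

Because redaction happens inside build_timeline, nothing downstream (the postmortem document, a ticket, a chat summary) ever receives the unredacted description.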

A Blueprint for Resilient Data Operations

Treating data pipeline failures through the structured lens of incident response yields a clear, repeatable blueprint: Detect, Triage, Diagnose, Remediate, Verify, and Postmortem. With the Model Context Protocol, an AI assistant can be connected to operational systems such as MWAA and CloudWatch through a small, carefully governed set of tools. This reduces the “swivel-chair” workload of engineers without granting unsafe, unrestricted access to critical infrastructure. The strategy starts with read-only tools for visibility, then cautiously introduces a single write action, triggering a DAG, placed behind layers of approvals, allowlists, validation, and auditing. This incremental, controlled approach is what moves the AI from a passive conversationalist to an active, trusted partner in operating complex data pipelines reliably and securely.