Researchers at the Technion (Israel Institute of Technology) have introduced a software package called PyPIM (Python Processing-in-Memory) that enables in-memory computing, in which computers perform processing operations directly within their memory units. By reducing the energy- and time-consuming data transfers between the CPU and memory, this approach aims to improve the efficiency and speed of computing processes.

The In-Memory Computing Paradigm

The traditional computer architecture relies heavily on the CPU to execute operations on data stored in memory. This model often results in a bottleneck due to the constant data transfer between the CPU and memory. In-memory computing addresses this issue by allowing certain computations to be performed directly within the memory itself, thereby reducing the need for data transfers and enhancing overall system performance.

Reducing Data Transfer Bottlenecks

A significant bottleneck in traditional computing architectures is the repeated transfer of data between the CPU and memory units. These transfers consume substantial time and energy, which hurts both power consumption and processing speed. In-memory computing sidesteps the issue by executing computational tasks directly within the memory, sharply reducing data movement. This not only accelerates performance but also opens new avenues for optimizing computing tasks that were previously limited by the CPU-memory bottleneck.

These efficiency gains are particularly relevant in fields that must process large volumes of data quickly, such as artificial intelligence and big data analytics. When computation happens within the memory, the CPU has less data to move and less work to do, which speeds up complex tasks. This addresses the growing need for more powerful and efficient computing in today's data-driven world. In short, reducing data transfer bottlenecks changes how processing is organized and provides a foundation for more capable systems.

Technological Advancements in PyPIM

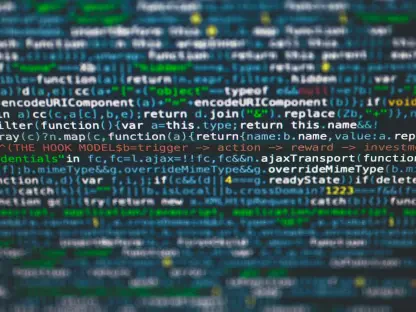

Researchers at Technion have developed an interface that translates Python commands into machine commands executable within memory. This new platform, PyPIM, provides software libraries that facilitate the development of in-memory computing applications using popular programming languages like Python. By integrating in-memory computing capabilities into existing systems, PyPIM enhances computational efficiency and performance.

The platform offers a user-friendly interface that lets developers leverage in-memory computing without extensive hardware expertise. Its libraries are designed to work with Python, one of the most widely used programming languages in the tech industry. This accessibility suits PyPIM to a broad range of applications, from academic research to commercial implementations. By bridging the gap between advanced hardware capabilities and the practical demands of software development, PyPIM marks a notable step in the evolution of computer architecture.
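To make the idea of translating Python commands into memory-side commands more concrete, here is a minimal toy sketch. It is not PyPIM's actual API: the names `ToyMemory`, `ToyTensor`, and the `ADD`/`MUL` command strings are hypothetical, invented only for illustration. The point is that ordinary Python operators can be overloaded so that each expression issues a command to the memory rather than pulling the operands into the CPU.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class ToyMemory:
    """Hypothetical stand-in for a memory array that executes commands itself."""
    rows: dict[str, list[int]] = field(default_factory=dict)
    log: list[str] = field(default_factory=list)  # commands issued so far
    next_id: int = 0

    def alloc(self, values: list[int]) -> str:
        """Store a vector in a fresh row and return the row's name."""
        name = f"r{self.next_id}"
        self.next_id += 1
        self.rows[name] = list(values)
        return name

    def execute(self, op: str, dst: str, a: str, b: str) -> None:
        """Record the command, then emulate its effect on the stored rows."""
        self.log.append(f"{op} {dst}, {a}, {b}")
        x, y = self.rows[a], self.rows[b]
        if op == "ADD":
            self.rows[dst] = [p + q for p, q in zip(x, y)]
        elif op == "MUL":
            self.rows[dst] = [p * q for p, q in zip(x, y)]
        else:
            raise ValueError(f"unknown command: {op}")


class ToyTensor:
    """Python-side handle: operators become memory commands, not CPU loops."""

    def __init__(self, mem: ToyMemory, values: list[int] | None = None,
                 name: str | None = None) -> None:
        self.mem = mem
        self.name = name if name is not None else mem.alloc(values or [])

    def _binary(self, op: str, other: ToyTensor) -> ToyTensor:
        dst = self.mem.alloc([])  # reserve a row for the result
        self.mem.execute(op, dst, self.name, other.name)
        return ToyTensor(self.mem, name=dst)

    def __add__(self, other: ToyTensor) -> ToyTensor:
        return self._binary("ADD", other)

    def __mul__(self, other: ToyTensor) -> ToyTensor:
        return self._binary("MUL", other)

    def to_list(self) -> list[int]:
        """Only here does data leave the (emulated) memory."""
        return list(self.mem.rows[self.name])


mem = ToyMemory()
a = ToyTensor(mem, [1, 2, 3])
b = ToyTensor(mem, [4, 5, 6])
c = a * b + a

print(mem.log)      # ['MUL r2, r0, r1', 'ADD r3, r2, r0']
print(c.to_list())  # [5, 12, 21]
```

In this sketch the developer writes `a * b + a` as usual, while the command log shows what a memory-side executor would receive. A real system would issue hardware-specific instructions rather than readable strings.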

Historical Context and Motivation

The Memory Wall Problem

The segregation of processing and memory functions in conventional computer architectures has long been a challenge, often referred to as the “memory wall problem.” Advances in processor speeds and memory storage capacities have exacerbated this issue, leading to significant data transfer bottlenecks. Prof. Shahar Kvatinsky and his team at Technion have focused on solving this problem by developing the PyPIM platform.

The memory wall problem arises because the speed at which processors can execute instructions has outpaced the speed at which data can be read from or written to memory. This discrepancy creates a bottleneck, limiting the overall efficiency of computational processes. Recognizing this challenge, Prof. Kvatinsky and his team have aimed to create a solution that seamlessly integrates processing capabilities within the memory itself. Their work on PyPIM represents a fundamental shift in how data processing and memory storage are handled, addressing the root cause of the memory wall problem.
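A rough way to see the memory wall is a roofline-style lower bound: a task can finish no faster than the slower of its compute time and its data-movement time. The sketch below uses placeholder hardware figures chosen only for illustration (they are not measurements of any real machine), and the function name `lower_bound_seconds` is an assumption of this example.

```python
def lower_bound_seconds(operations: float, bytes_moved: float,
                        peak_ops_per_s: float, bandwidth_bytes_per_s: float):
    """Return (time, limiting_factor) for a simple roofline-style bound."""
    compute_time = operations / peak_ops_per_s
    memory_time = bytes_moved / bandwidth_bytes_per_s
    if memory_time > compute_time:
        return memory_time, "memory"
    return compute_time, "compute"


# Placeholder machine: 1e12 operations/s and 1e11 bytes/s of memory bandwidth.
PEAK_OPS = 1e12
BANDWIDTH = 1e11

# Adding two vectors of 8-byte numbers: one operation per element,
# but 24 bytes moved per element (two reads and one write).
n = 10**8
seconds, limit = lower_bound_seconds(n, 24 * n, PEAK_OPS, BANDWIDTH)
print(seconds, limit)
```

With these placeholder figures the addition is limited by memory traffic rather than by arithmetic, which is the situation in-memory computing is meant to address.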

Collaborative Research Efforts

The development of PyPIM exemplifies collaborative research, integrating efforts from various experts, including Ph.D. student Orian Leitersdorf and senior researcher Ronny Ronen. Their combined expertise has resulted in a platform that simplifies the process of developing software for in-memory computing, bridging the gap between new hardware capabilities and traditional programming frameworks.

Such collaboration ensures that the PyPIM platform is not only technically advanced but also practical and user-friendly. The involvement of researchers with diverse expertise has facilitated the creation of a tool that meets the needs of a broad user base. For developers, this means they can now implement in-memory computing solutions without having to overhaul their entire coding practices. This synergy between theoretical research and practical application is what makes PyPIM a groundbreaking advancement in the field of computer architecture.

Rising Interest in In-Memory Computing

Applications Across Various Fields

The in-memory computing approach is attracting significant attention in various fields, including artificial intelligence, bioinformatics, finance, and information systems. Several research groups across academia and industry are investigating this approach, focusing on memory architecture and the production of innovative memory units. The potential applications of in-memory computing are vast, promising significant advancements in these fields.

By enabling data processing within memory, in-memory computing can significantly accelerate machine learning algorithms, making real-time analytics and decision-making processes more efficient. In the field of bioinformatics, in-memory computing can expedite the analysis of large genomic datasets, leading to faster breakthroughs in personalized medicine. Similarly, in finance, this technology can enhance the speed and accuracy of high-frequency trading algorithms, providing an edge in the highly competitive market. The widespread interest and ongoing research into in-memory computing underscore its potential to transform multiple industries.

Shift in Software Development Practices

Historically, software was designed based on the classic architectural model of separate CPU and memory units. The transition to in-memory computing necessitates the development of new algorithms and software capable of utilizing this architecture. PyPIM stands as a bridge between the old and the new, allowing developers accustomed to traditional architectures to adapt to the innovative in-memory processing model without needing to overhaul their existing workflows completely.

The adoption of in-memory computing requires a rethinking of conventional coding practices and software design principles. Developers must now consider how to optimize their algorithms to take full advantage of in-memory processing capabilities. PyPIM aids this transition by providing the necessary tools and frameworks that make it easier to integrate in-memory computing into current systems. The platform’s ability to simulate the performance of code on in-memory systems allows developers to evaluate the potential benefits and make informed decisions about adopting this new technology.

Main Findings and Performance Improvements

Impact of the PyPIM Platform

PyPIM simplifies the process of developing software for in-memory computing, providing a simulation tool that allows developers to measure and predict the performance improvements of their code when executed on in-memory computing systems. This platform offers a practical solution for integrating in-memory computing capabilities into existing systems, enhancing computational efficiency and performance.

The simulation tool is particularly valuable for developers, as it enables them to test and optimize their code before deploying it on actual in-memory hardware. This not only saves time and resources but also ensures that the final implementation is as efficient as possible. By offering a clear demonstration of the potential performance gains, PyPIM makes a compelling case for the adoption of in-memory computing. This practical approach helps bridge the gap between experimental research and real-world application, making the benefits of in-memory computing accessible to a wider audience.
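The kind of estimate such a simulation produces can be illustrated with a toy cost model. This is not PyPIM's simulator: the `Op` record, the `estimate` function, and the unit costs are all hypothetical placeholders. The model charges a CPU-centric system for moving every operand and result, and charges an in-memory system only for the operations themselves.

```python
from typing import NamedTuple


class Op(NamedTuple):
    name: str
    elements: int  # number of elements processed
    operands: int  # number of input vectors


def estimate(trace, transfer_cost: float = 10.0, compute_cost: float = 1.0):
    """Return (cpu_cost, in_memory_cost, speedup) in arbitrary cost units.

    The unit costs are illustrative placeholders, not measured values.
    """
    cpu_cost = 0.0
    in_memory_cost = 0.0
    for op in trace:
        compute = op.elements * compute_cost
        # CPU-centric: read every input element, write every output element.
        transfers = (op.operands + 1) * op.elements * transfer_cost
        cpu_cost += transfers + compute
        # In-memory: operands stay in place (command overhead ignored here).
        in_memory_cost += compute
    return cpu_cost, in_memory_cost, cpu_cost / in_memory_cost


trace = [Op("add", 1000, 2), Op("mul", 1000, 2), Op("neg", 500, 1)]
cpu_cost, in_memory_cost, speedup = estimate(trace)
print(cpu_cost, in_memory_cost, speedup)  # 72500.0 2500.0 29.0
```

The numbers depend entirely on the assumed costs. In practice an in-memory operation may cost more than its CPU counterpart, so a realistic simulator needs per-operation costs derived from the target hardware.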

Demonstrated Efficiency Gains

Demonstrations of mathematical and algorithmic computations using PyPIM show significant performance improvements, highlighting the practical benefits of this new approach. By processing data directly within memory, the PyPIM platform significantly reduces the energy and time needed for data transfer, resulting in overall performance gains.

These efficiency gains are not merely theoretical but have been validated through practical experimentation. Tests conducted with PyPIM have shown higher processing speeds and lower energy consumption, making it a viable option for a range of applications. Performing calculations directly within memory units reduces the latency associated with data transfer, leading to faster and more efficient computation. This can be particularly advantageous where speed and energy efficiency are critical, such as in data centers and large-scale computational projects.

Future Implications and Potential Applications

Broadening the Scope of In-Memory Computing

As more research groups and companies explore and refine in-memory computing techniques, we may witness a significant shift in computer architecture and performance capabilities. The implications of this research are broad, with potential applications spanning many fields. By enhancing processing efficiency and reducing energy consumption, the PyPIM platform could help change how computing systems are built and programmed.

The adoption of in-memory computing could lead to the development of more advanced and efficient processors, capable of handling increasingly complex computational tasks. As the technology matures, we can expect to see it applied in a broader range of industries, from healthcare and finance to entertainment and telecommunications. PyPIM represents a step toward this future, providing a foundation on which further innovations can be built. As more organizations adopt in-memory computing, the cumulative effect could be a substantial increase in overall computational capacity, enabling new possibilities in data processing and analysis.

Encouraging Further Research and Development

In summary, researchers at the Technion (Israel Institute of Technology) have unveiled PyPIM (Python Processing-in-Memory), a software package that facilitates in-memory computing. Rather than shifting data back and forth between the CPU and memory, computers can carry out processing operations directly within their memory units, which cuts both energy consumption and processing time. By reducing the dependency on traditional data transfers, PyPIM addresses a major challenge in current computing systems. The approach could raise performance and efficiency across a wide array of applications, from artificial intelligence to large-scale data analysis. As computers become an increasingly integral part of daily life, such advances bode well for future technological progress.