The silent accumulation of data within an application’s digital exhaust pipes often goes unnoticed until the precise moment a system-wide failure cascades through production environments. At that critical juncture, engineering teams are thrown into a frantic search for answers, only to discover that the very records meant to illuminate the problem are a tangled, context-free mess. This common scenario transforms what should be a company’s greatest diagnostic tool into its most significant operational burden. The distinction between insightful logging and mere data hoarding is not a trivial technical detail; it is a fundamental business imperative that directly influences downtime, engineering costs, security posture, and the ability to innovate safely. When logs fail, resolution times stretch from minutes to hours, developer burnout escalates, and hidden vulnerabilities fester, posing a direct threat to revenue and reputation.

The 3:00 a.m. Outage: When Your Logs Fail Their Only Job

The jarring sound of a pager alert in the dead of night signals more than just a technical failure; it marks the beginning of a high-stakes race against time. For the on-call engineer, the first line of defense and the primary source of hope is the application’s logs. The expectation is a clear, chronological record of events that can pinpoint the exact moment things went wrong. This initial optimism, however, frequently gives way to a sense of dread as the reality of the situation becomes clear.

Instead of a precise forensic trail, the engineer is often confronted with an undifferentiated wall of text. Ambiguous messages like “Request processed” or “Null pointer exception” appear thousands of times, stripped of any useful context that might explain their cause or impact. Sifting through this digital noise during a live incident is a deeply inefficient and stressful process. Each minute spent deciphering cryptic entries is a minute of system downtime, a minute of eroding customer trust, and a minute closer to significant financial loss. This experience exposes a harsh truth: the logs are not aiding the investigation; they are actively hindering it.

This moment of crisis forces a difficult but necessary question upon any organization: are the logs a meticulously maintained asset, capable of reconstructing events with surgical precision, or are they an unmanaged liability? The answer separates organizations that are in control of their complex systems from those that are merely reacting to them. An asset provides clarity and accelerates resolution, while a liability consumes valuable resources, prolongs outages, and obscures the very information needed to prevent a recurrence.

Beyond the Console: Why Strategic Logging Is Non-Negotiable

Effective logging serves a purpose that extends far beyond troubleshooting production failures. It is the definitive “black box recorder” for an application, capturing the essential evidence needed for the entire software lifecycle. From debugging new features in development and auditing security events to analyzing performance trends and deriving business insights, well-structured logs provide the ground truth for how a system behaves under real-world conditions. Without this comprehensive narrative, teams are left to operate on assumptions and guesswork.

The consequences of neglecting a strategic approach to logging manifest as direct and indirect business costs. The most visible cost is an extended Mean Time To Resolution (MTTR) during outages, which has a clear and immediate impact on revenue and customer satisfaction. However, the hidden costs are equally damaging. The chronic stress and frustration of navigating useless logs during high-pressure situations is a major contributor to developer burnout and employee turnover. Furthermore, poorly monitored systems can harbor security vulnerabilities that go unnoticed until they are exploited, leading to potentially catastrophic data breaches.

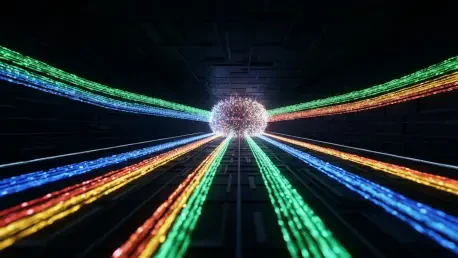

These challenges are magnified by the complexity of modern software architectures. The shift toward distributed systems, microservices, and serverless functions has fragmented applications into hundreds of independent, communicating components. In such an environment, a single user request may traverse dozens of services, making it impractical to understand system behavior by examining the logs of any one service in isolation. A centralized, correlated source of truth is no longer a “nice-to-have” but a prerequisite for maintaining operational awareness and control.

The Anatomy of Asset-Grade Logging: Core Principles for Clarity and Insight

The most fundamental transformation in modern logging is the shift from unstructured text walls to structured, machine-readable data, typically in a format like JSON. An unstructured message such as “database connection failed” is readable by a human but opaque to analysis tools. In contrast, its structured equivalent, {"level": "error", "message": "database connection failed", "service": "auth-api", "db_host": "prod-db-1"}, turns logs into a queryable dataset. This change makes it possible to filter, search, aggregate, and visualize log data by field, so the log store behaves like a diagnostic database rather than a text file to scroll through.
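As a minimal sketch of that shift, the following uses only Python's standard `logging` and `json` modules to emit the structured entry shown above. The `JsonFormatter` class, the `build_logger` helper, and the convention of passing extra fields under a `fields` key are assumptions made for this illustration, not a standard API.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname.lower(),
            "message": record.getMessage(),
        }
        # Hypothetical convention: structured fields arrive via extra={"fields": {...}}.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


def build_logger(name: str, stream: io.StringIO) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


buffer = io.StringIO()
log = build_logger("auth-api", buffer)
log.error(
    "database connection failed",
    extra={"fields": {"service": "auth-api", "db_host": "prod-db-1"}},
)

# Because the entry is data rather than free text, it can be queried by field.
entry = json.loads(buffer.getvalue())
matches_query = entry["level"] == "error" and entry["db_host"] == "prod-db-1"
```

In a real deployment the handler would write to stdout or a log shipper rather than an in-memory buffer; the buffer is used here only so the result can be inspected.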

Managing the signal-to-noise ratio is essential for making logs useful, especially during a crisis. This is the primary role of log levels. By categorizing events using standardized levels such as INFO (routine business operations), WARN (potential issues that do not disrupt service), ERROR (functional failures requiring attention), and FATAL (critical crashes), teams can instantly assess urgency. In production, an application might run at the INFO level to reduce noise, but engineers must have the ability to dynamically increase verbosity to a DEBUG or TRACE level to hunt down elusive bugs without requiring a new deployment.
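The sketch below illustrates level filtering and a runtime verbosity change with Python's standard `logging` module. The `set_verbosity` helper is hypothetical; in practice it might be wired to an admin endpoint, a signal handler, or a configuration watcher. Note that Python's standard library names its levels DEBUG, INFO, WARNING, ERROR, and CRITICAL, and has no built-in TRACE level.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))

logger = logging.getLogger("checkout")
logger.propagate = False
logger.handlers = [handler]

# Production default: INFO and above.
logger.setLevel(logging.INFO)
logger.debug("cart contents: 3 items")   # suppressed at INFO
logger.info("order placed")
logger.warning("payment retry 1 of 3")


def set_verbosity(level_name: str) -> None:
    """Hypothetical hook for changing verbosity without a redeploy."""
    logger.setLevel(level_name.upper())


# During an investigation, raise verbosity on the running process.
set_verbosity("debug")
logger.debug("cart contents: 3 items")   # now emitted

lines = stream.getvalue().splitlines()
```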

A log entry without context is an incomplete story. To be truly valuable, every log must answer the crucial questions of who, what, where, and why. This means enriching logs with essential contextual data points. A unique trace ID is indispensable for following a single request as it travels across multiple microservices. Including user or session IDs helps in replicating user-specific problems. Capturing the system state at the time of the event, along with the full error details and stack trace, provides the complete picture needed for rapid and accurate diagnosis.

This principle of providing a complete narrative finds its expression in the concept of a “canonical log line”—a single, comprehensive entry generated at the conclusion of a process that summarizes the entire operation. It captures the inputs, outcomes, duration, and any errors encountered. In the world of distributed systems, this idea evolves into distributed tracing, often implemented using open standards like OpenTelemetry. Tracing stitches together the individual operations (spans) from every service involved in a request, creating a cohesive and detailed view of the entire journey. This provides unparalleled insight into system performance and failure modes.
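A canonical log line can be approximated with a small context manager that collects fields during an operation and emits one summary entry when it ends. This is a sketch under stated assumptions: the `canonical_log_line` helper and its field names are invented for illustration, and it is not a substitute for a tracing library such as an OpenTelemetry SDK.

```python
import json
import time
from contextlib import contextmanager


@contextmanager
def canonical_log_line(operation: str, emit=print):
    """Collect fields during an operation, then emit one summary line."""
    fields = {"operation": operation, "outcome": "ok"}
    start = time.perf_counter()
    try:
        yield fields
    except Exception as exc:
        # Record the failure, then let the exception continue to propagate.
        fields["outcome"] = "error"
        fields["error"] = f"{type(exc).__name__}: {exc}"
        raise
    finally:
        fields["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
        emit(json.dumps(fields))


emitted = []

# Successful operation: inputs and results are added as the work proceeds.
with canonical_log_line("checkout", emit=emitted.append) as line:
    line["user_id"] = "user-7"
    line["items"] = 3

# Failing operation: the summary line is still written exactly once.
try:
    with canonical_log_line("refund", emit=emitted.append) as line:
        line["order_id"] = "order-42"
        raise ValueError("refund window expired")
except ValueError:
    pass

success, failure = (json.loads(entry) for entry in emitted)
```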

Finally, a mature observability strategy recognizes the distinct roles of different data types. A common mistake is to rely solely on logs for monitoring. Logs are ideal for investigating discrete events—the “what happened” for a specific transaction. Metrics, on the other hand, are aggregates that describe system behavior over time—the “how often” and “how fast.” Metrics are used for building dashboards and setting alerts that signal a problem (e.g., “the error rate has increased by 50%”). Once alerted, teams then turn to the detailed, context-rich logs to understand why the problem is occurring.
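To make the division of labor concrete, here is a rough sketch of the metric side: an aggregate error rate per time window and a rule that fires when it rises by 50% relative to a baseline. `WindowCounts`, `error_rate_alert`, and the sample counts are all made up for illustration; a real system would usually delegate this to a metrics and alerting platform.

```python
from dataclasses import dataclass


@dataclass
class WindowCounts:
    """Aggregate counters for one time window (a metric, not a log)."""

    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def error_rate_alert(
    baseline: WindowCounts, current: WindowCounts, relative_increase: float = 0.5
) -> bool:
    """Fire when the error rate grows by at least `relative_increase` over baseline."""
    if baseline.error_rate == 0.0:
        return current.error_rate > 0.0
    growth = (current.error_rate - baseline.error_rate) / baseline.error_rate
    return growth >= relative_increase


# Made-up sample windows: the error rate doubles from 2% to 4%.
baseline = WindowCounts(requests=1000, errors=20)
current = WindowCounts(requests=1000, errors=40)
alert = error_rate_alert(baseline, current)
```

The alert only says that something changed; the matching error-level log entries for the same window are where the cause is investigated.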

Learning from Costly Mistakes: Security and Performance Realities

High-profile security incidents at major technology companies have provided the entire industry with stark, invaluable lessons on the dangers of improper logging. The accidental logging of sensitive information such as plain-text user credentials, personally identifiable information (PII), or internal API keys can bypass other security controls and lead to devastating breaches. These events serve as a powerful reminder that logs are often a treasure trove of sensitive data and must be treated with the same rigor as a production database.

This reality necessitates a strict, non-negotiable policy on what data must never be logged. This includes passwords, authentication tokens, session cookies, API keys, credit card numbers, and any other data classified as sensitive or regulated. The best strategy is a proactive one, implementing automated redaction within the logging library or pipeline to catch and scrub sensitive data patterns before they are ever written to storage. This technical control must be paired with strong governance, including encrypting logs both in transit and at rest and enforcing strict Role-Based Access Control (RBAC) to ensure that only authorized personnel can access them.
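Automated redaction inside the logging library can be sketched as a standard `logging.Filter` that rewrites each message before any handler writes it. The two patterns below are deliberately simple assumptions for illustration; a production rule set would need to be broader and tested against real data formats, and pattern matching alone should not be treated as a complete safeguard.

```python
import io
import logging
import re

# Illustrative patterns only: key=value secrets and 16-digit card numbers.
REDACTION_RULES = [
    (re.compile(r"(?i)\b(password|token|api_key)=\S+"), r"\1=[REDACTED]"),
    (re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"), "[REDACTED_CARD]"),
]


class RedactingFilter(logging.Filter):
    """Scrub sensitive patterns from the fully formatted message."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merges msg with its % arguments
        for pattern, replacement in REDACTION_RULES:
            message = pattern.sub(replacement, message)
        record.msg = message
        record.args = ()  # arguments are already merged into msg
        return True


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(RedactingFilter())

logger = logging.getLogger("payments")
logger.propagate = False
logger.setLevel(logging.INFO)
logger.handlers = [handler]

logger.info("login failed for %s password=%s", "bob", "hunter2")
logger.info("charge declined for card 4111 1111 1111 1111")

redacted_lines = stream.getvalue().splitlines()
```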

Beyond security, logging carries a hidden performance tax that can impact application responsiveness. Every log statement consumes CPU cycles, memory, and I/O bandwidth. In high-throughput systems, verbose or inefficiently implemented logging can become a significant performance bottleneck, slowing down critical operations. Mitigating this impact requires a conscious effort, including choosing highly optimized, asynchronous logging libraries, implementing intelligent sampling on high-traffic code paths, and incorporating the logging configuration into regular performance and load testing to identify and resolve bottlenecks before they affect users.
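Python's standard library offers one way to take log I/O off the request path: `logging.handlers.QueueHandler` enqueues records cheaply, and a `QueueListener` writes them from a background thread. The sketch below assumes an in-memory stream standing in for a slow destination such as a file or network socket.

```python
import io
import logging
import logging.handlers
import queue

log_queue: queue.Queue = queue.Queue(-1)  # unbounded queue

# Stand-in for a slow destination (file, socket, remote collector).
stream = io.StringIO()
slow_handler = logging.StreamHandler(stream)

# The listener drains the queue on its own thread and calls the slow handler.
listener = logging.handlers.QueueListener(log_queue, slow_handler)

logger = logging.getLogger("hot-path")
logger.propagate = False
logger.setLevel(logging.INFO)
# The application thread only pays the cost of putting a record on the queue.
logger.handlers = [logging.handlers.QueueHandler(log_queue)]

listener.start()
for request_number in range(3):
    logger.info("processed request %d", request_number)
listener.stop()  # processes everything still queued, then joins the thread

output_lines = stream.getvalue().splitlines()
```

An unbounded queue trades memory for throughput; a bounded queue with an explicit drop policy is a common alternative when log bursts could otherwise exhaust memory.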

A Practical Framework for Transforming Your Logging Strategy

A successful logging transformation begins not with tools, but with clear objectives. The antiquated approach of “log everything” is both expensive and ineffective, creating a sea of noise that makes finding valuable signals nearly impossible. Instead, a modern strategy starts by defining what needs to be understood. This involves identifying the critical operational paths, key business transactions, and service-level objectives (SLOs) that matter most. Logging then becomes a targeted activity designed to provide the specific data needed to monitor, debug, and secure these vital functions.

With objectives defined, the next operational imperative is to centralize all log data. In a distributed architecture, logs are generated across countless services, containers, and infrastructure components. Attempting to troubleshoot an issue by manually accessing dozens of different log sources is a recipe for failure. By aggregating all logs into a single, unified platform, teams gain the ability to search and correlate events across the entire technology stack. This cross-system visibility is crucial for uncovering complex root causes, such as how a performance degradation in a downstream database led to a cascade of timeouts in upstream services.
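Once logs from every service land in one place and share a trace ID, correlation becomes a grouping and sorting problem. The sketch below assumes JSON log lines with hypothetical `ts`, `service`, `trace_id`, and `message` fields and made-up sample data; a real aggregation platform would run the equivalent query over its index.

```python
import json
from collections import defaultdict


def correlate(log_lines: list[str]) -> dict[str, list[dict]]:
    """Group entries by trace ID and order each group by timestamp."""
    by_trace: dict[str, list[dict]] = defaultdict(list)
    for line in log_lines:
        entry = json.loads(line)
        by_trace[entry["trace_id"]].append(entry)
    for entries in by_trace.values():
        # ISO 8601 UTC timestamps in one format sort correctly as strings.
        entries.sort(key=lambda entry: entry["ts"])
    return dict(by_trace)


# Made-up entries collected from three different services.
collected = [
    '{"ts": "2024-01-01T03:00:02Z", "service": "checkout-api", "trace_id": "t1", "message": "upstream timeout"}',
    '{"ts": "2024-01-01T03:00:01Z", "service": "orders-db", "trace_id": "t1", "message": "slow query"}',
    '{"ts": "2024-01-01T03:00:01Z", "service": "auth-api", "trace_id": "t2", "message": "login ok"}',
]

traces = correlate(collected)
timeline = [(entry["service"], entry["message"]) for entry in traces["t1"]]
```

Read in order, the timeline for trace `t1` shows the slow database query preceding the upstream timeout, which is the kind of cross-service cause and effect that per-service logs hide.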

As log volume scales, cost management becomes a critical concern. Storing and indexing terabytes of data can become prohibitively expensive. Intelligent log sampling provides a powerful lever for controlling costs without sacrificing essential insights. This technique involves retaining 100% of high-value logs, such as errors and warnings, while storing only a representative sample of high-volume, low-value logs, like successful health checks. This is complemented by tiered retention policies that automatically migrate logs from expensive, high-performance “hot” storage to cheaper “cold” storage and eventually to archival tiers, balancing accessibility with cost-effectiveness.

Finally, a robust logging framework must be secure by default. This means embedding security practices directly into the logging pipeline rather than treating them as an afterthought. Modern logging libraries and aggregation tools provide powerful capabilities for proactively filtering and redacting sensitive data before it ever leaves the application environment. By building a secure-by-default pipeline, organizations can enforce compliance policies programmatically, automate the protection of sensitive information, and drastically reduce the risk of accidental data exposure through logs.

The journey from chaotic, reactive logging to a strategic, asset-grade observability practice is a defining characteristic of resilient engineering organizations. The true value of that transformation lies not merely in faster troubleshooting, but in the cultural shift it enables. Teams that master their operational data develop a deeper, more intuitive understanding of their complex systems. This fosters a proactive mindset, where the focus shifts from reacting to failures to anticipating and preventing them. That foundational clarity becomes the bedrock upon which more advanced capabilities, such as AIOps and predictive failure analysis, can be reliably built. The fundamental lesson is that no one can hope to predict the future of a system whose present cannot even be properly observed.