Scaling modern applications often involves a delicate balancing act between performance, availability, and infrastructure cost, a challenge that is particularly evident when architecting distributed in-memory data stores like Redis. Redis is celebrated for its speed and versatility, but because it executes commands on a single main thread, a standard Redis process on a multi-core virtual machine effectively uses only one CPU core for command processing (the optional I/O threads in Redis 6 and later offload network reads and writes, not command execution), leaving the remaining cores largely idle. This limitation forces many organizations into a costly compromise: achieving high availability and write scalability through traditional clustering requires a large fleet of virtual machines, which drives up infrastructure and operational overhead. A more deliberate approach to cluster topology, one that combines containerization with CPU-aware placement, can resolve this conflict. By aligning the deployment architecture with Redis’s execution model, it is possible to build a highly available, horizontally scalable cluster that makes full use of each host's cores, reduces the infrastructure footprint, and delivers high throughput without the expense typically associated with such setups.

1. Exploring Common Redis Deployment Models

In a conventional Redis deployment designed for high availability, the master-replica topology serves as the foundational model. A single master node is the authoritative target for all write operations, while one or more replica nodes asynchronously copy the master’s dataset. The primary benefit of this approach is resilience: when the deployment is monitored by Redis Sentinel, a replica can be promoted automatically if the master fails, keeping service disruption to a minimum (without Sentinel or another failover manager, promotion is a manual step). The model also supports read scaling, because client applications can send read queries to the replicas, offloading the master and improving overall responsiveness, at the cost of possibly reading slightly stale data since replication is asynchronous. Despite these advantages, the standard master-replica architecture has a significant limitation in write-intensive scenarios. Because every write command must be processed by the single master, the master becomes a bottleneck that cannot be scaled horizontally. Compounding this issue is inefficient use of modern hardware: since each Redis process executes commands on one thread, placing a master or replica alone on a multi-core virtual machine leaves most of its cores idle, no matter how much capacity is available.
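The read/write split and the promotion step can be pictured with a small Python model. This is a hypothetical sketch, not a client library: the class name, the (deliberately incomplete) set of read-only commands, and the addresses are illustrative assumptions. In practice a client handles this, for example redis-py's Sentinel support (`master_for` / `slave_for`), with Sentinel deciding which replica gets promoted.

```python
from __future__ import annotations

from itertools import cycle

# Hypothetical, deliberately incomplete list of read-only commands.
READ_COMMANDS = {"GET", "HGET", "LRANGE", "EXISTS", "TTL"}


class MasterReplicaRouter:
    """Toy model of read/write splitting in a single-master topology."""

    def __init__(self, master: str, replicas: list[str]) -> None:
        self.master = master
        self.replicas = list(replicas)
        self._next_replica = cycle(self.replicas)

    def route(self, command: str) -> str:
        """Return the address of the node that should receive `command`."""
        if command.upper() in READ_COMMANDS and self.replicas:
            # Reads rotate across replicas; results may lag the master
            # slightly because replication is asynchronous.
            return next(self._next_replica)
        # Writes, and any command not known to be read-only, go to the master.
        return self.master

    def promote(self, replica: str) -> None:
        """Model a failover: `replica` replaces the failed master."""
        self.replicas.remove(replica)
        self.master = replica
        self._next_replica = cycle(self.replicas)


if __name__ == "__main__":
    router = MasterReplicaRouter("10.0.0.1:6379", ["10.0.0.2:6379", "10.0.0.3:6379"])
    print(router.route("SET"), router.route("GET"), router.route("GET"))
```

Every write still lands on `router.master`, which is exactly the single-node bottleneck described above: adding replicas spreads reads, never writes.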

To overcome the write scalability limits of the single-master setup, a multi-master cluster architecture (Redis Cluster) is frequently adopted. This distributed model partitions the dataset across multiple master nodes, a process known as sharding: the key space is divided into 16,384 hash slots, each key is mapped to a slot by taking the CRC16 of the key modulo 16384, and each master owns a subset of the slots. Write operations are therefore spread across several nodes at once, increasing the system’s aggregate write throughput. Each master typically has its own replicas to maintain high availability, so the failure of a single master affects only the fraction of the dataset it owns and is handled through automated failover. While this approach removes the write bottleneck and provides good fault isolation, it introduces considerable infrastructure cost and operational complexity. For instance, a modest cluster with three masters, each with two replicas, consists of nine Redis instances; with one instance per machine, that means nine separate virtual machines. Managing this larger fleet adds overhead for patching, security, and monitoring. Furthermore, keeping load evenly distributed across the shards can be challenging, because an imbalanced key distribution, or a few very hot keys, can create hotspots where one master is overloaded while others remain underutilized.
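The key-to-master mapping can be sketched in a few lines of Python. Redis Cluster hashes each key with CRC16 (the XModem variant) modulo 16384 and honors `{...}` hash tags; the contiguous split in `even_slot_ranges` is an approximation of how redis-cli divides slots among masters, and all function names here are illustrative rather than part of any Redis client API.

```python
from __future__ import annotations

NUM_SLOTS = 16384


def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0 (XModem variant)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_hash_slot(key: str) -> int:
    """Map a key to its hash slot, applying the hash-tag rule."""
    data = key.encode()
    # If the key has "{" followed later by "}" with at least one byte in
    # between, only the bytes between the first "{" and the next "}" are hashed.
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end > start + 1:
            data = data[start + 1:end]
    return crc16_xmodem(data) % NUM_SLOTS


def even_slot_ranges(masters: int) -> list[tuple[int, int]]:
    """Split the slot space into contiguous, nearly equal ranges, one per master."""
    per_master = NUM_SLOTS / masters
    ranges: list[tuple[int, int]] = []
    first, cursor = 0, 0.0
    for i in range(masters):
        last = NUM_SLOTS - 1 if i == masters - 1 else round(cursor + per_master - 1)
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


def owner(key: str, ranges: list[tuple[int, int]]) -> int:
    """Return the index of the master whose slot range contains the key's slot."""
    slot = key_hash_slot(key)
    for index, (first, last) in enumerate(ranges):
        if first <= slot <= last:
            return index
    raise ValueError(f"slot {slot} not covered by {ranges}")
```

Keys that share a hash tag, such as `user:{1000}:profile` and `user:{1000}:orders`, always land in the same slot, which is what allows multi-key operations on them in a cluster. The same property can pile load onto one master if many hot keys share a tag, which is one source of the hotspots mentioned above.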

2. Implementing an Optimized Containerized Architecture

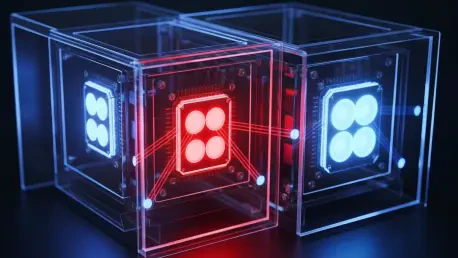

A more cost-effective and resource-efficient alternative is a containerized architecture that consolidates Redis instances onto fewer, larger virtual machines. This strategy uses a container orchestration platform such as Docker Swarm to connect the hosts and manage the Redis containers, and uses CPU pinning (for example, Docker's --cpuset-cpus option) so that each Redis process runs on a dedicated CPU core, making full use of the underlying hardware. A practical blueprint for this model uses three virtual machines with four CPU cores each, joined into a single Docker Swarm. Each VM runs three Redis containers: one is the master for that machine, and the other two are replicas of the masters running on the other two VMs. The result is a cluster of three masters, each with two replicas, on three hosts instead of nine, and no replica shares a VM with its own master. The CPU allocation on each VM is planned explicitly: one core is dedicated to the master process, one core to each of the two replica processes, and the fourth core is reserved for the operating system and the Docker daemon. This approach offers write throughput comparable to a nine-VM multi-master setup and tolerates the loss of any single VM, with a much smaller infrastructure footprint.
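The placement rules in this blueprint can be written down as a small planning script. This is a hypothetical sketch: the host names, the port numbering (6379 for the master, 6380 and 6381 for replicas), and the choice of core 3 as the "final core" reserved for the OS and Docker are assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical host names; cores 0-2 run Redis, core 3 is left to the OS and Docker.
HOSTS = ["vm1", "vm2", "vm3"]
CORES_PER_HOST = 4
RESERVED_CORE = 3


@dataclass(frozen=True)
class Instance:
    host: str
    port: int
    core: int
    role: str         # "master" or "replica"
    master_host: str  # VM whose master this instance serves or replicates


def plan_layout(hosts: list[str]) -> list[Instance]:
    """One master per VM; each VM also replicates every other VM's master."""
    layout = []
    for host in hosts:
        layout.append(Instance(host, 6379, 0, "master", host))
        others = [h for h in hosts if h != host]
        for offset, other in enumerate(others, start=1):
            layout.append(Instance(host, 6379 + offset, offset, "replica", other))
    return layout


def check_layout(layout: list[Instance], replicas_per_master: int = 2) -> None:
    """Raise ValueError if the plan breaks any of the placement rules."""
    used = set()
    for inst in layout:
        if inst.core == RESERVED_CORE or inst.core >= CORES_PER_HOST:
            raise ValueError(f"{inst.host}:{inst.port} uses unavailable core {inst.core}")
        if (inst.host, inst.core) in used:
            raise ValueError(f"core {inst.core} on {inst.host} is assigned twice")
        used.add((inst.host, inst.core))
        if inst.role == "replica" and inst.host == inst.master_host:
            raise ValueError(f"{inst.host}:{inst.port} replicates a master on the same VM")
    for host in {i.host for i in layout if i.role == "master"}:
        count = sum(1 for i in layout if i.role == "replica" and i.master_host == host)
        if count != replicas_per_master:
            raise ValueError(f"master on {host} has {count} replicas")


if __name__ == "__main__":
    plan = plan_layout(HOSTS)
    check_layout(plan)
    for inst in plan:
        print(inst)
```

The planned core for each container would then be applied at start-up, for example by passing it to Docker's `--cpuset-cpus` option.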

The implementation of this CPU-aware Redis cluster begins with the foundational infrastructure. First, a Docker Swarm is initialized, with one of the three virtual machines acting as the manager and the other two joining as workers. Next, an overlay network is created with the --attachable flag, which allows standalone containers, not only Swarm services, to connect to it and communicate across hosts. Firewall rules on all nodes must then be adjusted. Swarm itself needs TCP 2377 (cluster management), TCP and UDP 7946 (node communication), and UDP 4789 (overlay network traffic). Redis needs each instance's client port (for example 6379, 6380, and 6381 for the three containers on a VM) plus its cluster bus port, which by default is the client port plus 10000 (16379, 16380, and 16381).

With the networking in place, the Redis containers are launched on all three VMs. Each container joins the overlay network, is pinned to its assigned core, and runs redis-server with cluster mode enabled (cluster-enabled yes), since redis-cli can only build a cluster from nodes started in cluster mode. Once all containers are running and reachable at their overlay IP addresses, the cluster is created by running redis-cli --cluster create from within any of the containers, passing the address and port of all nine nodes. The --cluster-replicas 2 argument tells redis-cli to assign two replicas to each master. Because redis-cli tries to spread masters and replicas across distinct IP addresses, and every container has its own overlay IP, it cannot tell which VM a node lives on, so the resulting pairings should be checked against the intended one-master-per-VM layout. If they differ, the cluster can instead be created from the three masters alone, with each replica then attached using redis-cli --cluster add-node together with --cluster-slave and --cluster-master-id. Finally, redis-cli cluster nodes confirms that all nodes have joined, shows which master each replica follows, and lists how the hash slots were distributed.
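The launch and creation steps can be captured in a small Python helper that builds, but does not run, the commands. The network name, image tag, container names, node timeout, and IP addresses are assumptions for this sketch; `--driver overlay`, `--attachable`, `--cpuset-cpus`, `cluster-enabled`, `cluster-config-file`, `cluster-node-timeout`, and `--cluster-replicas` are standard Docker and Redis options.

```python
from __future__ import annotations

import shlex

# Hypothetical values for this sketch.
NETWORK = "redis-cluster-net"
IMAGE = "redis:7"


def network_command() -> list[str]:
    # Attachable overlay network, so standalone containers on any Swarm node can join it.
    return ["docker", "network", "create", "--driver", "overlay", "--attachable", NETWORK]


def run_command(name: str, port: int, core: int) -> list[str]:
    # One Redis node in cluster mode, pinned to a single core.
    return [
        "docker", "run", "-d",
        "--name", name,
        "--network", NETWORK,
        "--cpuset-cpus", str(core),
        IMAGE,
        "redis-server",
        "--port", str(port),
        "--cluster-enabled", "yes",
        "--cluster-config-file", f"nodes-{port}.conf",
        "--cluster-node-timeout", "5000",
    ]


def create_command(nodes: list[str], replicas: int = 2) -> list[str]:
    # All ip:port pairs are passed at once; redis-cli decides which become masters.
    return ["redis-cli", "--cluster", "create", *nodes, "--cluster-replicas", str(replicas)]


if __name__ == "__main__":
    print(shlex.join(network_command()))
    # Containers on one VM: master on core 0, replicas on cores 1 and 2 (core 3 left free).
    for port, core in [(6379, 0), (6380, 1), (6381, 2)]:
        print(shlex.join(run_command(f"redis-{port}", port, core)))
    # Hypothetical overlay addresses for the nine containers.
    nodes = [f"10.0.1.{host}{i}:{6378 + i}" for host in (1, 2, 3) for i in (1, 2, 3)]
    print(shlex.join(create_command(nodes)))
```

Generating the commands from one place keeps ports, cores, and bus ports consistent across the three VMs; the printed `redis-cli` command is run once from inside any container after all nine nodes are up.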

3. Validating Performance and Acknowledging Limitations

Performance validation of the optimized topology confirmed that it uses system resources efficiently. Under load generated by a Python-based load-testing script, each Redis master process consistently consumed a single dedicated CPU core while handling write operations. The load was evenly distributed across all three masters, confirming that the sharding worked as intended and that no single node became a bottleneck. The replica processes, meanwhile, used their own assigned cores for replication without interfering with the masters or with the host system’s operations. A comparison with a traditional nine-VM deployment highlighted the difference in resource allocation. The conventional architecture, with nine nodes and approximately 18 vCPUs in total, delivered high throughput but at a significantly higher infrastructure cost, and much of each machine's CPU capacity sat unused. The optimized three-VM deployment, with only 12 vCPUs in total, achieved comparable throughput and fault tolerance. This cut the total vCPU count by a third (from 18 to 12) and the number of VMs from nine to three, which also lowers costs tied to per-machine licensing, power, and maintenance. The comparison shows that careful placement can deliver the required performance without over-provisioning.
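The resource comparison is simple arithmetic, sketched here in Python with exact fractions. It assumes that the roughly 18 vCPUs of the traditional setup come from nine 2-vCPU VMs and that each Redis process keeps about one core busy with command processing; in the consolidated setup the remaining 3 of 12 vCPUs are the cores deliberately reserved for the OS and Docker.

```python
from __future__ import annotations

from fractions import Fraction

# Assumption: "approximately 18 total CPUs" for nine VMs is read as 2 vCPUs each.
TRADITIONAL = {"vms": 9, "vcpus_per_vm": 2, "redis_processes": 9}
CONSOLIDATED = {"vms": 3, "vcpus_per_vm": 4, "redis_processes": 9}


def total_vcpus(deployment: dict[str, int]) -> int:
    return deployment["vms"] * deployment["vcpus_per_vm"]


def redis_core_share(deployment: dict[str, int]) -> Fraction:
    # Fraction of provisioned vCPUs doing Redis command processing,
    # assuming roughly one busy core per single-threaded Redis process.
    return Fraction(deployment["redis_processes"], total_vcpus(deployment))


def vcpu_savings(before: dict[str, int], after: dict[str, int]) -> Fraction:
    return 1 - Fraction(total_vcpus(after), total_vcpus(before))


if __name__ == "__main__":
    print(total_vcpus(TRADITIONAL), total_vcpus(CONSOLIDATED))
    print(redis_core_share(TRADITIONAL), redis_core_share(CONSOLIDATED))
    print(vcpu_savings(TRADITIONAL, CONSOLIDATED))
```

Under these assumptions, about half of the traditional deployment's vCPUs run Redis, against three quarters in the consolidated one, and the total vCPU count drops by one third.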

Despite its advantages in cost and efficiency, this consolidated topology has trade-offs and practical constraints. Although each Redis process is pinned to its own core, other resources such as network bandwidth and memory bandwidth are still shared at the virtual machine level. Under extremely heavy workloads this can lead to contention, particularly during high-volume replication bursts or while persistence is active: RDB snapshots and AOF rewrites fork a child process, which runs inside the same container and therefore competes with the Redis process for the same pinned core. Recovery is also more involved. A single VM failure takes down three Redis instances at once, one master and two replicas. Redis Cluster still promotes a replica of the lost master automatically, but that replica sits on a VM that already runs a master, so one VM now serves two masters and the surviving masters are left with one replica each. Restoring the intended layout of one master per VM typically requires manual intervention or custom automation, for example issuing CLUSTER FAILOVER on the original master once it has rejoined as a replica; container restart policies can bring a container back, but neither Docker nor Swarm manages Redis roles. Operational visibility also becomes more demanding, since several critical Redis containers run on each VM. A robust observability stack is essential, for example Prometheus with a Redis exporter for metrics and alerting and Grafana for dashboards, to give a clear picture of the health and performance of each individual instance. Finally, if persistence is enabled and several containers share the same underlying storage device, disk I/O can become a bottleneck that raises latency and degrades overall cluster performance.
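Checking the layout after a failure is easier to repeat with a small script. This hypothetical sketch parses `CLUSTER NODES` output (node ID, `ip:port@cport`, comma-separated flags, master ID, then timing, epoch, link state, and slots) and reports any VM running more than one live master, or any replica placed on the same VM as its master. Because every container has its own overlay IP, the `host_of` mapping from address to VM is an assumption the operator must supply; the function names are illustrative.

```python
from __future__ import annotations

from collections import Counter


def parse_cluster_nodes(output: str) -> dict[str, dict]:
    """Parse CLUSTER NODES output into {node_id: {addr, role, master_id, failed}}."""
    nodes = {}
    for line in output.strip().splitlines():
        fields = line.split()
        node_id, addr, master_id = fields[0], fields[1], fields[3]
        flags = fields[2].split(",")
        nodes[node_id] = {
            # The address looks like ip:port@cport, optionally followed by ",hostname".
            "addr": addr.split("@")[0],
            "role": "master" if "master" in flags else "replica",
            "master_id": None if master_id == "-" else master_id,
            "failed": "fail" in flags or "fail?" in flags,
        }
    return nodes


def layout_problems(nodes: dict[str, dict], host_of: dict[str, str]) -> list[str]:
    """List placement problems among live nodes; host_of maps ip:port to a VM name."""
    problems = []
    live = [n for n in nodes.values() if not n["failed"]]
    masters_per_host = Counter(host_of[n["addr"]] for n in live if n["role"] == "master")
    for host, count in masters_per_host.items():
        if count > 1:
            problems.append(f"{host} runs {count} masters")
    for n in live:
        master = nodes.get(n["master_id"]) if n["role"] == "replica" else None
        if master is not None and host_of[n["addr"]] == host_of[master["addr"]]:
            problems.append(f"{n['addr']} replicates a master on the same VM")
    return problems
```

An empty list means the cluster still has one master per VM with replicas on other hosts; a result such as `["vm2 runs 2 masters"]` after a failover is the signal to move the master role back once the failed host has recovered.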

4. An Advanced Blueprint for Redis Deployment

The analysis of this containerized, CPU-aware architecture showed a more efficient way to deploy a multi-master Redis cluster. By combining container orchestration with CPU pinning, the single-threaded execution model of Redis, usually a source of wasted cores, became the basis for dense and predictable placement. The implementation achieved high throughput and tolerance of a single-host failure while running on a significantly smaller infrastructure footprint than the traditional deployment model. The topology showed that careful architectural design can decouple performance from excessive infrastructure cost. The result was a practical blueprint that balances speed, availability, and cost, at the price of the shared-resource and recovery trade-offs described above, and it gives engineers a clear path toward more sustainable, cost-effective Redis deployments.