Meta AI has introduced SWE-RL, a reinforcement learning approach designed to improve the reasoning of large language models (LLMs) on real-world software engineering tasks. The method trains the model on data from GitHub pull requests, exposing it to complex codebases and subtle issues that tools trained on narrower data often miss. By learning from the full lifecycle of software projects, SWE-RL aims to give software engineers practical help with the problems they face every day.

Revolutionizing AI with Real-World Data

Leveraging GitHub Pull Requests

SWE-RL begins by collecting GitHub pull request data from sources such as GHArchive and direct repository clones. The collected data is then filtered to remove noise, such as bot-generated and non-informative changes, so that only high-quality training examples remain. This step is critical because noisy data weakens the model’s ability to learn and leads to suboptimal solutions. The filtering exposes the model to relevant, instructive code changes that reflect the complexity of real-world software development.
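To make the filtering step concrete, here is a minimal sketch of how bot-generated and non-informative pull requests might be dropped. The `PullRequest` fields, the bot-name suffixes, and the lock-file list are illustrative assumptions, not the rules used by the SWE-RL authors.

```python
from dataclasses import dataclass

# Hypothetical record layout: the article does not describe the real schema.
@dataclass
class PullRequest:
    author: str
    title: str
    changed_files: list[str]

# Illustrative heuristics only (assumptions, not the authors' filters).
BOT_SUFFIXES = ("[bot]", "-bot")
NOISE_FILES = ("package-lock.json", "yarn.lock", "poetry.lock")

def is_informative(pr: PullRequest) -> bool:
    """Return True if the PR looks human-authored and touches real source files."""
    if pr.author.lower().endswith(BOT_SUFFIXES):
        return False  # bot-generated change
    if not pr.changed_files:
        return False  # nothing to learn from
    # Drop PRs that only touch auto-generated lock files.
    return not all(path.endswith(NOISE_FILES) for path in pr.changed_files)

def filter_prs(prs: list[PullRequest]) -> list[PullRequest]:
    """Keep only the PRs that pass the heuristics above."""
    return [pr for pr in prs if is_informative(pr)]
```

A real pipeline would likely need richer signals, such as diff size or review activity, but the shape of the step is the same: a predicate applied to every candidate pull request.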

The data collection process is just the starting point. Beyond individual diffs, the curated data captures the complete lifecycle of a change: a detailed issue description, a snapshot of the relevant code, and the corresponding fix. This holistic view lets the model learn the development process end to end, from identifying a problem to implementing and verifying a solution, so it can assist at several stages of software engineering.
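One way to picture such a training example is as a small record that ties the issue, the code snapshot, and the fix together. The sketch below is an assumption about the layout: the class name, field names, and prompt wording are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

@dataclass
class TrainingInstance:
    """Hypothetical container for one pull-request-derived example."""
    issue: str                    # natural-language problem description
    code_context: dict[str, str]  # file path -> file content before the fix
    oracle_patch: str             # the merged fix, used only for scoring

def build_prompt(inst: TrainingInstance) -> str:
    """Assemble the model input from the issue and code; the fix is withheld."""
    files = "\n\n".join(
        f"### {path}\n{content}"
        for path, content in sorted(inst.code_context.items())
    )
    return (
        f"Issue:\n{inst.issue}\n\n"
        f"Code:\n{files}\n\n"
        "Write a patch that resolves the issue."
    )
```

The design point worth noting is that the known fix never appears in the prompt. It is kept aside so that the model's own patch can be compared against it afterwards.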

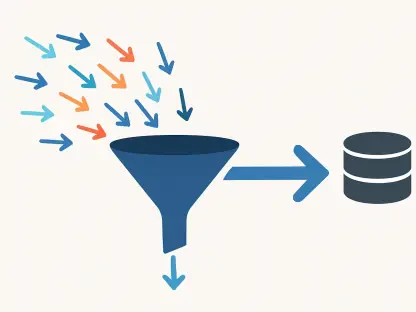

Reward Function Innovation

A significant innovation in SWE-RL is its rule-based reward function. Many training setups for code models rely on a binary pass/fail signal, which is limiting because it cannot distinguish a near-miss from a complete failure. Instead, SWE-RL uses Python’s difflib.SequenceMatcher to compute a similarity score between 0 and 1 for the generated patch against a known good solution. This graded feedback gives credit for partial success, so the model can refine its solutions incrementally over the course of training.

The reward function also penalizes responses that do not meet the expected standards, such as patches that are not in the required format. The model therefore receives two kinds of feedback: a penalty when its output is malformed, and a graded similarity score when it is well formed. Note that textual similarity to the reference fix is a proxy for correctness, not a guarantee of it. By discouraging unusable output and rewarding incremental improvement, this scheme steers the model toward patches that increasingly resemble the fixes developers actually merged.
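The reward described above can be sketched in a few lines of Python. `difflib.SequenceMatcher` and its `ratio()` method are standard-library calls. The penalty value of -1.0 and the `is_well_formed` check are assumptions made for illustration, since the article does not spell out the exact penalty or format rules.

```python
import difflib

FORMAT_PENALTY = -1.0  # assumed value for malformed output

def is_well_formed(patch: str) -> bool:
    """Hypothetical format check: require at least one diff hunk header."""
    return any(line.startswith("@@") for line in patch.splitlines())

def reward(predicted_patch: str, oracle_patch: str) -> float:
    """Penalize malformed patches; otherwise score similarity in [0, 1]."""
    if not is_well_formed(predicted_patch):
        return FORMAT_PENALTY
    matcher = difflib.SequenceMatcher(None, predicted_patch, oracle_patch)
    return matcher.ratio()
```

A patch identical to the reference scores 1.0, a patch that is close but not exact scores somewhere below that, and output that fails the format check receives the penalty regardless of its content.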

Advanced Reinforcement Learning Techniques

Group Relative Policy Optimization (GRPO)

SWE-RL employs Group Relative Policy Optimization (GRPO) for reinforcement learning, which compares multiple generated outputs for the same problem and encourages the model to explore different solutions. This approach fosters deeper reasoning and thoughtful decision-making, as the AI is not confined to a single solution but is instead encouraged to consider various possibilities. By evaluating and learning from multiple potential solutions, the AI can develop a more robust understanding of the problem space, leading to more effective and innovative solutions.

GRPO also helps balance exploration and exploitation, a fundamental challenge in reinforcement learning. Because each output is scored relative to the other outputs sampled for the same problem, the model is pushed toward the most promising approaches in the group while still sampling alternatives. This lets it adapt to different kinds of problems and keep improving across a wide range of software engineering tasks.
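The group comparison at the heart of GRPO can be illustrated with its advantage calculation: each sampled output's reward is standardized against the mean and standard deviation of its group. The sketch below shows only that step and omits the clipped policy-gradient objective and KL regularization used in full GRPO training. The function name and the `eps` constant are illustrative choices.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Standardize rewards within one group of outputs for the same problem."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    # eps avoids division by zero when every output gets the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]
```

Outputs that beat the group average get a positive advantage and are reinforced, while below-average outputs get a negative one. If every output in a group earns the same reward, all advantages are zero and that group contributes no learning signal.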

Training on Robust Models

By applying GRPO on top of a strong base model such as Llama-3.3-70B-Instruct, SWE-RL helps the model internalize deliberate problem-solving strategies. This training exposes the model to a diverse set of issues and fixes, improving its ability to tackle complex software problems. The combination of a capable base model and reinforcement learning yields strong performance on software engineering tasks and also improves results in out-of-domain areas such as general language understanding and mathematical reasoning.

Starting from a strong base model gives SWE-RL a solid foundation for learning and adaptation. Large-scale, high-quality data combined with reinforcement learning lets the model develop a nuanced understanding of software engineering, so it can address specific problems and also generalize to new and unfamiliar tasks. As a result, SWE-RL is useful to developers across a range of applications, from software development to broader AI tasks.

Benefits and Performance

Enhanced Software Engineering Capabilities

The use of real-world data and nuanced feedback enables SWE-RL to better manage the complexities of everyday software engineering tasks. By incorporating detailed information from GitHub pull requests and leveraging advanced reinforcement learning techniques, SWE-RL can address both the technical and contextual aspects of software development. This approach ensures that the AI can provide functional and well-formatted solutions that adhere to coding standards and best practices, making it a reliable and valuable assistant for developers.

SWE-RL’s ability to handle real-world software engineering tasks is further enhanced by its comprehensive understanding of the development lifecycle. By learning from detailed issue descriptions, code snapshots, and corresponding fixes, the AI can provide meaningful insights and solutions at various stages of the development process. This holistic approach ensures that SWE-RL can contribute to both the identification and resolution of issues, making it a versatile and effective tool for developers.

Promising Solve Rates

The resulting model, Llama3-SWE-RL-70B, achieves a 41.0% solve rate on SWE-bench Verified, a human-verified benchmark of real-world GitHub issues. This result suggests the model can rival larger proprietary systems on complex software engineering tasks. It also reflects the combined effect of the curated pull request data, the similarity-based reward function, and GRPO training.

Scaling analyses indicate that increasing the number of repair samples and reproduction tests leads to significant performance improvements, though gains eventually plateau. Additional sampling and testing at inference time therefore help, but with diminishing returns beyond a certain point. Training with GRPO has also produced “aha moments,” where the model adjusts its reasoning strategies and gains deeper insight into a problem. These behaviors contribute to the model’s overall effectiveness and point to room for further advances in AI-driven software engineering.

Generalization to Broader Tasks

Beyond Software Issue Resolution

The model’s improved performance extends beyond software issue resolution to tasks such as function coding, library usage, and mathematical reasoning. This generalization suggests that reinforcement learning on software data fosters broader reasoning skills, enabling the AI to tackle a wide range of challenges in various domains. By leveraging its comprehensive understanding of software development, SWE-RL can apply its knowledge and problem-solving strategies to new and unfamiliar tasks, making it a versatile and valuable tool for developers.

SWE-RL’s ability to generalize its knowledge to broader tasks is a significant advantage, as it demonstrates the model’s potential to contribute to various aspects of software engineering and beyond. By continuously learning and adapting to new challenges, the AI can expand its capabilities and provide meaningful assistance across a wide range of applications. This versatility ensures that SWE-RL remains relevant and valuable in an ever-evolving technological landscape, where new challenges and opportunities constantly emerge.

Future Research Directions

SWE-RL shows that reinforcement learning on GitHub pull request data, guided by a simple rule-based reward, can improve how LLMs reason about complex codebases and the subtle problems that other tools frequently overlook. If the approach continues to develop, it could change how AI assists with everyday engineering work such as code maintenance, bug fixes, and updates. Meta AI’s aim is to give engineers a more capable tool for streamlining software development, raising expectations for efficiency and precision across the industry.