The growing impact of open source models in artificial intelligence (AI) has ignited passionate debate among tech companies, academics, and policymakers. As generative AI advances rapidly, questions about the benefits and risks of open source software have become more pressing. The Chinese AI startup DeepSeek recently thrust itself into the spotlight by releasing a model that reportedly rivals top-tier American AI software at a fraction of the cost. The release raises the stakes of the debate over open AI models.

Definition and Criteria of Open Source AI

Open source software, including AI models, is characterized by freely accessible source code that anyone can use, modify, and distribute. The Open Source Initiative (OSI) sets specific terms for distribution and access that software must meet to be considered truly open source. In the context of AI, being open source involves not only providing the source code but also offering detailed information about the training data and allowing extensive usage and modification. This level of openness contrasts sharply with closed source systems, where developers maintain tight control over the software, often limiting modifications and offering less transparency.

While many prominent tech companies, such as Meta Platforms Inc. and French startup Mistral, label their AI models as open source, the degree to which they adhere to true open source standards varies significantly. Companies like Meta often release what are termed “open weight models,” meaning they publish the model’s weights and some source code but typically omit detailed information about the training data. For instance, Meta’s Llama series has faced criticism from the OSI for its restrictive licensing terms and lack of comprehensive training data details. Similarly, DeepSeek’s recent release, the R1 model, did not include the training code or the training data, raising questions about how open the model really is and highlighting the inconsistencies in what various developers define as open source.

Transparency and Access Among Top AI Developers

Transparency and access are central to the open source debate, because the openness companies promise often falls short in practice. Some tech giants, including Meta, describe their models as open source while providing limited access to critical components. The Llama series, for instance, ships with weights and some source code but without detailed information about its training data, which has drawn scrutiny from organizations like the OSI. This discrepancy highlights the need for clearer definitions and consistent standards within the open source AI community.

DeepSeek’s R1 release has sparked similar questions. The missing code and training data are not merely technicalities; they undermine a core principle of open source software, which is meant to give developers a thorough understanding of how a model operates. The inconsistency in transparency and access across prominent AI developers underscores the need for stringent guidelines to ensure genuinely open and accessible AI. Better adherence to established standards will be crucial to fostering trust and collaboration within the AI community.

Benefits and Advocacy for Open Source

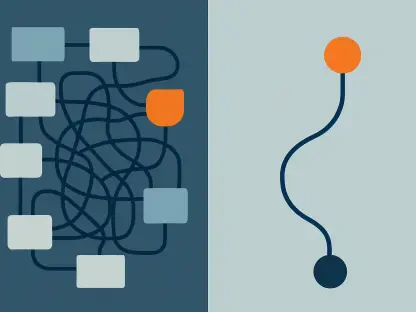

Proponents of open source AI models champion their potential to democratize technology and spur innovation through reduced costs and widespread accessibility. By eliminating licensing fees, open source software makes advanced AI tools more affordable, fostering broader adoption and enabling smaller players to compete in the AI field. This openness also enhances accountability, as external developers can scrutinize and understand the model’s operations, ensuring a level of transparency that closed systems inherently lack.

Aaron Levie, CEO of Box Inc., highlights the risks of market monopolization by powerful companies that control closed systems. He argues that dominant AI providers could marginalize developers and inhibit broader ecosystem growth by monopolizing the economic benefits. For companies like Meta, adopting open source practices is a strategic move to encourage widespread use of their software, thus amplifying their influence within the AI community. This approach not only democratizes access to cutting-edge technology but also cements these companies’ positions as pivotal players in the AI landscape. The widespread use of open source AI can significantly contribute to innovation, as developers and researchers from diverse backgrounds can collaborate and build upon existing models to create new and improved applications.

Risks Associated with Open Systems

Despite the many advantages of open source AI, critics point to several significant risks associated with open systems, one of the most prominent being security concerns. In the U.S., there is apprehension about adopting AI technology from geopolitical rivals such as China due to potential national security risks and surveillance issues. The sharing of open or semi-open AI models could provide competitive advantages to countries striving to surpass U.S. leadership in technology, leading to a strategic imbalance.

The potential for misuse of open source AI models also cannot be ignored. Open systems, by their very nature, can be exploited by malicious actors for harmful purposes, leading to unintended and possibly dangerous consequences. This risk underscores the importance of implementing robust security measures and regulatory oversight to ensure the safe and responsible use of open source AI technologies. Balancing the benefits of open source AI with the need for security and control remains a significant challenge for both developers and policymakers. As the debate continues, it is clear that a nuanced approach is necessary to navigate the complex landscape of open source AI while mitigating the associated risks.

DeepSeek’s Motivation and Strategic Adoption of Open Source

DeepSeek’s adoption of an open approach, albeit with some limitations, reflects a strategic effort to ease concerns over Chinese control and to broaden its reach, particularly in Western markets. This strategy is reminiscent of Meta’s approach, which has captured a substantial share of the AI ecosystem through open source practices. Meta CEO Mark Zuckerberg has emphasized the competition with China, advocating for American-developed open models to maintain a geopolitical advantage. This competitive landscape is driving companies like DeepSeek to use open source practices to build a strong presence in the global AI market.

By offering its model at a fraction of the cost of its American counterparts, DeepSeek aims to attract a wide range of users and developers. This approach not only enhances its market presence but also fosters innovation and collaboration within the AI community. DeepSeek’s relatively open approach is a calculated move designed to build trust and credibility while positioning itself as a significant player in the AI industry. By providing an affordable alternative to top-tier American AI software, DeepSeek is poised to make a substantial impact on the AI landscape and encourage broader adoption and development.

Technological Innovation and Efficiency

DeepSeek’s R1 model stands out for its efficiency. Unlike its U.S. counterparts, which rely on vast numbers of high-powered chips, DeepSeek’s team used a limited number of less advanced chips. They employed reinforcement learning, a technique that trains the system by rewarding correct answers and penalizing incorrect ones. This frugal use of resources has drawn attention, along with speculation about whether DeepSeek exploited Western technology to cut development costs. Some, including OpenAI, have accused DeepSeek of employing “distillation,” a controversial practice in which one model is trained to reproduce the behavior of a more powerful one.
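To make the reinforcement learning idea concrete, here is a minimal sketch of learning from rewards for correct answers and penalties for incorrect ones. It is a toy illustration only, not DeepSeek’s training method: the single multiple-choice question, the function names (`softmax`, `train_policy`), the reward values of +1 and -1, and the learning rate are all assumptions made for this example.

```python
import math
import random


def softmax(prefs):
    """Turn raw preference scores into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(correct_index, num_answers=4, steps=2000, lr=0.1, seed=0):
    """Learn to pick the correct answer using only reward feedback.

    Hypothetical toy setup: one question with `num_answers` options.
    The learner is never told which answer is right; it only sees a
    reward of +1 (correct) or -1 (incorrect) after each guess.
    """
    rng = random.Random(seed)
    prefs = [0.0] * num_answers  # start with no preference
    for _ in range(steps):
        probs = softmax(prefs)
        # Sample a guess from the current policy.
        choice = rng.choices(range(num_answers), weights=probs)[0]
        reward = 1.0 if choice == correct_index else -1.0
        # Policy-gradient (REINFORCE) update: raise the probability of
        # rewarded guesses and lower the probability of penalized ones.
        for k in range(num_answers):
            grad = (1.0 if k == choice else 0.0) - probs[k]
            prefs[k] += lr * reward * grad
    return softmax(prefs)


if __name__ == "__main__":
    final_probs = train_policy(correct_index=2)
    print([round(p, 3) for p in final_probs])
```

After training, nearly all of the probability mass should sit on the rewarded answer. Training a large language model this way involves far more machinery, but the feedback loop of guess, reward, and adjust is the same basic idea.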

Distillation involves using the outputs of one AI model to train a smaller, less capable model to achieve similar capabilities. While this method can be highly effective, it raises legal and ethical questions, especially around the infringement of proprietary technologies. OpenAI, for example, insists that using its models’ outputs to train competing models violates its usage terms. Ongoing investigations are exploring whether DeepSeek’s advances involved such practices and, if so, whether they infringed on proprietary AI technologies. The controversy underscores the complex legal and ethical landscape surrounding AI development and highlights the need for clear guidelines and enforcement mechanisms that protect proprietary technologies and foster fair competition.
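As a rough illustration of how distillation works in principle, the sketch below trains a tiny “student” to match the softened output distribution of a “teacher” on a single input. This is one common textbook formulation (matching soft labels with a temperature), offered under stated assumptions; it is not a description of what DeepSeek or any other company did. The teacher scores, the function names, and the hyperparameters are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert scores to probabilities; higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's soft outputs to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distill(teacher_logits, steps=500, lr=0.5, temperature=2.0):
    """Fit student scores to the teacher's outputs by gradient descent.

    The student never sees ground-truth labels, only the teacher's
    output distribution (the defining feature of distillation).
    """
    student = [0.0] * len(teacher_logits)
    p = softmax(teacher_logits, temperature)
    for _ in range(steps):
        q = softmax(student, temperature)
        # Gradient of KL(p || q) w.r.t. student score k is (q_k - p_k) / T.
        student = [
            s - lr * (qk - pk) / temperature
            for s, qk, pk in zip(student, q, p)
        ]
    return student


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # hypothetical teacher scores for 3 classes
    student = distill(teacher)
    print("loss before:", distillation_loss(teacher, [0.0, 0.0, 0.0]))
    print("loss after: ", distillation_loss(teacher, student))
```

The loss shrinks toward zero as the student’s outputs converge on the teacher’s. With language models, the same idea is typically applied at scale, often by training the smaller model on text or token probabilities produced by the larger one.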

Governmental Perspectives and Regulatory Stances

The growing influence of open source models in AI has drawn the attention of tech companies, academics, and policymakers alike. As generative AI evolves rapidly, discussion of the advantages and risks of open source software becomes more significant. DeepSeek’s launch of a model that reportedly matches the performance of leading American AI software at a much lower cost highlights the rising stakes and amplifies the conversation about open AI models.

DeepSeek’s entry into the high-stakes AI race underscores the potential for open source models to disrupt the existing market and democratize access to cutting-edge technology. Critics argue that open source models could pose security risks and expose vulnerabilities, while proponents believe that open access accelerates innovation and leads to more robust and versatile AI solutions. As AI technology continues to expand, the global conversation about open source models will likely intensify, shaping the future of artificial intelligence development and deployment.